Why Has ChatGPT Become Lazy?

PLUS: Video Synthesis with Spatial-Temporal Fusion, Tiny Llama, AI Copilot for your phone

Today’s top AI Highlights:

FlowVid: Taming Imperfect Optical Flows for Consistent Video-to-Video Synthesis

Task Contamination: Language Models May Not Be Few-Shot Anymore

1.1 Billion Parameter Model Trained on 3T Tokens in Just 90 Days

Microsoft Copilot App Comes to iOS

& so much more!

Read time: 3 mins

Latest Developments 🌍

Spatial Data and Temporal Clues for Better Videos 🌠

While diffusion models have significantly advanced image-to-image (I2I) synthesis, extending these improvements to video has been challenging because it is hard to keep frames consistent with one another. FlowVid addresses this by combining spatial conditions with temporal optical flow clues from the source video. Unlike previous methods that relied solely on optical flow, FlowVid is designed to tolerate errors in the estimated flow.

Key Highlights:

FlowVid's unique method combines spatial conditions with temporal optical flow clues from the source video. This dual approach is critical in managing the inaccuracies in optical flow estimation, a challenge that has traditionally impeded the progress of V2V synthesis technologies.

The framework significantly accelerates the video generation process, producing a 4-second video at 30 FPS with a resolution of 512×512 in just 1.5 minutes. This performance is markedly faster than current leading methods, including CoDeF, Rerender, and TokenFlow. Additionally, FlowVid's seamless integration with existing I2I models facilitates a wide range of video modifications, from stylization to object swaps and local edits.

The model is trained on 100k real videos from Shutterstock. In comparative user studies, FlowVid was preferred 45.7% of the time, ahead of TokenFlow (40.4%), Rerender (10.2%), and CoDeF (3.5%).
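To put the speed claim in perspective, here is a quick back-of-the-envelope sketch in Python. It uses only the figures quoted above (a 4-second clip at 30 FPS generated in 1.5 minutes); the variable names are ours, and the per-frame figure is a simple average, not a measurement of how FlowVid actually schedules its work.

```python
# Figures quoted in the highlights above.
clip_seconds = 4          # length of the generated video
fps = 30                  # frames per second
generation_minutes = 1.5  # reported wall-clock generation time

total_frames = clip_seconds * fps                          # frames to synthesize
generation_seconds = generation_minutes * 60               # wall-clock time in seconds
seconds_per_frame = generation_seconds / total_frames      # average cost per frame
slowdown_vs_realtime = generation_seconds / clip_seconds   # how far from real time

print(f"{total_frames} frames in {generation_seconds:.0f} s")
print(f"~{seconds_per_frame:.2f} s per frame on average")
print(f"~{slowdown_vs_realtime:.1f}x slower than real time")
```

So the headline number works out to well under a second per 512×512 frame on average.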

The Answer to “ChatGPT Has Become Lazy” 🐼

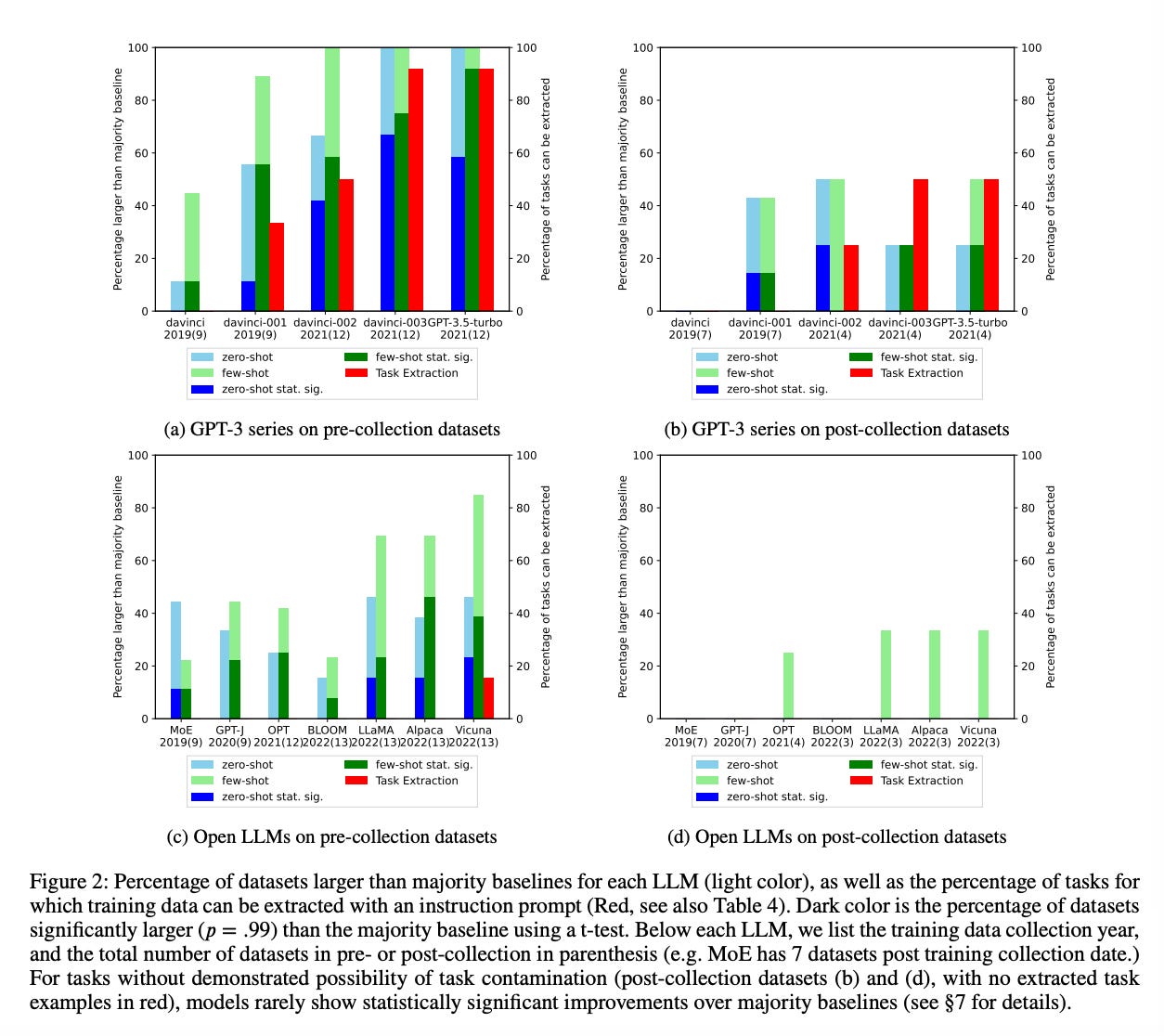

A recent investigation into the performance of LLMs, covering the GPT-3 series and open-source models such as Fairseq MoE, GPT-J, Bloom, OPT, LLaMA, Alpaca, and Vicuna, has brought to light the issue of task contamination: models perform better on tasks they have already been exposed to during training. The study analyzed the models across various datasets and found a significant gap in performance depending on when each dataset was released. This challenges the assumed effectiveness of LLMs in zero-shot and few-shot settings, since their successes may be partly explained by prior exposure to similar tasks.

Key Highlights:

LLMs, including the GPT-3 series, exhibited better results on datasets that were available prior to their training data collection. This contrasts with their relatively poorer performance on newer datasets, underscoring the impact of task contamination where prior exposure to task-related data influences outcomes.

In controlled settings where task contamination was ruled out, these models failed to demonstrate significant superiority over simple majority baselines, which always predict the most frequent label. This held in both zero-shot and few-shot settings, indicating possible limitations in the models' inherent learning and generalization capabilities.

The study also highlights the difficulties involved in accurately identifying and quantifying task contamination. Given the variations in data formatting, the vast size of datasets, and the complexity of models, detecting whether a model has been exposed to specific task data beforehand poses a significant challenge.
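For readers unfamiliar with the majority baseline mentioned above, here is a minimal sketch of the idea in Python. The labels and "model predictions" are made-up toy data, and the function names are ours; this is not the study's evaluation code.

```python
from collections import Counter


def majority_baseline_accuracy(train_labels, test_labels):
    """Accuracy of always predicting the most common training label."""
    majority_label, _ = Counter(train_labels).most_common(1)[0]
    hits = sum(1 for label in test_labels if label == majority_label)
    return hits / len(test_labels)


def accuracy(predictions, gold_labels):
    """Fraction of predictions that match the gold labels."""
    hits = sum(1 for p, g in zip(predictions, gold_labels) if p == g)
    return hits / len(gold_labels)


# Toy data, invented purely for illustration.
train_labels = ["pos", "pos", "neg", "pos"]
test_labels = ["pos", "neg", "pos", "pos", "neg"]
model_predictions = ["pos", "pos", "neg", "pos", "neg"]

baseline_acc = majority_baseline_accuracy(train_labels, test_labels)
model_acc = accuracy(model_predictions, test_labels)

print(f"majority baseline: {baseline_acc:.2f}")
print(f"model:             {model_acc:.2f}")
```

In this toy case the model does no better than always answering "pos", which is the kind of result the study reports for uncontaminated tasks.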

Tiny Model Trained on 3T Tokens in 90 Days 🫰

A 1.1 billion parameter model trained in just 90 days! The TinyLlama project, an open endeavor to pre-train a 1.1B Llama model on 3 trillion tokens, began training on September 1, 2023, and has now completed that run. The whole run used just 16 A100-40G GPUs.

Key Highlights:

TinyLlama follows the architecture and tokenizer of Llama 2, allowing for easy integration with existing projects built on Llama. It uses Grouped Query Attention (GQA), in which groups of query heads share key and value heads, reducing memory use during inference. The training data was a curated set of around 950 billion tokens sourced from SlimPajama and Starcoderdata, with certain subsets specifically included or excluded to improve training quality, so reaching 3 trillion tokens means roughly three passes over the data.

TinyLlama's training benefited from SOTA optimization techniques. These included distributed training across multiple GPUs and methods like Flash Attention 2 and improved layer normalization. The result was a throughput of 24K tokens per second per GPU.

TinyLlama's compact yet robust design makes it suitable for use in environments with limited computational capacity, such as edge devices for real-time translation and in-game dialogue generation.
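Do the numbers add up? Here is a rough sanity check in Python using only the figures quoted above (16 GPUs, 24K tokens per second per GPU, 90 days, about 950 billion tokens of data). It assumes the quoted throughput was sustained around the clock with no downtime, which is our simplification, and the variable names are ours.

```python
# Figures quoted in this section.
num_gpus = 16
tokens_per_second_per_gpu = 24_000
training_days = 90
dataset_tokens = 950_000_000_000  # ~950 billion tokens of curated data

# Assumption: throughput is sustained 24/7 for the full 90 days.
seconds_of_training = training_days * 24 * 60 * 60
total_tokens = num_gpus * tokens_per_second_per_gpu * seconds_of_training
passes_over_data = total_tokens / dataset_tokens

print(f"tokens processed: {total_tokens / 1e12:.2f} trillion")
print(f"passes over the dataset: {passes_over_data:.2f}")
```

Under that assumption the cluster processes just under 3 trillion tokens in 90 days, or a little over three passes over the dataset, which lines up with the headline claim.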

Tools of the Trade ⚒️

Microsoft Copilot: AI chat assistant powered by GPT-4 and DALL·E 3 for tasks like email drafting, story writing, and multilingual content creation, along with generating high-quality images from text for design and storytelling. Available for both Android and iOS devices.

Windows AI Studio: Combines Azure AI and Hugging Face tools for easy AI app development. Browse, download, fine-tune, and test AI models locally for Windows apps. All computations are local, so ensure your device is sufficiently powerful.

ResearchGOAT: AI-powered research platform offering rapid customer insights in multiple languages and geographies, with up to 90% lower costs compared to traditional consumer research. It enables user-defined questions, conducts AI-moderated interviews, and provides comprehensive analysis.

Snzzle: A single link for showcasing live projects, featuring AI-generated descriptions, analytics, and enhanced visibility to recruiters. It offers lead generation, showcases coding skills, and facilitates networking within a professional community.

😍 Enjoying it so far? TWEET NOW to share with your friends!

Hot Takes 🔥

Just as everything from the steam engine to the microprocessor was driven by an intense dialogue between physics and engineering, so the coming decades will be defined by a convergence of biology and engineering ~ Mustafa Suleyman

Feel appreciation for the LLMs that painfully descend down the loss curves, for answering our endless questions. The boring monotonous day to day tasks are what will lead to greatness and value. ~ Aravind Srinivas

Meme of the Day 🤡

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![[optimize output image] [optimize output image]](https://substackcdn.com/image/fetch/$s_!oB9v!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F26df58cf-c7ca-427d-af38-bac1b715aea5_800x450.gif)

![[video-to-gif output image] [video-to-gif output image]](https://substackcdn.com/image/fetch/$s_!jIkh!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F7a4f37b2-2ed1-4825-ac8f-56236019ff56_800x385.gif)