Reproduce GPT-2 in 90 mins for just $20

PLUS: OpenAI is training a new AI model

Today’s top AI Highlights:

Study shows GPT-4 outperforms human analysts in financial statement analysis

Andrej Karpathy shows how to reproduce GPT-2 (124M) in 90 minutes for $20

OpenAI forms a Safety Committee but without independent voices

Prepare for your upcoming technical interview with this AI interviewer

& so much more!

Read time: 3 mins

Latest Developments 🌍

Financial Decisions with GPT-4 for Better Accuracy 📊

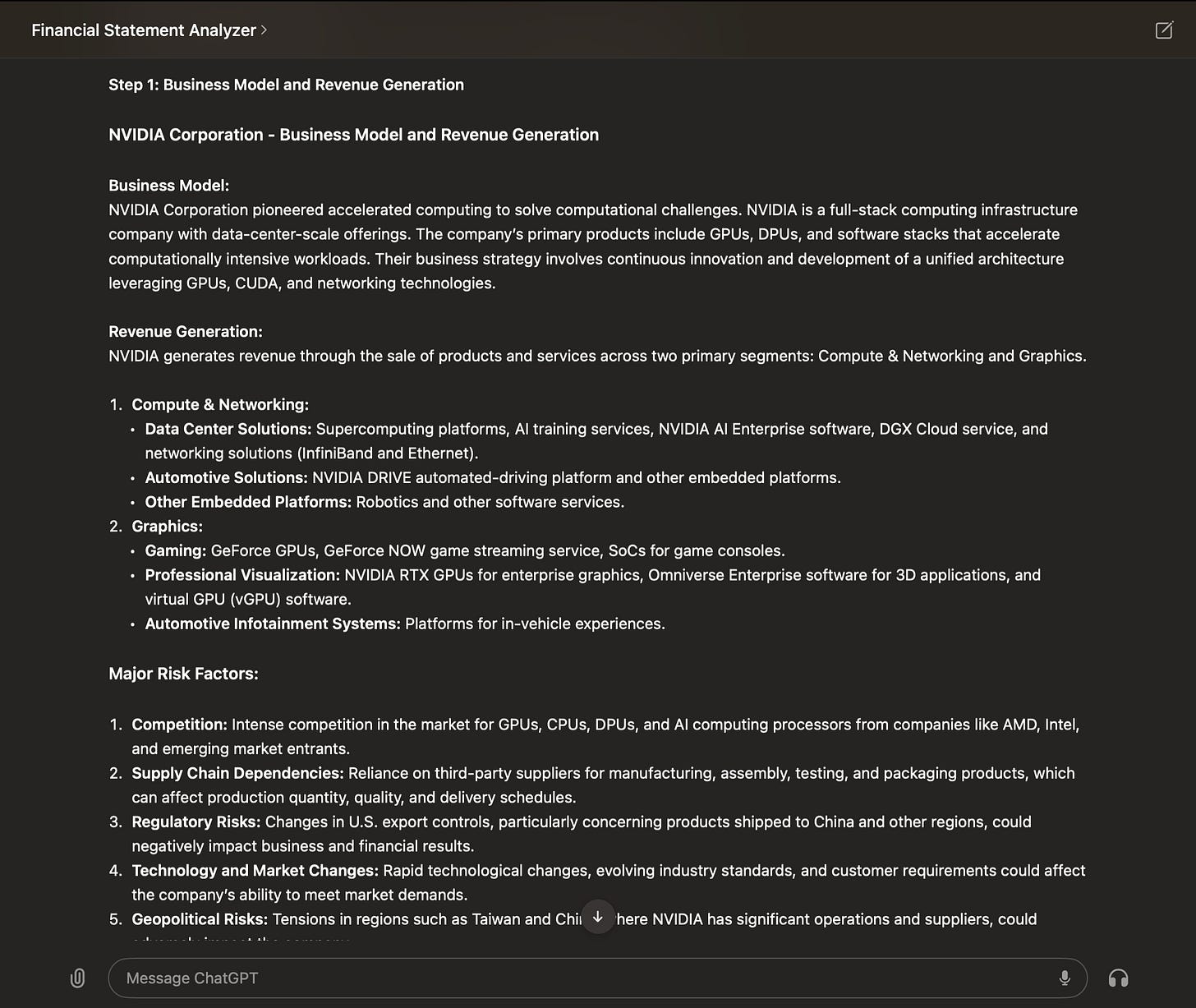

LLMs are now outperforming even human financial analysts at financial statement analysis and at predicting earnings changes! A recent study from the University of Chicago tested GPT-4's ability to mimic the work of human financial analysts by giving it standardized, anonymized financial statements and instructing it to follow a step-by-step process. The results are significant: AI can rival human experts at this complex task and even outperform them in certain areas.

Key Highlights:

Performance: Using Chain-of-Thought (CoT) prompting to mirror human reasoning, GPT-4 predicted the direction of future earnings changes with 60.35% accuracy, compared with 52.71% for professional human analysts.

Comparison with Models: GPT-4's performance was on par with specialized artificial neural networks trained to predict earnings changes, which scored 60.45%.

Complementary Strengths: GPT-4 and human analysts complement each other. GPT-4 excelled at predicting earnings for smaller, less profitable companies, where human analysts struggled. Analysts, in turn, added value by incorporating broader contextual knowledge.

Economic Value: Trading strategies based on GPT-4’s predictions yielded higher Sharpe ratios and alphas, indicating better risk-adjusted returns than those based on human analysts or other models.

Companion App: To showcase the study, the team built a custom GPT called Financial Statement Analyzer that analyzes a company's financial statements step by step.
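For readers less familiar with the "Economic Value" metric: the Sharpe ratio is the mean excess return of a strategy divided by the standard deviation of those excess returns, commonly annualized by multiplying by the square root of the number of periods per year. The minimal sketch below assumes monthly returns, a constant risk-free rate, and that annualization convention; the two return series are made-up illustrative numbers, not data from the study, and the study's exact methodology may differ.

```python
import math
import statistics


def sharpe_ratio(returns, risk_free=0.0, periods_per_year=12):
    """Annualized Sharpe ratio: mean excess return / std of excess returns,
    scaled by sqrt(periods_per_year). Uses the sample standard deviation."""
    if len(returns) < 2:
        raise ValueError("need at least two return observations")
    excess = [r - risk_free for r in returns]
    sd = statistics.stdev(excess)
    if sd == 0:
        raise ValueError("zero volatility: Sharpe ratio is undefined")
    return statistics.mean(excess) / sd * math.sqrt(periods_per_year)


# Hypothetical monthly returns for two illustrative strategies (NOT from the study).
strategy_a = [0.02, 0.01, -0.005, 0.015, 0.01, 0.02]
strategy_b = [0.03, -0.02, 0.01, 0.025, -0.01, 0.02]

print(f"Strategy A Sharpe: {sharpe_ratio(strategy_a):.2f}")
print(f"Strategy B Sharpe: {sharpe_ratio(strategy_b):.2f}")
```

Strategy A scores higher because its returns are both larger on average and less volatile, which is what "better risk-adjusted returns" means in practice.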

Karpathy’s Open-Source Project to Train GPT-2 (124M) ⏱️

90 minutes and $20 to train an entire LLM! OpenAI's smallest GPT-2 model has 124 million parameters, and you can now build your own version of it without burning a hole in your pocket. Andrej Karpathy's llm.c repo on GitHub retrains the GPT-2 (124M) model in a mind-blowing 90 minutes for a mere $20. Using a single 8X A100 80GB SXM node, Karpathy demonstrates efficient, cost-effective model training, with room for further optimization.

Key Highlights:

Training Efficiency: Reproducing the GPT-2 (124M) model now takes only 90 minutes on a single 8X A100 node. llm.c consists of ~4,000 lines of C/CUDA code optimized for high performance and reaches ~60% Model FLOPs Utilization (MFU), making the process both fast and resource-efficient.

Cost Reduction: The entire training run costs approximately $20 on a node that rents for ~$14 per hour (1.5 hours × $14 ≈ $21). This lowers the barrier for AI enthusiasts, enabling more widespread experimentation and innovation.

High Performance and Validation: The model is trained on 10 billion tokens from the FineWeb dataset and reaches a HellaSwag accuracy of 29.9%, surpassing the original GPT-2 (124M). It also outperforms the OpenAI checkpoint on the FineWeb validation set.
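As a rough sanity check on the ~60% MFU and ~$20 figures, the back-of-the-envelope sketch below uses the common 6 × parameters × tokens approximation for training FLOPs (forward plus backward pass, ignoring attention FLOPs) and assumes an A100 dense BF16 peak of 312 TFLOPS per GPU. Both are assumptions, not numbers from Karpathy's write-up, so treat the output as a ballpark estimate only.

```python
# Back-of-the-envelope MFU and cost estimate for the GPT-2 (124M) run.
# Assumptions (not from the original write-up): training FLOPs ~= 6 * params * tokens,
# which ignores attention FLOPs, and an A100 dense BF16 peak of 312 TFLOPS per GPU.

PARAMS = 124e6               # GPT-2 (124M) parameter count
TOKENS = 10e9                # FineWeb tokens seen during training
NUM_GPUS = 8                 # one 8X A100 80GB SXM node
PEAK_FLOPS_PER_GPU = 312e12  # assumed BF16 dense peak, FLOP/s
TRAIN_HOURS = 1.5            # ~90 minutes
NODE_PRICE_PER_HOUR = 14.0   # ~$14 per hour for the whole node


def estimate_mfu(params, tokens, num_gpus, peak_per_gpu, hours):
    """Fraction of the hardware's peak FLOPs spent on model math."""
    model_flops = 6 * params * tokens
    available_flops = num_gpus * peak_per_gpu * hours * 3600
    return model_flops / available_flops


def estimate_cost(hours, price_per_hour):
    """Rental cost of the node for the given number of hours."""
    return hours * price_per_hour


mfu = estimate_mfu(PARAMS, TOKENS, NUM_GPUS, PEAK_FLOPS_PER_GPU, TRAIN_HOURS)
cost = estimate_cost(TRAIN_HOURS, NODE_PRICE_PER_HOUR)
print(f"Estimated MFU: {mfu:.0%}")
print(f"Estimated cost: ${cost:.0f}")
```

Under these assumptions the MFU comes out around 55%, in the same ballpark as the reported ~60%; the gap is plausibly the attention FLOPs that the 6 × N × D rule leaves out, plus the fact that "90 minutes" is approximate. The cost works out to about $21, consistent with the ~$20 headline.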

OpenAI Has Started Training a New AI Model 💡

OpenAI is losing credibility, and AI ethicists are raising concerns about how the company approaches the increasingly important topic of AI safety. Over the past few weeks:

OpenAI saw the resignations of high-profile employees, including co-founder Ilya Sutskever and Jan Leike, co-lead of OpenAI's Superalignment team.

Leike publicly criticized OpenAI under Sam Altman's leadership for neglecting responsible AI development.

Reports revealed that OpenAI imposed severely restrictive terms on ex-employees, under which their vested equity could be taken back if they criticized the company.

Additionally, two former OpenAI board members argued publicly that companies like OpenAI cannot be left to govern themselves and that governments need to step in and regulate the industry.

All of these issues have damaged perceptions of OpenAI's approach to safety and responsible AI development. In response, OpenAI has announced a new Safety Committee responsible for making recommendations on critical safety and security decisions for OpenAI projects and operations.

As its first task, the Committee will spend the next 90 days evaluating OpenAI's processes and safeguards and then share its recommendations with the Board.

But here’s the problem: the committee consists entirely of OpenAI insiders, including Sam Altman himself. There are no independent outside members who could offer objective, unbiased recommendations.

OpenAI says it has “begun training its next frontier model” and that the team “is proud to build and release models that are industry-leading on both capabilities and safety.”

Adding to the skepticism, a new Vox article reveals that Altman and other executives were aware of the restrictive provisions in the exit documents despite their public denials. OpenAI reportedly used high-pressure tactics to push ex-employees into signing these agreements: the documents were reportedly kept deliberately long, and employees were given very little time to review them, leaving them unable to fully understand the terms or seek legal counsel in time.

These actions continue to raise serious questions about OpenAI’s transparency and commitment to ethical practices.

✍️ Build Personalized Marketing Chatbots with Google Gemini

Learn how to build personalized marketing chatbots with Google Gemini and LoRA in just 60 minutes. Join this FREE webinar for live demos and hands-on code sharing. Register now before the spots get filled!

😍 Enjoying it so far? Share it with your friends!

Tools of the Trade ⚒️

Jung.ai: Instant, affordable mental health care through a hybrid of AI and human psychologists, with assessments and treatment starting within 72 hours. You can share your concerns anonymously with the AI, get a psychologist’s review, begin a personalized treatment plan, and receive continuous support from an AI chatbot.

Preps: Prepare for your upcoming technical interview with AI. Preps AI simulates real-world technical interviews, offering practice sessions that mimic platforms like Zoom or Google Meet. It provides real-time coding challenges and detailed feedback on your performance.

Storynest.ai: An AI platform that helps you create interactive stories, novels, screenplays, and more. It offers tools for character creation, world-building, and editing to enhance your writing process and make your narratives more engaging.

Awesome LLM Apps: Build LLM apps that use RAG to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple text prompts. These apps let you retrieve information, chat, and extract insights directly from content on these platforms.

Hot Takes 🔥

It is odd when folks get mad when I say AI is already useful. We are only 18 months into LLMs & there have already been many controlled trials finding LLMs are useful, firms have public case studies with real metrics, etc

Lots of reasons to critique AI, “uselessness” isn’t one. ~ Ethan Mollick

Your brain consumes 20 W of power. A single GPU consumes a kilowatt. A data center has tens of thousands of them, and is still not as powerful as your brain. Chew on that before you say AGI is imminent. ~ Pedro Domingos

GPT-4o is a heavily distilled version of their most powerful unreleased model ~ Rohan Paul

Meme of the Day 🤡

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, and your support is what keeps me going. If you find value in what you read, share it with your friends!