Real-time Object Detection without Training

PLUS: Open-source AI background remover, OpenAI's steps towards AI transparency

Today’s top AI Highlights:

OpenAI Enhances Image Transparency with C2PA Integration

Natural language guidance of high-fidelity text-to-speech models with synthetic annotations

YOLO-World: Real-Time Open-Vocabulary Object Detection

Open-Source Background Removal by Bria AI

DeepMind Looks Out for Visually Impaired

& so much more!

Read time: 3 mins

Latest Developments 🌍

OpenAI Embeds C2PA Metadata in Generated Images

OpenAI has announced the integration of C2PA specifications into images generated through DALL·E 3 in ChatGPT and its API, aimed at enhancing transparency and authenticity in digital media. This move embeds metadata, including provenance details, so that users can verify the origin of images created with OpenAI's tools.

Key Highlights:

C2PA is an open technical standard that allows publishers, companies, and others to embed metadata in media to verify its origin and related information, extending beyond AI-generated images to encompass various media types. The integration of C2PA metadata aims to address concerns regarding image authenticity and provenance in the digital landscape.

Images generated through ChatGPT on the web and OpenAI's API serving the DALL·E 3 model will now include C2PA metadata. Users can use platforms like Content Credentials Verify to check whether an image was generated using OpenAI's tools. However, this metadata can be removed from an image, so its absence does not prove an image's origin, and vigilance in verifying where images come from is still needed.

The addition of C2PA metadata has only a small effect on latency and file size. OpenAI's illustrative examples show file size increases ranging from 3% to 32%, depending on the image format. These changes do not affect the quality of image generation.

A Scalable Solution for Expanding Voice Styles and Identities 🎙️

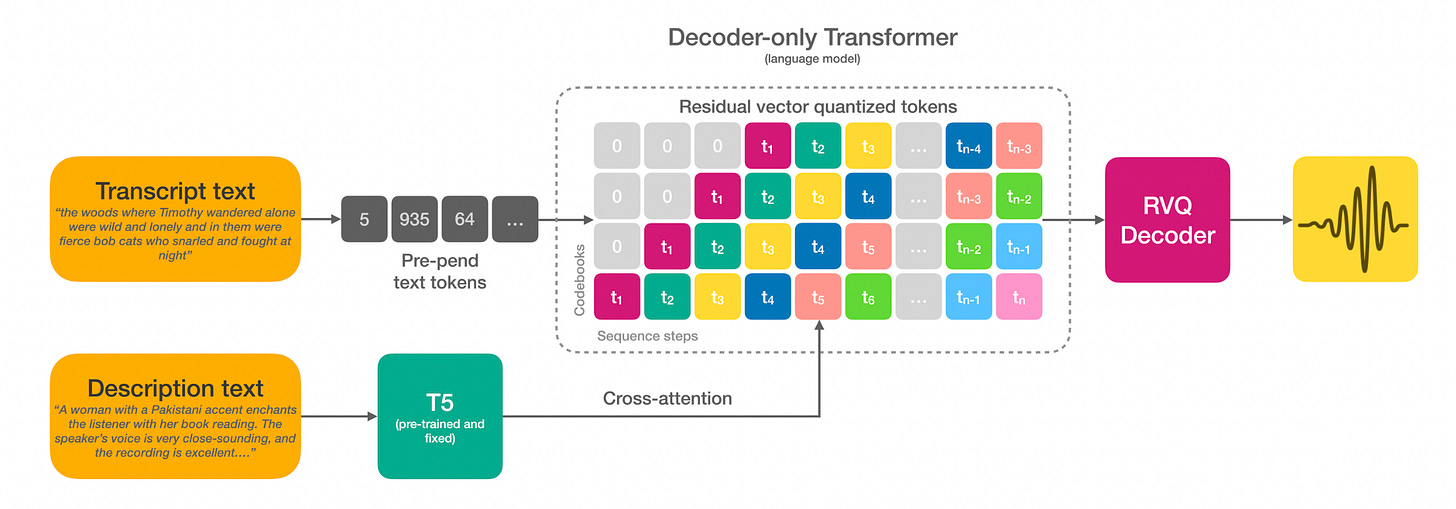

While text-to-speech (TTS) models have demonstrated strong performance in achieving natural-sounding speech from large-scale datasets, they've been hampered by the need for reference recordings to control speaker identity and style. Guiding these models using text instructions struggles to scale due to the dependency on human-labeled data.

Here’s a scalable solution by Stability AI that leverages natural language for intuitive control over speaker identity and style without relying on reference recordings. This method involves labeling a 45,000-hour dataset on various speech aspects and using it to train a speech-language model, significantly enhancing audio fidelity and enabling the generation of high-fidelity speech across a wide spectrum of accents and conditions.

Key Highlights:

The method involves labeling vast amounts of speech data with details like gender, accent, and speaking style, and then training a speech language model on this labeled dataset. This model can generate a diverse array of speech sounds and styles by simply using text prompts, making it possible to create high-quality speech in various accents and conditions without manual annotation.

By incorporating just 1% of high-quality audio in the dataset and using advanced audio codecs, the team achieved remarkably high fidelity in speech generation. This approach ensures the synthesized speech is clear, natural-sounding, and closely resembles human voice, setting a new standard for the audio quality of TTS systems.

The model was put through detailed objective and subjective tests, where it demonstrated a strong ability to match desired speech attributes like gender and accent with high accuracy (94% for gender). In listener evaluations, the model's outputs were preferred over those of competitors for their closeness to natural language descriptions and overall sound quality.

Real-Time Open-Vocabulary Object Detection 👁️

Object detectors have been limited to pre-defined, trained object categories, which confines their applicability in dynamic real-world scenarios. YOLO-World addresses this limitation by adding open-vocabulary detection capabilities to the YOLO series of detectors. The new model achieves 35.4 Average Precision (AP) on the LVIS dataset while maintaining an inference speed of 52.0 Frames Per Second (FPS), outperforming many state-of-the-art methods in both accuracy and speed.

Key Highlights:

YOLO-World addresses the longstanding issue of fixed vocabulary in object detection by incorporating vision-language modeling and pre-training on extensive datasets. This enables the detection of a wide array of objects in a zero-shot manner, thereby significantly expanding the model's versatility beyond pre-defined categories.

The model introduces the Re-parameterizable Vision-Language Path Aggregation Network (RepVL-PAN) and utilizes a region-text contrastive loss for training. These innovations foster a more nuanced interaction between visual and linguistic information, enabling YOLO-World to understand and detect objects described in natural language accurately.

Beyond its 35.4 AP on the challenging LVIS dataset at 52.0 FPS, YOLO-World emphasizes ease of deployment for real-world applications. Its efficient design also adapts to downstream tasks such as open-vocabulary instance segmentation and referring object detection.
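To make the open-vocabulary idea concrete, here is a minimal sketch of how region embeddings can be matched against text embeddings for a user-supplied vocabulary, and how a region-text contrastive loss can be written as a cross-entropy over those similarities. This is a simplified illustration, not YOLO-World's actual implementation: the function names, the toy 2-D embeddings, and the `temperature` value are all assumptions made for the example.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def similarity_matrix(region_embs, text_embs):
    """Cosine similarity between every region embedding and every text embedding."""
    regions = [l2_normalize(r) for r in region_embs]
    texts = [l2_normalize(t) for t in text_embs]
    return [[sum(a * b for a, b in zip(r, t)) for t in texts] for r in regions]


def classify_regions(region_embs, text_embs, vocabulary):
    """Zero-shot labeling: each region takes the vocabulary entry it is most similar to."""
    sims = similarity_matrix(region_embs, text_embs)
    return [vocabulary[max(range(len(row)), key=row.__getitem__)] for row in sims]


def region_text_contrastive_loss(region_embs, text_embs, targets, temperature=0.1):
    """Mean cross-entropy between region-text similarities and the assigned text index.

    `temperature` is a hypothetical scaling factor chosen for this sketch.
    """
    sims = similarity_matrix(region_embs, text_embs)
    total = 0.0
    for row, target in zip(sims, targets):
        logits = [s / temperature for s in row]
        peak = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in logits))
        total += log_sum_exp - logits[target]
    return total / len(targets)


# Toy data: in a real system these embeddings would come from an image
# backbone and a text encoder; here they are hand-written placeholders.
vocabulary = ["dog", "bicycle"]
text_embs = [[1.0, 0.0], [0.0, 1.0]]
region_embs = [[0.9, 0.1], [0.2, 0.8]]

labels = classify_regions(region_embs, text_embs, vocabulary)
loss_matched = region_text_contrastive_loss(region_embs, text_embs, [0, 1])
loss_mismatched = region_text_contrastive_loss(region_embs, text_embs, [1, 0])
```

Because the vocabulary is just a list of text embeddings, changing what the detector looks for means swapping the list rather than retraining. The loss is lower when regions are paired with their matching text (`loss_matched`) than when the pairing is flipped (`loss_mismatched`), which is the signal that pulls matching region and text embeddings together during training.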

Tools of the Trade ⚒️

SurgicalAR Vision: MEDIVIS’ SurgicalAR app is now on Apple Vision Pro, turning 2D medical imaging scans (MRI/CT) into 3D to enhance surgical precision. SurgicalAR Vision leverages spatial computing and AI for advanced visualization of medical imaging and other healthcare data, with features for image review, image manipulation, measurement, and 3D visualization (multi-planar reconstructions and volume rendering).

BRIA-RMBG-1.4: Open-source background removal by Bria AI, now live on Hugging Face. RMBG v1.4 excels at separating foreground from background across diverse image categories, surpassing current open models.

Lookout: A second pair of eyes for blind and low-vision people. Developed by DeepMind, Lookout makes it easier to get information about the world around you and to do daily tasks such as sorting mail and putting away groceries more efficiently. The new Image Q&A feature offers detailed descriptions of images plus the ability to ask questions via voice and text.

localllm: Open-source set of tools and libraries that lets developers use quantized models from Hugging Face via a command-line utility, running LLMs on CPU and memory without a GPU within the Google Cloud Workstations environment. localllm enhances productivity, reduces infrastructure costs, improves data security, and integrates with Google Cloud services.

😍 Enjoying so far, TWEET NOW to share with your friends!

Hot Takes 🔥

Civilization itself is AGI ~ Beff Jezos — e/acc

GPT-4 Mystery Explained!

The reason no one is able to overtake GPT-4 for 18 months, is we have not been competing with a static target at all….

We are competing with a constantly moving and improving one! ~ Bindu Reddy

Meme of the Day 🤡

Ah shit... that's where I left it

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![aD2t4RCWT0HFcXJ9.mp4](https://substackcdn.com/image/fetch/$s_!lgXQ!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F4b8dde1b-fe6e-4b5c-a817-6d149cffe190_600x338.gif)