Open-Source Multimodal LLM Competing with GPT-4V and Gemini

PLUS: Strongest and Greenest Open-Source Multilingual Model, New Midjourney Niji V6 for Anime Art

Today’s top AI Highlights:

Redefining Multimodal Generalization with Next Gen Qwen VLM

Eagle 7B: Soaring past Transformers with 1 Trillion Tokens Across 100+ Languages (RWKV-v5)

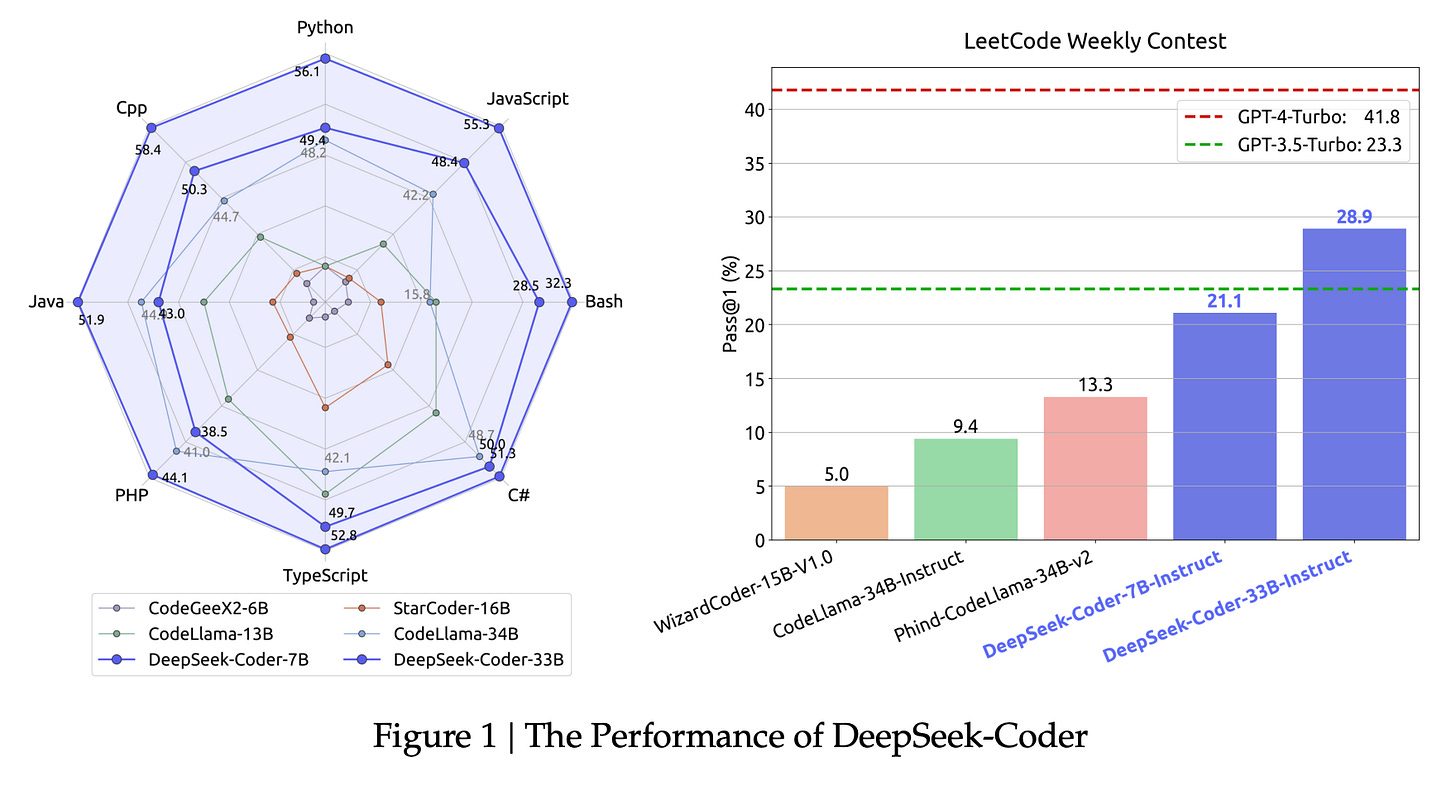

DeepSeek-Coder: When LLM Meets Programming -- The Rise of Code Intelligence

Tenstorrent Unveils Grayskull AI Accelerator

Midjourney Niji V6 Is Here, Designed for Anime Art

Generate Personalized Code Directly from Figma

& so much more!

Read time: 3 mins

Latest Developments 🌍

Qwen VLM Surpasses GPT-4V and Gemini 🚀

The Qwen team open-sourced Qwen-VL, a multimodal model that builds on the Qwen LLM and its unified multimodal pretraining. The goal was to address the limited generalization of multimodal models. Building on this foundation, the Qwen-VL series has recently been extended with two more advanced versions, Qwen-VL-Plus and Qwen-VL-Max.

Key Highlights:

The Qwen-VL-Plus and Qwen-VL-Max models have substantially improved image-related reasoning abilities. They are adept at recognizing, extracting, and analyzing intricate details in images and texts. Additionally, they support high-definition images with resolutions above one million pixels and accommodate various aspect ratios. This technological evolution enables more precise and comprehensive visual and textual data analysis.

These models showcase performance on par with Gemini Ultra and GPT-4V in multiple text-image multimodal tasks. Specifically, Qwen-VL-Max excels in Chinese question answering and text comprehension, surpassing GPT-4V and Gemini.

The latest Qwen-VL models handle a range of practical applications, from basic recognition of objects, celebrities, and landmarks to writing poetry inspired by visuals. They can pinpoint and query specific elements within images, making informed judgments and deductions. Additionally, these models excel in visual reasoning, solving complex problems based on visual inputs, and efficiently recognizing and processing text information in images.

World's Greenest 7B Model 🍀

The RWKV-v5 architecture, known for its linear transformer design, enters a new phase with the introduction of Eagle 7B, billed as the strongest multilingual model currently available in open source. The model was trained on 1.1 trillion tokens across more than 100 languages.

Key Highlights:

Eagle 7B, a linear transformer model with 7.52 billion parameters, is recognized for its low inference cost, reported to be 10-100 times lower than that of traditional Transformer models. It also ranks as the world's greenest model in the 7B class.

The model has been tested across multilingual benchmarks such as xLAMBADA, xStoryCloze, xWinograd, and xCOPA, covering 23 languages. These benchmarks focus on commonsense reasoning, and the results show a substantial leap in performance over its predecessor, RWKV v4, especially in non-English languages.

In English, Eagle 7B competes closely with, and in some cases surpasses, the top models in its class such as Falcon, LLaMA2, and MPT-7B. It was evaluated across 12 different benchmarks covering commonsense reasoning and world knowledge.

Bridging the Open-Source Gap in Code 🧑‍💻

Open-source models for code intelligence have long been overshadowed by their closed-source counterparts. The DeepSeek-Coder series is a range of open-source LLMs, with sizes from 1.3 billion to 33 billion parameters, trained on 2 trillion tokens from 87 programming languages, offering a new level of accessibility and performance in code intelligence tools for developers and researchers.

Key Highlights:

DeepSeek-Coder models are trained with a fill-in-the-blank task over a 16K context window, using the Fill-In-Middle (FIM) approach. This results in strong performance in code completion and infilling. Particularly noteworthy is DeepSeek-Coder-Instruct 33B, which surpasses OpenAI's GPT-3.5 Turbo in several benchmarks, showing the model's effectiveness in practical coding applications.

A pioneering approach in the DeepSeek-Coder series is the organization of pre-training data at the repository level. This strategy significantly enhances the models' capability in cross-file code generation, offering a nuanced understanding of code context that is critical for complex software development tasks.

The series represents a significant contribution to the field of code intelligence. It not only introduces advanced, scalable models proficient in understanding 87 programming languages but also lays new groundwork in the training of code-focused LLMs. The rigorous evaluations against a variety of benchmarks demonstrate the series' ability to surpass existing open-source code models.
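To make the Fill-In-Middle idea above more concrete, here is a minimal sketch of how a FIM training example could be assembled: a span is cut out of a source file, and the model is shown the prefix and suffix and asked to produce the missing middle. The sentinel strings (`<FIM_PREFIX>`, `<FIM_SUFFIX>`, `<FIM_MIDDLE>`), the `FimExample` class, and the `make_fim_example` helper are hypothetical names chosen for illustration. They are not DeepSeek-Coder's actual special tokens or training code, and the prefix-suffix-middle ordering is just one common arrangement.

```python
from dataclasses import dataclass

# Placeholder sentinel strings, chosen for this illustration only.
# Real models define their own special tokens in the tokenizer.
FIM_PREFIX = "<FIM_PREFIX>"
FIM_SUFFIX = "<FIM_SUFFIX>"
FIM_MIDDLE = "<FIM_MIDDLE>"


@dataclass
class FimExample:
    prompt: str  # what the model is shown
    target: str  # what the model is expected to generate


def make_fim_example(code: str, start: int, end: int) -> FimExample:
    """Cut code[start:end] out as the 'middle' and build a prompt in
    prefix-suffix-middle order, so the model sees both sides of the hole."""
    if not 0 <= start <= end <= len(code):
        raise ValueError("need 0 <= start <= end <= len(code)")
    prefix, middle, suffix = code[:start], code[start:end], code[end:]
    prompt = f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"
    return FimExample(prompt=prompt, target=middle)


if __name__ == "__main__":
    source = "def add(a, b):\n    return a + b\n"
    # Hide the body of the return statement and ask the model to fill it in.
    hole_start = source.index("return")
    hole_end = source.index("\n", hole_start)
    example = make_fim_example(source, hole_start, hole_end)
    print(example.prompt)
    print("target:", example.target)
```

In an editor, the same layout would be used at inference time: the text before the cursor becomes the prefix, the text after it becomes the suffix, and the model's completion is inserted at the cursor.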

Bridging Hardware and AI Innovation ✨

Tenstorrent has unveiled its first AI accelerator, Grayskull. It comes in two variants: the E75, a compact card designed for 75 watts of power consumption, and the E150, a more robust version designed for 150 watts. The development kit is aimed at developers and small to medium businesses. The E75 is priced at $599 and the E150 at $799, positioning these devices as accessible development tools for those looking to engage with Tenstorrent's hardware and software ecosystem.

A key focus of this launch is hardware-software co-design, so that the Grayskull accelerators are not just powerful but also approachable for programmers. To that end, Tenstorrent introduces “Buda,” a compiler that simplifies the deployment of AI models on Grayskull devices, supporting popular frameworks like PyTorch and TensorFlow.

Moreover, Tenstorrent is opening its doors to the developer community by making their bare metal software stack open source, offering detailed insights into the hardware's capabilities and encouraging feedback. This strategy reflects a commitment to fostering a collaborative and innovative environment where developers can explore new applications and optimizations for AI workloads.

Tools of the Trade ⚒️

Midjourney Niji v6: The new version of Niji, Midjourney's model designed specifically for anime art, is out. Highlights include improved handling of complex prompts, text rendering for words placed in quotes, and a "raw style" option for non-anime visuals. Features like Vary, Pan, and Zoom are set to fully launch in February.

Anima's Code Personalization: Generate personalized code directly from Figma by using prompts. It includes presets for adding basic logic, responsiveness, SEO tags, and more. You can also teach Anima your coding style and conventions by providing a sample of your own code.

Podnotes: Repurpose podcasts into various forms of content. It offers features for generating transcripts, summaries, articles, blog posts, LinkedIn posts, tweets, audiograms, and over 100 other content assets in more than 19 languages.

Circleback: AI-powered meeting assistant that generates detailed notes, action items, and reminders from both virtual and in-person meetings, supporting over 100 languages across platforms like Google Meet, Microsoft Teams, Zoom, and WebEx. It offers transcription, timestamped video navigation, follow-up task automation, and more.

Hot Takes 🔥

Before: mastering any skill requires 10,000 hours of practice.

Now: mastering any skill requires 10,000 GPUs. ~ Bojan Tunguz

This year it looks like >4 companies will reach GPT-4 level models

Given its lead, one could guess OpenAI should be at GPT-4.5 or 5

This should reshape some segments of both AI world, as well as open up the next set of industries to transformation by GenAI ~ Elad Gil

Meme of the Day 🤡

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!