Neuralink's First Human Brain Chip Implant

PLUS: Meta's CodeLlama Beats GPT-4, Extending Context Window by 100x

Today’s top AI Highlights:

Neuralink Implants its first Brain Chip in a Human

Soaring from 4K to 400K: Extending LLM's Context with Activation Beacon

Meta’s Code Llama 70B Beats GPT-4 at Code

All-in-one AI tool for Text-to-Video, Animation, Voice to Video, Talking Heads, Hand-Drawn Videos, and much more

& so much more!

Read time: 3 mins

Latest Developments 🌍

Neuralink implants Brain Chip in actual Human 🧠

Elon Musk's Neuralink has achieved a new milestone: the first human has received their brain-computer interface implant. The early data indicates effective neuron spike detection by the device. This event marks a significant step for Neuralink, transitioning from experimental trials in animals to real-world applications in humans.

Neuralink, a venture spearheaded by Musk, is developing technology that connects the human brain directly with computers. Its device is about the size of a coin and is implanted in the skull, with tiny wires extending a short distance into the brain to read neuron activity. The technology aims to allow individuals, particularly those with paralysis or other severe neurological conditions, to control devices like smartphones or computers through thought alone.

Extending Llama-2’s Context Length by 100x 🧠

Extending the limited context window of LLMs traditionally incurs considerable training and inference costs, and often hurts the model's performance on shorter contexts. To address this, researchers have developed Activation Beacon, which compresses the LLM's raw activations into more compact forms so the model can perceive much longer contexts within its existing window. Activation Beacon extends the context length of the Llama-2-7B model by 100 times, from 4K to 400K tokens, while maintaining strong performance on both long-context generation and understanding tasks.

Key Highlights:

Activation Beacon stands out as a plug-and-play module that condenses the LLM’s raw activations into a more compact form. This innovative approach allows the model to process significantly longer contexts without expanding the actual size of the context window. Its design ensures that the original capabilities of the LLM for shorter contexts are fully preserved, while simultaneously providing the ability to handle longer contexts efficiently.

Training Activation Beacon is both time- and resource-efficient. It is trained on an auto-regression task, conditioned on a diverse mix of beacons with varying condensing ratios, and requires only 10K training steps. Training completes in less than 9 hours on a single machine with 8 A800 GPUs, which makes it practical to add to existing LLMs without extensive resources.

In terms of performance, Activation Beacon not only extends the Llama-2-7B model's context length by an impressive 100 times but also demonstrates superior results in long-context generation and understanding tasks compared to other methods. This extension to 400K tokens is achieved without compromising the model's efficiency in memory and time during both training and inference phases, highlighting Activation Beacon's effectiveness in handling extensive contextual data.
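To make the condensing idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the authors' implementation: the real module learns how to condense activations, whereas the hypothetical `condense` helper below simply averages groups of vectors as a stand-in. The `effective_context` helper only does the arithmetic behind the "4K to 400K" claim, assuming a 4,096-token window and a condensing ratio of 100.

```python
from typing import List

Vector = List[float]


def condense(activations: List[Vector], ratio: int) -> List[Vector]:
    """Toy stand-in for condensing: average every `ratio` consecutive vectors.

    Assumption: mean pooling replaces the learned condensing used by the
    actual method. It only shows how the sequence length shrinks.
    """
    if ratio < 1:
        raise ValueError("ratio must be a positive integer")
    condensed: List[Vector] = []
    for start in range(0, len(activations), ratio):
        group = activations[start:start + ratio]
        dim = len(group[0])
        # Element-wise mean over the vectors in this group.
        condensed.append(
            [sum(vec[i] for vec in group) / len(group) for i in range(dim)]
        )
    return condensed


def effective_context(window_tokens: int, ratio: int) -> int:
    """Raw tokens a fixed window can cover if each slot holds `ratio` tokens."""
    return window_tokens * ratio


if __name__ == "__main__":
    toy = [[1.0], [3.0], [5.0], [7.0]]
    print(condense(toy, ratio=2))
    print(effective_context(4096, 100))
```

With a ratio of 100, a 4,096-slot window covers 409,600 raw tokens, which is where the roughly 400K figure comes from.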

Best Open-Source Model for Code Generation 🧑‍💻

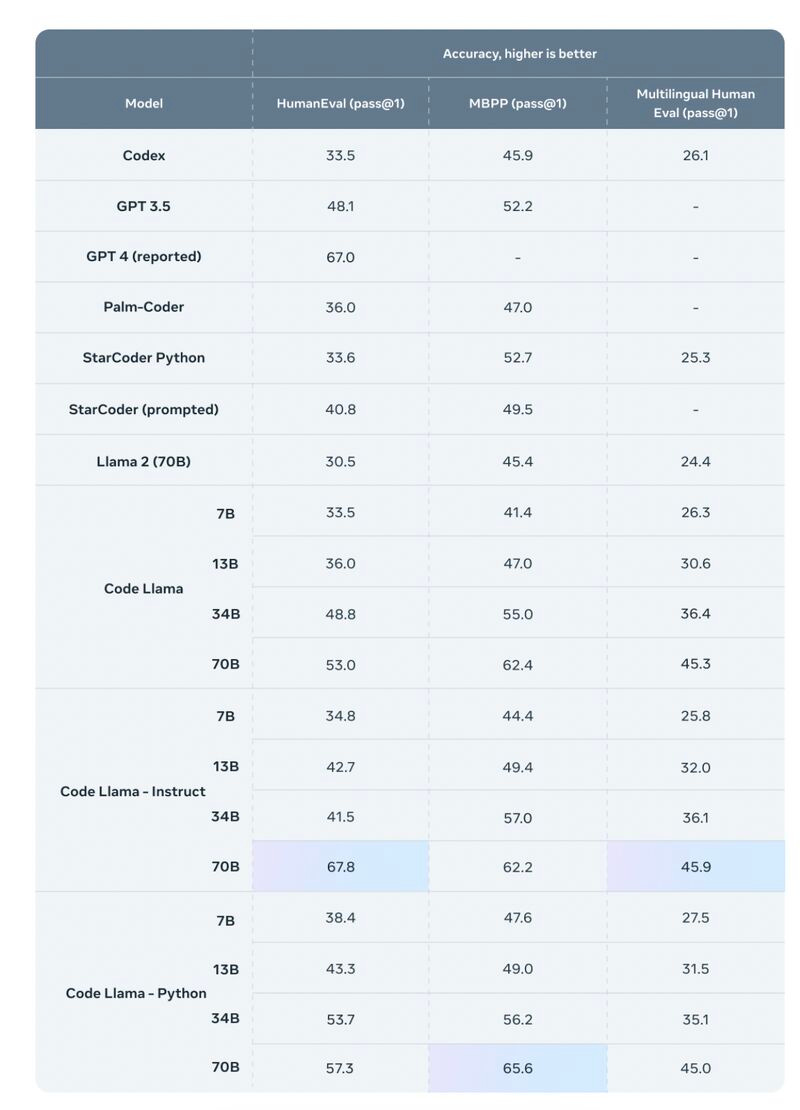

Meta has open-sourced Code Llama 70B, its latest and most advanced code generation AI model. Scoring 53% on the HumanEval benchmark, Code Llama 70B is better at generating and debugging complex code than earlier versions. It outperforms GPT-3.5, and its instruction-tuned variant is reported to edge past GPT-4's score, closing the gap between open and closed models.

Key Highlights:

Code Llama 70B, with its 70 billion parameters, scores 53% on the HumanEval benchmark. This surpasses GPT-3.5, which scored 48.1%, and moves the base model closer to GPT-4's 67% mark. The improved accuracy points to its potential for handling complex coding queries more effectively.

Alongside the base Code Llama 70B, Meta has released two specialized variants: Code Llama - Python, tuned for Python, and Code Llama - Instruct, tuned to follow natural-language instructions. Together, these three versions of the code generator are designed to help developers not just write code but also debug and refine their existing code.

Meta's release of Code Llama 70B occurs in a competitive landscape where other tech giants have also introduced AI code generators. Notably, Amazon launched CodeWhisperer in April, while Microsoft has been harnessing OpenAI's model for GitHub Copilot. This highlights the growing trend among major tech companies to develop and refine AI tools for coding.
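For readers wondering what a HumanEval percentage measures: scores like these are usually reported as pass@k, the chance that at least one of k generated solutions passes a problem's unit tests. The sketch below uses the standard unbiased estimator for a single problem. The function name and the sample counts are illustrative assumptions, not figures from Meta's evaluation.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem.

    n: samples generated, c: samples that passed the tests, k: attempt budget.
    Computes 1 - C(n - c, k) / C(n, k), the probability that a random
    size-k subset of the n samples contains at least one passing sample.
    """
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    if n - c < k:
        # Fewer than k failures exist, so every size-k subset has a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Hypothetical numbers: 10 samples for one problem, 5 of them correct.
    print(pass_at_k(n=10, c=5, k=1))
```

The benchmark score is the average of this value over all problems, so a 53% pass@1 means roughly half of the problems are solved on the first attempt.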

Tools of the Trade ⚒️

Steve AI: All-in-one AI-powered video generation tool that lets users create various types of videos, including animations and training videos, from text, scripts, or audio. It offers features such as an AI Avatar builder, a vast library of assets, and multiple video output styles.

Supadash: Connect your databases and instantly generate AI-powered charts to visualize data and track metrics about your apps. It simplifies the process by eliminating the need for writing code or SQL queries, enabling users to create dashboards for their applications in seconds.

CodeAnt AI: AI-driven tool designed to detect and automatically fix bad code across the codebase. It uses a combination of AI and rule-based engines to identify and rectify issues like anti-patterns, dead and duplicate code, complex functions, and security vulnerabilities, while also documenting the entire codebase.

Lilac: A dataset transformation platform designed to enhance data quality for artificial intelligence, particularly LLMs. It provides a suite of tools for data editing, PII detection, duplication handling, and fuzzy-concept search, along with the Lilac Garden feature for efficient dataset computations and embedding.

😍 Enjoying so far, TWEET NOW to share with your friends!

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!
