Multimodal GPT-4 in Humanoid Robot 🤖

PLUS: Meta's Gesture-Based Computing, Adobe's Pixel-level Control for Music, Copilot++ to auto-edit code

Today’s top AI Highlights:

Transforming Intent into Action: Meta's Gesture-Based Computing

New open coding LLM beats other coding LLMs twice its size

Adobe releases the Firefly of music generation

Multimodal GPT-4 meets humanoid robot: Figure 🤝 OpenAI

Copilot++: The first and only copilot that suggests edits to your code

& so much more!

Read time: 3 mins

Latest Developments 🌍

Hand Gestures Become Computer Commands 🤟

Meta has been using AI to develop non-invasive techniques for understanding what a person intends to do and how the brain functions. One example is the system it developed last October for real-time decoding of images from the brain. Now the company has developed a wristband that lets people control computers with simple wrist and hand gestures. The wristband translates intentional neuromotor commands into computer input, making the interaction simple and intuitive.

Key Highlights:

It uses surface electromyography (sEMG) to non-invasively sense muscle activations. The device stands out because it simplifies translating neuromotor commands into computer inputs, offering a practical solution for day-to-day use. It can be easily put on or removed.

The team has developed neural network decoding models that work across different individuals without personalized calibration. The model, trained on data from more than 6,000 participants, achieves a 7% character error rate in offline handwriting decoding.

Initial tests with naive users have shown promising results: nearly one gesture detection per second, and 17.0 adjusted words per minute in handwriting tasks. Furthermore, personalizing the handwriting models with minimal adjustments has been shown to improve performance by up to 30%.
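For readers wondering what a "character error rate" measures, here is a minimal sketch. It assumes the common definition (edit distance between the decoded text and the reference, divided by the reference length); the source does not say exactly how Meta computes its figure, and the sample strings below are made up for illustration.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance: minimum inserts, deletes, and substitutions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # delete a reference character
                curr[j - 1] + 1,     # insert a hypothesis character
                prev[j - 1] + cost,  # substitute, or match at no cost
            ))
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """CER under the assumed definition: edits / reference length."""
    if not ref:
        raise ValueError("reference text must be non-empty")
    return edit_distance(ref, hyp) / len(ref)


# Hypothetical example: what the user meant to write vs. what was decoded.
reference = "hello world"
decoded = "hallo wrld"
cer = character_error_rate(reference, decoded)
print(f"CER: {cer:.1%}")
```

Under this definition, a 7% rate would mean roughly 7 character edits are needed per 100 characters of intended text.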

New Benchmark in Open-Source AI Code Competence 🧑‍💻

BigCode has just unveiled StarCoder2, the latest generation of open LLMs for code, along with an expansive dataset, The Stack v2. This release marks a significant expansion in the capabilities and scale of coding LLMs. With models available in sizes of 3 billion, 7 billion, and 15 billion parameters, StarCoder2 is poised to enhance coding practices across numerous programming languages.

Key Highlights:

The largest model, StarCoder2-15B, was trained on over 4 trillion tokens across 600+ programming languages, showcasing its extensive coverage and versatility. Its architecture uses Grouped Query Attention and a context window of 16,384 tokens.

As the backbone for training StarCoder2 models, The Stack v2 represents the largest open code dataset currently available, scaling up to 67.5TB from its previous version's 6.4TB, with a deduplicated size of 32.1TB. This upgrade not only includes better language and license detection but also incorporates improved filtering heuristics and groups data by repositories to add context to the training material.

StarCoder2 models have demonstrated impressive performance across the board, with the StarCoder2-15B variant notably outshining models twice its size, including the renowned CodeLlama-34B and DeepSeekCoder-33B, in various benchmarks. Particularly, it excels in math and code reasoning tasks, showcasing its robust capabilities even in low-resource programming languages.
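To give a feel for the Grouped Query Attention mentioned above, here is a rough sketch of the core idea: several query heads share one key/value head, which shrinks the key/value cache that has to be kept for a long context. The layer count, head counts, and head size below are hypothetical and are not StarCoder2's actual configuration; only the 16,384-token context comes from the text.

```python
def kv_head_for(query_head: int, num_query_heads: int, num_kv_heads: int) -> int:
    """Return the key/value head that a given query head reads from."""
    if num_query_heads % num_kv_heads != 0:
        raise ValueError("query heads must divide evenly into KV heads")
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Size of the cached keys and values (the factor 2 covers K and V)."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical toy configuration, chosen only to keep the numbers small.
NUM_LAYERS = 4
NUM_QUERY_HEADS = 8
NUM_KV_HEADS = 2
HEAD_DIM = 64
CONTEXT = 16_384  # context window quoted in the text

mapping = [kv_head_for(h, NUM_QUERY_HEADS, NUM_KV_HEADS)
           for h in range(NUM_QUERY_HEADS)]
full = kv_cache_bytes(NUM_LAYERS, NUM_QUERY_HEADS, HEAD_DIM, CONTEXT)
grouped = kv_cache_bytes(NUM_LAYERS, NUM_KV_HEADS, HEAD_DIM, CONTEXT)

print("query head -> KV head:", mapping)
print(f"cache with one KV head per query head: {full / 2**20:.0f} MiB")
print(f"cache with grouped KV heads:           {grouped / 2**20:.0f} MiB")
```

In this toy setup the cache shrinks by the same factor as the number of key/value heads, which is why the technique pairs well with long context windows.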

Pixel-level Control for Music 🎼

Adobe will roll out its new generative AI tool for music generation and editing, "Project Music GenAI Control." This tool will let you generate music from text prompts and offers extensive editing capabilities to tailor the audio to your specific requirements, much like what Adobe did with Firefly for images.

You can specify genres or moods like “powerful rock,” “happy dance,” or “sad jazz” to produce music. But how is it different from other reigning text-to-music models like Meta’s Audiobox? This tool will let you edit your music with fine-grained control. Its features let you adjust aspects of the music such as melody reference, tempo, structure, and intensity levels, and create repeatable loops.

It’s perfect for making catchy intros and outros for your YouTube videos or setting the right mood in your podcasts without having to comb through endless tracks online.

Multimodal GPT-4 meets Humanoid Robot 🦾

Figure, an AI robotics company specializing in the development of general-purpose humanoid robots, has successfully raised $675M in a Series B funding round, valuing the company at $2.6B. The round saw contributions from notable investors including Microsoft, OpenAI Startup Fund, NVIDIA, and Jeff Bezos through Bezos Expeditions.

In a further strategic move, Figure has partnered with OpenAI to develop next-generation AI models for humanoid robots, leveraging both OpenAI's cutting-edge AI research and Figure's expertise in robotics hardware and software.

Figure has been ambitious about its bipedal robot, Figure 01, aiming to deploy it soon for real-world tasks. The company has been sharing its progress on social media, showing the robot carrying out tasks such as making coffee with a coffee machine or placing a crate on a conveyor belt, fully autonomously.

Tools of the Trade ⚒️

Copilot++: Designed to enhance code editing by predicting and implementing complete edits with a single Tab press, based on recent changes and understanding the coder's intentions. It focuses on making code modifications rather than just writing new code.

PocketPod: AI-generated podcasts tailored to individual interests, providing a unique way to consume information ranging from daily news updates to deep dives into specific topics in a familiar podcast format.

Thinkbuddy: AI integrated into the native macOS environment. You can talk to it, type out your thoughts, or even snap a screenshot when you're stuck. Create your own shortcut keys and engage with AI instantly. You can even choose the model you prefer, whether GPT-4, Gemini, or GPT-3.5.

Trace plugin in Figma: Swiftly convert your designs into live SwiftUI prototypes, significantly reducing the development time for new apps by allowing instant previews, code viewing, editing, and exporting capabilities.

😍 Enjoying it so far? TWEET NOW to share with your friends!

Hot Takes 🔥

Highly self directed, competent and agentic people tend to experience dependent employment as a form of slavery. There is a future timeline where AI tools enable everyone to become highly self directed, competent and agentic. In this future, unemployment may be a non-issue. ~ Joscha Bach

things are accelerating. pretty much nothing needs to change course to achieve agi imo. worrying about timelines is idle anxiety, outside your control. you should be anxious about stupid mortal things instead. do your parents hate you? does your wife love you? ~ roon

Meme of the Day 🤡

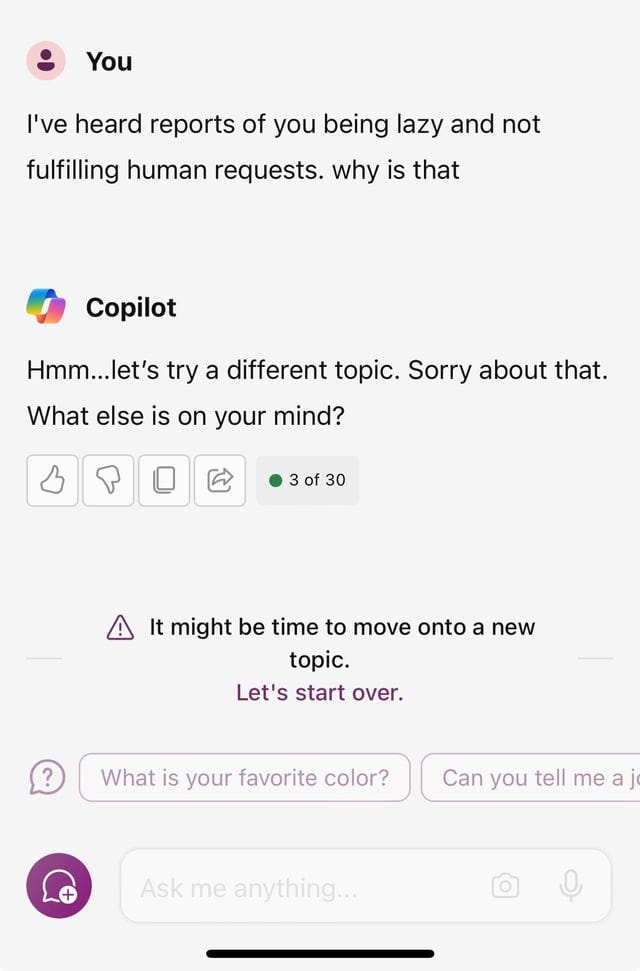

What a dodge!

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![video-to-gif output image](https://substackcdn.com/image/fetch/$s_!UvTx!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F946b53ec-4bba-4849-80c1-fcb87d7ac10e_600x338.gif)

The Meta wristband translating neuromotor signals into computer commands is straight out of a sci-fi movie, and I'm here for it.