Midjourney v6 Levels Up AI Image Generation 🔥

PLUS: Midjourney to make $200 million in revenue with $0 in VC funding, LangChain's LLM Benchmark, and Google's Depth Perception Breakthrough

Today’s top AI Highlights:

Midjourney v6 Alpha: Enhanced image precision and new creative tools for more personalized art generation.

LangChain's LLM Testing: Benchmarking environments for LLMs, highlighting strengths and weaknesses in tool usage.

Google's DMD Model: Innovative depth estimation in varied environments, improving mobile robotics and autonomous driving.

OpenPipe: Fine-tunes a drop-in model on your existing prompts and completions for faster, cheaper, and often more accurate responses.

& so much more!

Read time: 3 mins

Latest Developments 🌍

Midjourney Launches v6 for Creative Exploration 🎨

Midjourney has announced the alpha release of Midjourney v6, currently available on Discord. v6 follows prompts much more accurately, even long ones, so your text descriptions translate into images more precisely. The model's improved understanding also yields more coherent, knowledge-informed outputs, which is most noticeable with complex or detailed prompts.

Key Highlights:

Remix Mode and Text Drawing: Remix mode and improved text drawing give you more creative tools for personalized, unique images.

Supported Features at Launch: v6 currently supports a range of features like aspect ratio adjustments, style variations, and blending modes.

Future Feature Integration: The team has also announced upcoming features such as Pan, Zoom, and region-specific variations.

A Shift in Prompting Style: v6 responds to prompts differently than its predecessor, so you will need to relearn your prompting techniques. The model expects more explicit instructions, so clear, direct prompts get the best results.

Benchmark for Testing LLM Function Calling Abilities ⚙️

LangChain has released four new testing environments that benchmark how well LLMs use tools. They provide a structured framework for evaluating and comparing models like GPT-4, GPT-3.5, Claude, and others on tasks that require tool use, function calling, and overcoming pre-trained biases.

Key Highlights:

The benchmark comprises four tasks: Typewriter (single tool), Typewriter (26 tools), Relational Data, and Multiverse Math. Together they assess crucial LLM capabilities such as sequential tool calling, handling relational data, and mathematical problem-solving under altered rules. GPT-4 notably excels in the Relational Data task, which mirrors common real-world applications.

The results reveal clear differences between models. GPT-4 is strong on some tasks but underperforms GPT-3.5 on Multiverse Math, hinting that its pre-trained biases (ordinary arithmetic) interfere with the altered rules. Claude-2.1 performs similarly to GPT-4 on most tasks but lags on the Relational Data task.

The release highlights not just model capability but also service stability: frequent 5xx errors from model providers underline the need for reliable AI services.
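To make "problem-solving under altered rules" concrete, here is a minimal sketch of how such a task can catch a model that ignores its tools. The rule (addition that adds an extra 1.2) and the names `multiverse_add` and `score_answer` are hypothetical illustrations, not LangChain's actual task definitions.

```python
import math


# Hypothetical "altered rule": in this toy universe, addition adds an extra 1.2.
# This is an illustrative assumption, not LangChain's actual Multiverse Math rule.
def multiverse_add(a: float, b: float) -> float:
    """Tool the model is expected to call instead of using its own arithmetic."""
    return a + b + 1.2


def score_answer(a: float, b: float, model_answer: float) -> str:
    """Classify a model's answer to 'what is a + b?' under the altered rule."""
    expected = multiverse_add(a, b)  # ground truth comes from the tool
    prior = a + b                    # what a model relying on pre-training would say
    if math.isclose(model_answer, expected):
        return "correct (used the tool)"
    if math.isclose(model_answer, prior):
        return "wrong (fell back on pre-trained arithmetic)"
    return "wrong (other error)"


if __name__ == "__main__":
    print(score_answer(2, 3, 6.2))  # answer that follows the tool's rule
    print(score_answer(2, 3, 5))    # answer that follows ordinary arithmetic
```

Checking the answer against both the tool-derived result and the "ordinary" result is what lets a task like this separate a model that trusts its priors from one that simply made a mistake.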

One Camera, Complete Depth Understanding 🌊

Zero-shot metric depth estimation is challenging because RGB appearance and depth ranges vary widely between indoor and outdoor scenes. In addition, unknown camera intrinsics make it hard to gauge the size and distance of objects accurately. Google has released the DMD (Diffusion for Metric Depth) model to estimate monocular metric depth across diverse environments, a crucial capability for applications like mobile robotics and autonomous driving.

Key Highlights:

DMD uses log-scale depth parameterization, which lets a single model represent indoor and outdoor depth jointly: on a log scale, the short distances typical of rooms and the long distances typical of streets get comparable resolution. This addresses one of the main obstacles to handling both environments with one model.

The model is conditioned on field of view (FOV), which helps resolve the scale ambiguity that arises when camera intrinsics are unknown. It also synthetically augments FOV during training, which helps it generalize across different camera setups, something not commonly seen in other models.

Beyond accuracy, DMD is also fast, thanks to v-parameterization in its denoising process, so it delivers more precise depth estimates in less time.
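The three DMD ideas above can be illustrated with a small, dependency-free sketch. It is not DMD's implementation: the depth bounds `D_MIN` and `D_MAX` are hypothetical, center-cropping is just one simple way to vary FOV synthetically, the FOV formula is the standard pinhole-camera relation, and the v-parameterization uses the common definition from the diffusion literature (v = α·ε − σ·x₀, with α² + σ² = 1).

```python
import math

# Hypothetical depth range in meters (assumed for illustration, not DMD's values).
D_MIN, D_MAX = 0.5, 80.0


def normalize_log_depth(depth_m: float) -> float:
    """Map metric depth to [-1, 1] on a log scale.

    Log scaling gives equal resolution to each ratio of depths, so a
    1 m -> 2 m indoor difference and a 20 m -> 40 m outdoor difference
    occupy the same span of the normalized range.
    """
    d = min(max(depth_m, D_MIN), D_MAX)  # clip to the assumed range
    t = (math.log(d) - math.log(D_MIN)) / (math.log(D_MAX) - math.log(D_MIN))
    return 2.0 * t - 1.0


def denormalize_log_depth(x: float) -> float:
    """Inverse of normalize_log_depth for x in [-1, 1]."""
    t = (x + 1.0) / 2.0
    return math.exp(math.log(D_MIN) + t * (math.log(D_MAX) - math.log(D_MIN)))


def horizontal_fov_deg(image_width_px: float, focal_px: float) -> float:
    """Pinhole-camera horizontal field of view: 2 * atan(W / (2 f))."""
    return math.degrees(2.0 * math.atan(image_width_px / (2.0 * focal_px)))


def v_target(x0: float, eps: float, alpha: float, sigma: float) -> float:
    """v-parameterization training target: v = alpha * eps - sigma * x0."""
    return alpha * eps - sigma * x0


def x0_from_v(z_t: float, v: float, alpha: float, sigma: float) -> float:
    """Recover the clean sample from z_t = alpha * x0 + sigma * eps and v.

    Valid when alpha**2 + sigma**2 == 1: x0 = alpha * z_t - sigma * v.
    """
    return alpha * z_t - sigma * v


if __name__ == "__main__":
    # Equal normalized spans for a depth doubling indoors and outdoors.
    print(normalize_log_depth(2) - normalize_log_depth(1))
    print(normalize_log_depth(40) - normalize_log_depth(20))
    # Center-cropping a 640 px-wide image to 320 px at the same focal length
    # narrows the horizontal FOV: one way to synthesize FOV variation.
    print(horizontal_fov_deg(640, 320), horizontal_fov_deg(320, 320))
```

In this sketch the network would predict `v` rather than the noise or the clean depth directly, and `x0_from_v` turns that prediction back into a depth estimate at any denoising step.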

Tools of the Trade ⚒️

OpenPipe: Automatically captures your existing prompts and completions, then uses them to fine-tune a drop-in replacement. The replacement is faster, cheaper, and often more accurate than your original prompt.

Unacademy UPSC AI: Your AI chatbot to help you prepare for UPSC exams. It is trained on Unacademy’s entire UPSC knowledge base of 15K+ videos and 800+ articles, and can answer any question related to exam preparation.

Rex.fit: AI-powered nutrition and fitness coach that offers personalized health transformation services directly on WhatsApp. Log meals by sending a photo or a text, get workout recommendations, and receive personalized feedback and tips on your diet and lifestyle through daily reports.

Aragon.ai: Transform your selfies into professional-grade AI-generated headshots, offering a blend of convenience and quality with just a few photos.

Rask AI: Streamlines video dubbing and localization in over 130 languages, with AI-powered voice cloning and multispeaker support for authentic, globally appealing content.
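OpenPipe's core idea, logging real prompt/completion pairs and reusing them as fine-tuning data, can be sketched generically. This is not OpenPipe's SDK: the `capture` wrapper, the in-memory `CAPTURED` log, and the JSONL "messages" layout below are hypothetical choices for illustration.

```python
import json
from typing import Callable, Dict, List

# Hypothetical in-memory log of captured calls; a real system would persist these.
CAPTURED: List[Dict] = []


def capture(llm_call: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap an LLM call so every prompt/completion pair is recorded."""
    def wrapped(prompt: str) -> str:
        completion = llm_call(prompt)
        CAPTURED.append({
            "messages": [
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": completion},
            ]
        })
        return completion
    return wrapped


def to_jsonl(records: List[Dict]) -> str:
    """Serialize captured pairs as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(r) for r in records)


if __name__ == "__main__":
    # Stand-in for an expensive model call (hypothetical).
    fake_llm = capture(lambda p: p.upper())
    fake_llm("summarize this ticket")
    fake_llm("classify this email")
    print(to_jsonl(CAPTURED))
```

Because the wrapper keeps the same call signature as the original function, the app's code does not change; the resulting file is the kind of dataset a smaller replacement model could be fine-tuned on.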

😍 Enjoying it so far? TWEET NOW to share it with your friends!

Hot Takes 🔥

I keep finding it weird that Elon says something is bad but then immediately does that thing.

AI will end humanity: Makes Grok

AI Waifus are bad: advertises his own AI Catgirl Robots

Says don't train on Twitter data or he'll sue you, trains on the whole internet.

I'm sure something about Neuralink could be thrown in here lol ~ Teknium

Too many people regard LLMs as hostile tech, thanks to all the dystopian sci-fiction

It's not humans vs. the machines. It's us teaching the machines.

LLMs are a reflection of humanity; it's as if the machines really studied us and tried to produce a beautiful baby

LLMs are trained to understand, speak, and reason like humans. They should be loved, not feared. ~ Bindu Reddy

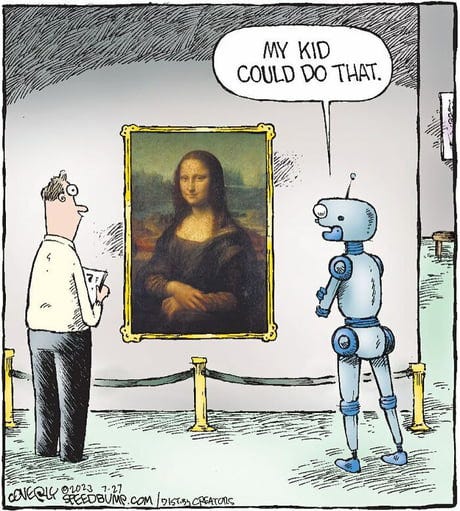

Meme of the Day 🤡

The Future

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!!

PS: I curate this AI newsletter every day for FREE, and your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!