It was yet another thrilling week in AI, with advancements that keep pushing the limits of what the technology can achieve.

Here are 10 AI breakthroughs that you can’t afford to miss 🧵👇

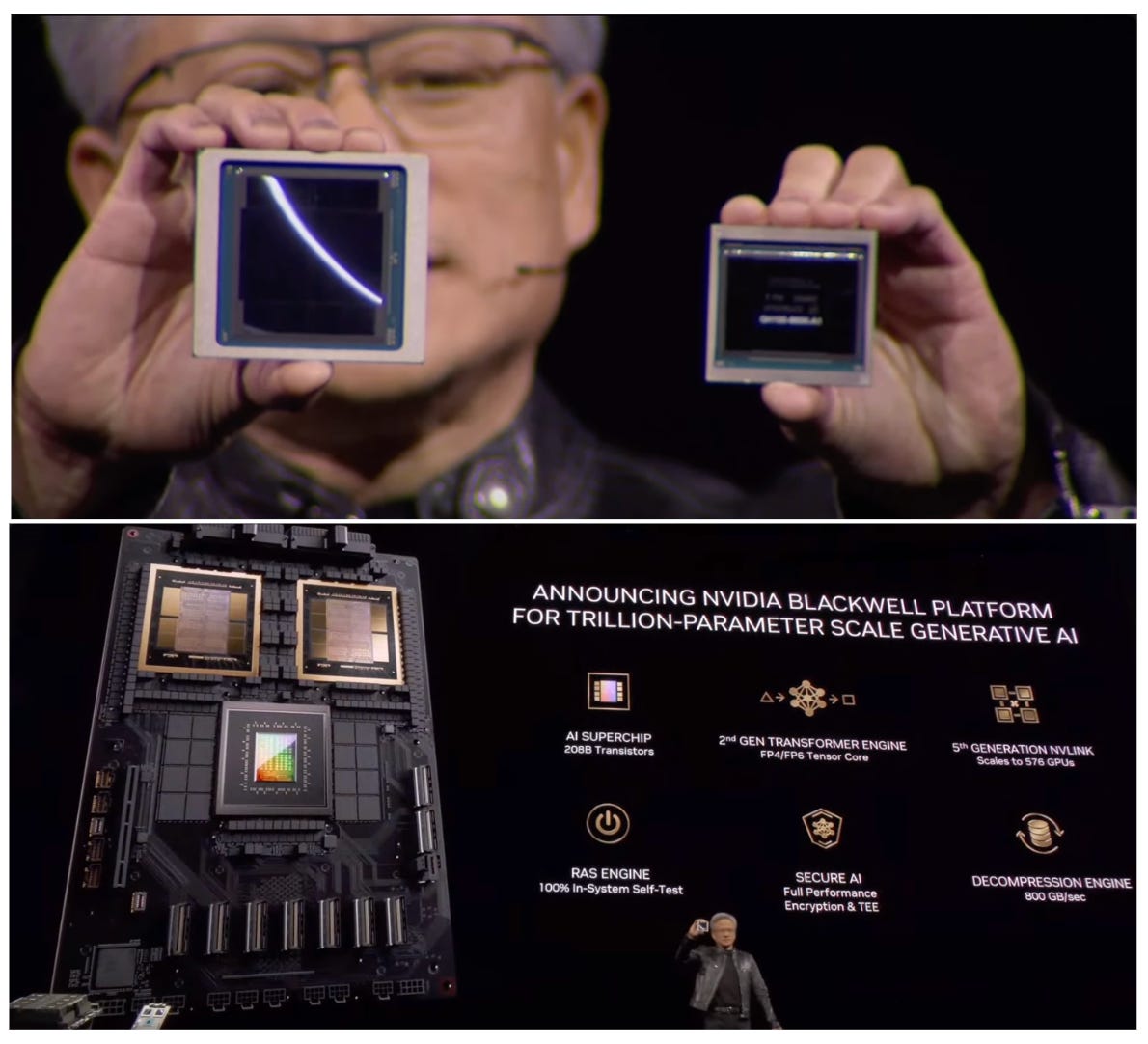

Nvidia Launches New AI Superchip

Nvidia has announced the new Blackwell platform for training and running multi-trillion-parameter AI models. Each Blackwell chiplet is the largest chip physically possible, with 104 billion transistors, built on a custom TSMC 4NP process node. The Nvidia B200 Tensor Core GPU combines two of these chiplets into a single unit, for 208 billion transistors in total. Compared to Hopper, Nvidia says it delivers 5x the AI performance and 4x the on-die memory.

The GB200 Superchip platform combines two Nvidia B200 Tensor Core GPUs and a Grace CPU. According to Nvidia, this Superchip delivers up to a 30x performance increase over the Nvidia H100 Tensor Core GPU for LLM inference workloads and reduces cost and energy consumption by up to 25x.

The next-gen data-center-scale AI supercomputer, the Nvidia DGX SuperPOD powered by Nvidia GB200 Superchips, is designed for processing trillion-parameter models with constant uptime for superscale generative AI training and inference workloads.

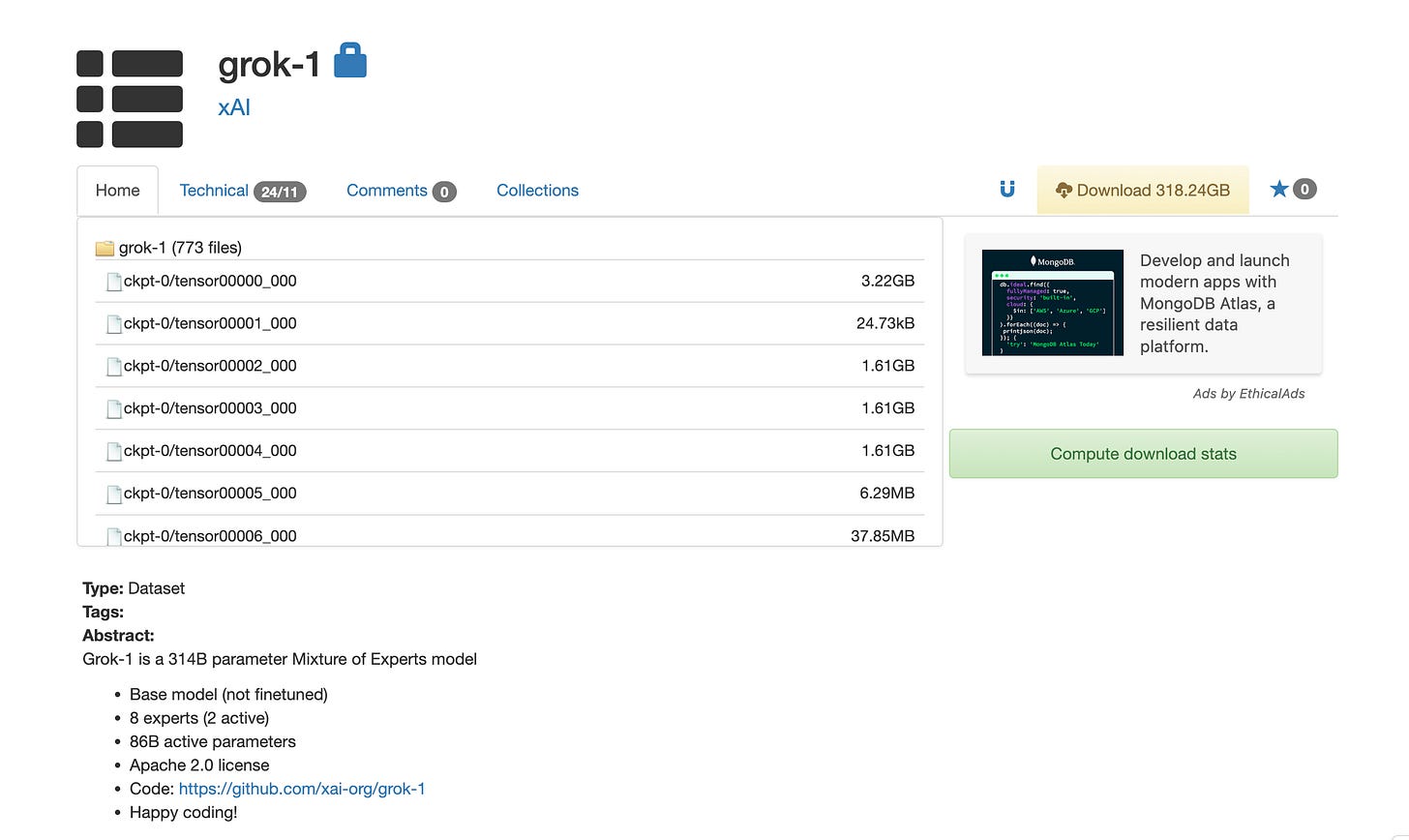

Elon Musk’s xAI Open-Sources Grok

xAI has open-sourced Grok-1 under the Apache 2.0 license. Here are the details:

314B-parameter Mixture-of-Experts (MoE) model

Uses 8 experts with 2 active per token, yielding 86B active parameters

Base model trained on a large amount of text data, not fine-tuned for any particular task

Trained from scratch by xAI using a custom training stack on top of JAX and Rust, with pre-training concluding in October 2023
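To make the "8 experts with 2 active" idea concrete, here is a minimal sketch of top-2 expert routing in plain Python. It is a generic illustration of softmax gating, not xAI's actual router: the toy experts, the renormalization of the two selected weights, and all function names are assumptions made for this example.

```python
import math
import random

NUM_EXPERTS = 8  # expert count from the Grok-1 description
TOP_K = 2        # experts active per token


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=TOP_K):
    """Pick the top_k experts for one token.

    Returns (expert_index, weight) pairs. Weights are renormalized to
    sum to 1 (an assumption; real routers differ in this detail).
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    mass = sum(probs[i] for i in chosen)
    return [(i, probs[i] / mass) for i in chosen]


def moe_layer(token, router_logits, experts):
    """Weighted sum of the outputs of the selected experts only."""
    output = [0.0] * len(token)
    for idx, weight in route_token(router_logits):
        expert_out = experts[idx](token)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output


# Hypothetical toy experts: expert i scales its input by (i + 1).
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(NUM_EXPERTS)]

random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
routing = route_token(logits)
result = moe_layer([1.0, 2.0], logits, experts)
print(routing, result)
```

Only two of the eight experts run for any given token, which is why the active parameter count is far below the full 314B even though every expert's weights must be stored.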

Apple’s New Multimodal LLMs - MM1

Apple has introduced MM1, a family of multimodal AI models that scale up to 30B parameters and include both dense and mixture-of-experts (MoE) variants. Fine-tuning used a broad mix of datasets, from instruction-response pairs generated with GPT-4 to academic and text-only datasets. In various few-shot learning settings, MM1 models outperformed Emu2, Flamingo, and IDEFICS on tasks like captioning, multi-image reasoning, and visual QA.

However, it is also confirmed that Apple will not be using MM1 models to power generative AI apps on its devices. The company is reportedly in talks with Google to use Google’s Gemini models for that purpose.

Inflection AI Co-founders Move to Microsoft AI 🕴️

Microsoft has hired two of Inflection AI’s co-founders, Mustafa Suleyman and Karén Simonyan, to lead Microsoft AI, which covers products like Copilot, Bing, and Edge. The third co-founder, Reid Hoffman, will remain on Inflection AI’s board.

Inflection AI will pivot to its AI Studio, which develops custom generative AI models tailored for commercial customers. The Inflection 2.5 LLM will also soon be available on Microsoft Azure, making the company’s technology more widely accessible to creators, developers, and businesses worldwide. Sean White joins as the new CEO to steer Inflection AI into this new chapter.

GPT-5 will be a big leap from GPT-4

Sam Altman joined Lex Fridman on his podcast, where they covered a lot of topics, including what went on inside OpenAI when Altman was ousted, the new board, Musk’s lawsuit, and OpenAI’s future releases. Altman said that:

GPT-4 “sucks” relative to where we need to get to. The delta between GPT-5 and 4 will be the same as between GPT-4 and 3.

They are not ready to talk about Project Q*.

A new model will be released this year, but it won’t be GPT-5. Before releasing GPT-5, OpenAI wants to release other products.

He expects that by the end of this decade, or maybe sooner, “we will have quite capable systems that we look at and say, wow, that's really remarkable.”

Fireworks’ Fine-tuning Service at No Extra Cost

Fireworks has just launched a new fine-tuning service for 10 AI models. It hosts fine-tuned models on the same serverless setup as their base models, so the fine-tuned models stay fast. Fireworks charges no additional fee for using fine-tuned models: you pay exactly the same amount as for the base model. The platform’s design allows for nearly instant deployment, with tuned models ready for use in about a minute. You can deploy and compare up to 100 fine-tuned models simultaneously and swap them into live services without incurring extra deployment costs.

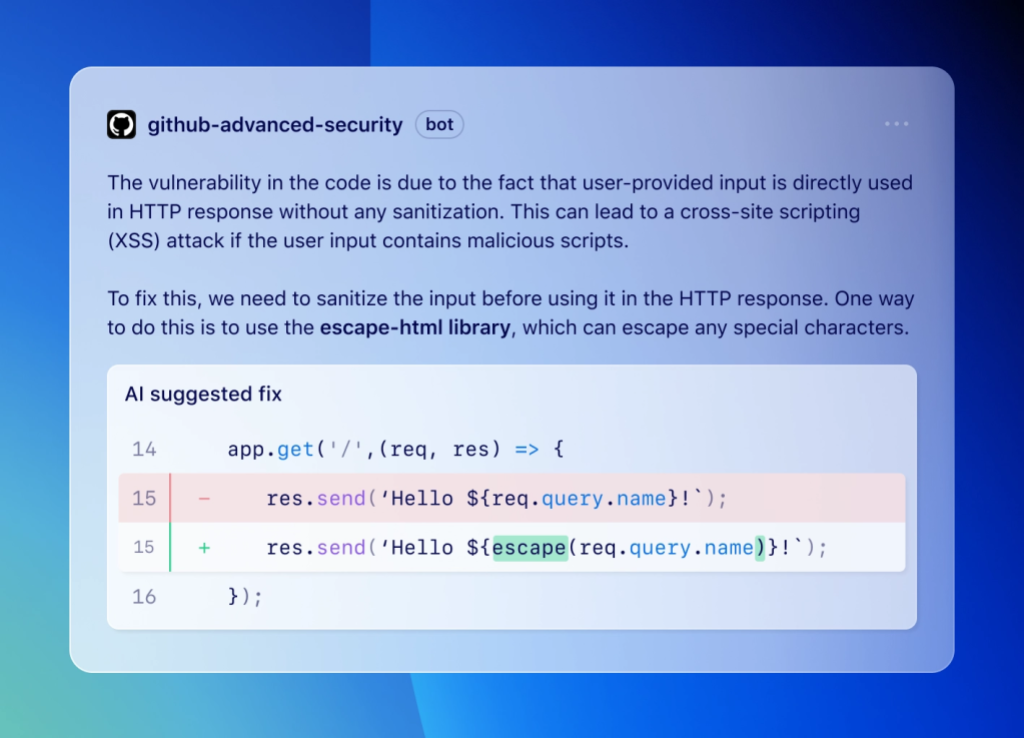

GitHub’s AI Tool for Code Scanning and Autofixing

GitHub is rolling out its new code scanning autofix feature in public beta for all GitHub Advanced Security customers. Powered by GitHub Copilot and CodeQL, the feature covers more than 90% of alert types in JavaScript, TypeScript, Java, and Python. According to GitHub, it can remediate more than two-thirds of the vulnerabilities found with little or no editing required from developers.

For each vulnerability found, code scanning autofix not only suggests code for remediation but also provides an explanation in natural language. This helps developers understand the context and rationale behind each suggested fix, making it easier to decide whether to accept, edit, or dismiss the suggestion.

Portable AI Device to Control Computer

Open Interpreter has released 01, a portable AI device that’s as easy to chat with as a person. Billed as the first open-source language model computer, it can operate your computer through voice commands. The device can perform tasks like managing your calendar, searching the web, sending messages, and managing emails and desktop files.

What makes 01 stand out from the AI Pin and Rabbit R1 is the ability to learn new tasks directly from you, such as operating a new desktop application or performing customized workflows that it has never done or seen before, without preset configurations.

Elon Musk’s Neuralink Plans Device for Blind People to See

Elon Musk’s Neuralink went live on X to introduce its first human subject and the progress he has made since the brain-computer interface was implanted in his brain. He was able to control the cursor with his mind alone, and said that he could now play chess and Civilization on his laptop using the Neuralink device.

Elon Musk has also announced that Neuralink’s new device will be able to cure blindness. The technology would see 64 tiny wires implanted by a Neuralink surgical robot into the visual cortex, the part of the brain that allows you to see. The Neuralink device would then be able to bypass the eye and create a visual image in the brain. Musk said that the resolution will be low at first, like early Nintendo graphics, but may ultimately exceed normal human vision.

Nvidia’s New Foundation Model for Robot Training

Project GR00T is a multimodal foundation model for humanoid robots, designed to help them learn and perform various tasks by understanding natural language and emulating human movements. It teaches robots to navigate, adapt, and interact with the real world efficiently.

Alongside GR00T, Nvidia announced Jetson Thor, a purpose-built computing platform for humanoid robots, featuring a Blackwell-powered architecture optimized for performance, power efficiency, and compactness.

That’s all for today 👋

Stay tuned for another week of innovation and discovery as AI continues to evolve at a staggering pace. Don’t miss out on the developments – join us next week for more insights into the AI revolution!

Shubham Saboo - Twitter | LinkedIn ⎸ Unwind AI - Twitter | LinkedIn