NVIDIA Launches AI Superchip 🚀

PLUS: Gemini may power iPhones, Stable 3D video generation is here

Today’s top AI Highlights:

Nvidia’s next-gen AI infrastructure (Blackwell chips, superchip, and supercomputer)

Nvidia’s Project GROOT trains a multimodal foundation model aimed at bringing general-purpose AI to humanoid robots

OpenUSD digital twins meet Apple Vision Pro via Nvidia Omniverse

Stability AI launches Stable Video 3D (SV3D) to generate 3D videos

& so much more!

Read time: 3 mins

Latest Developments 🌍

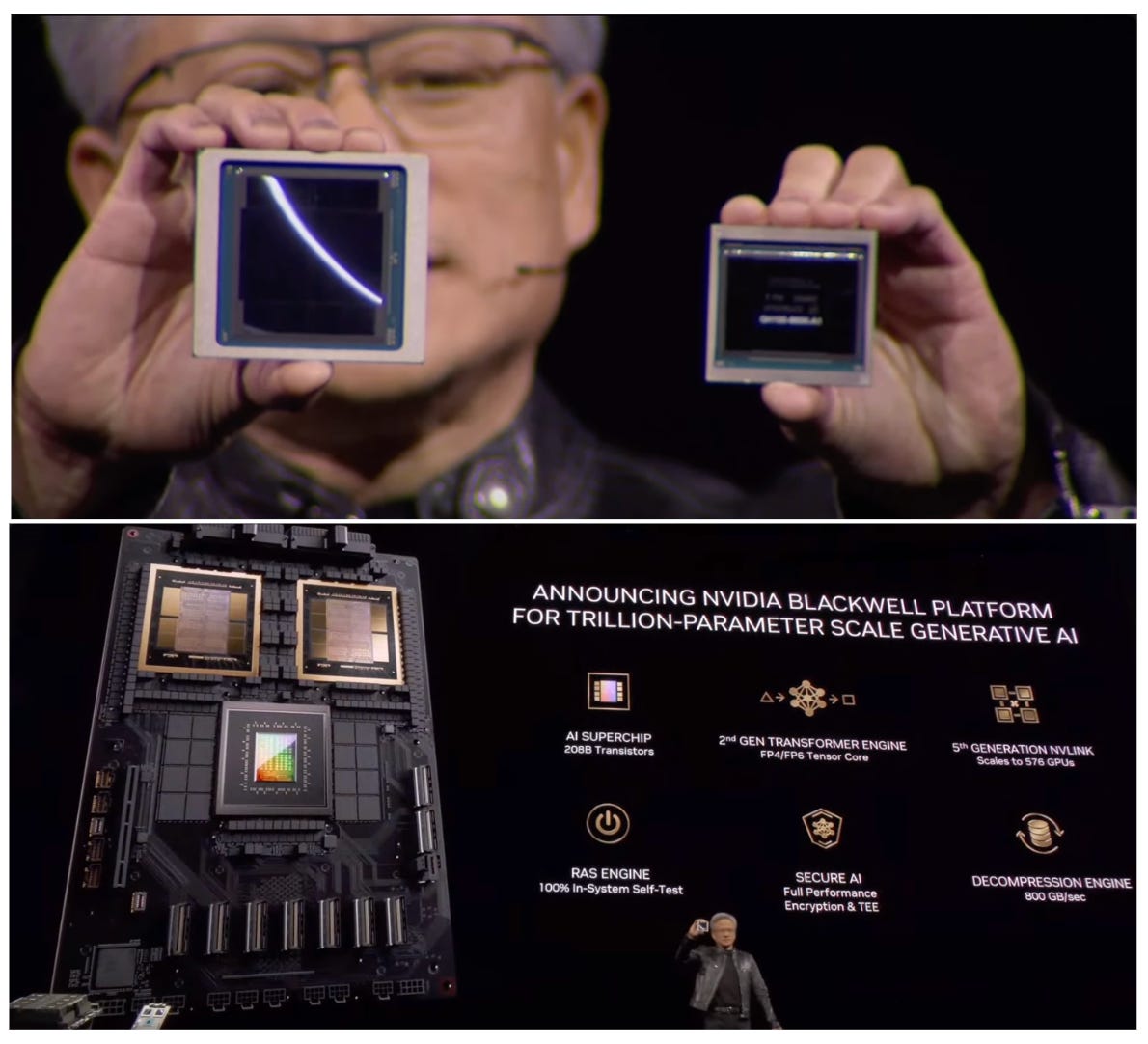

NVIDIA’s New GPU for Trillion-parameter Generative AI ☄️

Today, AI had its Taylor Swift moment at Nvidia GTC 2024, with thousands of people attending the event. Nvidia CEO Jensen Huang took the stage and introduced a suite of generative AI technologies spanning both hardware and models.

While companies are still trying to get their hands on H100s, Nvidia unveiled the “world’s most powerful AI superchip,” Blackwell, named in honor of mathematician David Blackwell. Nvidia also brought its technology to nearly every area, from robotics and medicine to spatial computing, industrial automation, and enterprise-level AI. And this was just the first day!

Each Blackwell die is the largest chip physically possible, with 104 billion transistors, manufactured using TSMC’s 4NP process.

The Blackwell GPU combines two of these dies into a single unit, linked by a 10TB/s chip-to-chip interface for rapid data transfer, for a total of 208 billion transistors. Compared to its predecessor, Hopper, it has an additional 128 billion transistors, delivering 5x the AI performance and 4x the on-die memory.

Blackwell’s B200 GPU offers up to 20 petaflops of FP4 computing power, whereas Hopper delivers 4 petaflops of FP8 computing.
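Note that the two peak figures are quoted at different precisions (FP4 vs. FP8), so the 5x ratio is a headline number rather than a like-for-like comparison. Part of the appeal of FP4 is memory: halving the bits per weight halves the storage needed for a model’s weights. A quick sketch of both calculations, assuming the memory estimate counts raw weights only (no activations, KV cache, or optimizer state):

```python
# Back-of-the-envelope arithmetic for the B200 vs. Hopper figures quoted above.
# Assumption: "memory" below means raw weight storage only (no activations,
# KV cache, or optimizer state), which is a simplification.

def peak_ratio(new_pflops: float, old_pflops: float) -> float:
    """Ratio of two peak-throughput figures (they may be at different precisions)."""
    return new_pflops / old_pflops


def weight_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store num_params weights at the given bit width."""
    return num_params * bits_per_param // 8


B200_FP4_PFLOPS = 20        # B200 peak, FP4 (figure from the article)
HOPPER_FP8_PFLOPS = 4       # Hopper peak, FP8 (figure from the article)
PARAMS = 1_800_000_000_000  # 1.8-trillion-parameter model size mentioned in the article

if __name__ == "__main__":
    print(f"Headline ratio: {peak_ratio(B200_FP4_PFLOPS, HOPPER_FP8_PFLOPS):.1f}x")
    print(f"FP8 weights: {weight_bytes(PARAMS, 8) / 1e12:.1f} TB")
    print(f"FP4 weights: {weight_bytes(PARAMS, 4) / 1e12:.1f} TB")
```

Running it prints a 5.0x ratio, and 1.8 TB of weights at FP8 versus 0.9 TB at FP4 for a 1.8-trillion-parameter model.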

Training a 1.8 trillion parameter model with Blackwell GPUs requires only 2,000 units and consumes just 4 megawatts of power, as opposed to the 8,000 Hopper GPUs and 15 megawatts needed previously. On a GPT-3 benchmark, the GB200 delivers 7x the performance and 4x the training speed compared to the H100.
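The training comparison above is easy to sanity-check. This sketch assumes the megawatt figures are totals for each deployment, so the per-GPU value simply divides total power by GPU count and folds in whatever else those totals include:

```python
# Compare the two training setups quoted in the article for a 1.8T-parameter model.
# Assumption: the megawatt figures are totals for the whole deployment, so the
# per-GPU number folds in everything else those totals include.

def fleet_comparison(old_gpus: int, old_mw: float, new_gpus: int, new_mw: float) -> dict:
    return {
        "gpu_reduction": old_gpus / new_gpus,        # how many times fewer GPUs
        "power_reduction": old_mw / new_mw,          # how many times less total power
        "old_kw_per_gpu": old_mw * 1000 / old_gpus,  # average kW per Hopper GPU
        "new_kw_per_gpu": new_mw * 1000 / new_gpus,  # average kW per Blackwell GPU
    }


if __name__ == "__main__":
    result = fleet_comparison(old_gpus=8_000, old_mw=15, new_gpus=2_000, new_mw=4)
    for name, value in result.items():
        print(f"{name}: {value:g}")
```

So 4x fewer GPUs works out to roughly 3.75x less total power; the average draw per GPU actually rises slightly (2 kW vs. 1.875 kW), which fits each Blackwell GPU doing much more of the work.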

Blackwell Superchip: The GB200 Superchip combines two B200 GPUs with a single Grace CPU. GB200 Superchips deliver up to a 30x performance increase over the Nvidia H100 Tensor Core GPU for LLM inference workloads and reduce cost and energy consumption by up to 25x.

The Nvidia DGX B200 system is a complete AI supercomputing platform, integrating eight Nvidia Blackwell GPUs and two 5th Gen Intel Xeon processors to deliver exceptional performance for AI model training, fine-tuning, and inference.

DGX SuperPOD is Nvidia’s next-generation data-center-scale AI supercomputer, powered by Nvidia GB200 Grace Blackwell Superchips. It is designed to process trillion-parameter models with continuous uptime for superscale generative AI training and inference workloads.

Each DGX GB200 system within the SuperPOD comprises 36 Nvidia GB200 Superchips, for a total of 36 Nvidia Grace CPUs and 72 Nvidia Blackwell GPUs, connected via fifth-generation Nvidia NVLink. The SuperPOD can scale to eight DGX GB200 systems, connecting 576 GPUs.
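The SuperPOD figures multiply out directly from the GB200 layout (one Grace CPU and two B200 GPUs per Superchip). The helper names in this sketch are hypothetical and exist only to show the arithmetic:

```python
# Hypothetical helpers that multiply out the DGX GB200 / SuperPOD counts.
GRACE_CPUS_PER_SUPERCHIP = 1  # one Grace CPU per GB200 Superchip
B200_GPUS_PER_SUPERCHIP = 2   # two B200 GPUs per GB200 Superchip


def dgx_gb200_counts(superchips: int = 36) -> dict:
    """CPU and GPU counts for one DGX GB200 system."""
    return {
        "cpus": superchips * GRACE_CPUS_PER_SUPERCHIP,
        "gpus": superchips * B200_GPUS_PER_SUPERCHIP,
    }


def superpod_gpus(num_systems: int = 8, superchips_per_system: int = 36) -> int:
    """Total Blackwell GPUs across a SuperPOD of num_systems DGX GB200 systems."""
    return num_systems * dgx_gb200_counts(superchips_per_system)["gpus"]


if __name__ == "__main__":
    print(dgx_gb200_counts())  # per-system CPU and GPU counts
    print(superpod_gpus())     # GPUs across eight systems
```

36 Superchips give 36 CPUs and 72 GPUs per system, and eight systems give 8 × 72 = 576 GPUs.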

Humanoid Robots: Project GROOT is a multimodal foundation model for humanoid robots, designed to help them learn and perform a variety of tasks by understanding natural language and emulating human movements. It teaches robots to navigate, adapt, and interact with the real world efficiently.

Along with GROOT is Jetson Thor, a purpose-built computing platform for humanoid robots, featuring a Blackwell-powered architecture optimized for performance, power efficiency, and compactness.

Earth Digital Twin: The Earth-2 climate digital twin cloud platform redefines weather and climate simulation to help combat economic losses attributed to climate change. Built on Nvidia Omniverse and the OpenUSD 3D framework, it uses CorrDiff, Nvidia’s generative AI model based on state-of-the-art diffusion modeling, which generates images at 12.5x higher resolution than current numerical models, 1,000x faster and with 3,000x better energy efficiency.
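The article doesn’t say whether “12.5x higher resolution” is per axis or in total. As an illustration only, assuming it is a linear (per-axis) factor and picking an example starting grid spacing of 25 km (our choice, not a figure from the article), the arithmetic looks like this:

```python
# Illustrative arithmetic for a "12.5x higher resolution" claim.
# Assumptions (not from the article): the factor is linear (per axis), and the
# starting grid spacing of 25 km is just an example value.

def refined_spacing_km(base_spacing_km: float, resolution_factor: float) -> float:
    """Grid spacing after increasing linear resolution by resolution_factor."""
    return base_spacing_km / resolution_factor


def grid_points_factor(resolution_factor: float) -> float:
    """Growth in grid points per unit area for a 2D grid (factor applies on both axes)."""
    return resolution_factor ** 2


if __name__ == "__main__":
    print(refined_spacing_km(25.0, 12.5))  # km between grid points after refinement
    print(grid_points_factor(12.5))        # times more points covering the same area
```

Under those assumptions, a 25 km grid becomes a 2 km grid, with about 156x more grid points over the same area.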

NVIDIA introduced enterprise-grade generative AI inference microservices (Nvidia NIM) that let businesses easily develop and deploy custom AI applications anywhere, while retaining ownership and control of their intellectual property.

Enterprises can use the microservices for a wide range of tasks, including data processing, LLM customization, inference, RAG, and more.

These microservices are built on top of the Nvidia CUDA platform. They offer popular AI models like SDXL, Llama 2, and Gemma, and let developers call Nvidia APIs from their existing SDKs with as few as three lines of code.

Nvidia combines digital twins with real-time AI, enabling developers to create, test, and refine large-scale AI solutions within simulated environments before deployment in industrial settings.

Utilizing Nvidia Omniverse, Metropolis, Isaac, and cuOpt, developers can establish AI gyms where AI agents train to navigate complex industrial scenarios, optimizing processes and minimizing downtime.

Nvidia’s Omniverse, a platform for collaborative 3D content creation and simulation, now connects with Apple Vision Pro, enabling developers to effortlessly stream detailed digital replicas, created with OpenUSD, directly to Vision Pro devices.

By leveraging Apple Vision Pro’s high-resolution displays and Nvidia’s RTX cloud rendering, users can enjoy rich, immersive visuals without sacrificing quality or detail.

MM1 Models May Not Power GenAI on iOS 📱

Apple is reportedly considering a partnership with Google to use Google’s Gemini models to power upcoming generative AI features on Apple’s devices. Just a few days ago, Apple unveiled its own multimodal AI model, MM1, and we were hoping to see MM1-powered AI features in the new iOS.

Apple has reportedly been under pressure to catch up with major players such as OpenAI, Microsoft, Anthropic, and Google. Apple CEO Tim Cook has announced plans to introduce GenAI features “later this year,” and the company’s job listings over the last year show it has been working on the technology internally. Still, the company appears to be struggling to develop it in-house. Another concern with this partnership is Gemini’s history of controversy: given its reputation for hallucinating and “being too woke,” it’s hard to say whether the new features will align with Apple’s user experience standards.

Stable Video Diffusion for 3D View Generation 🧱

Stability AI has released Stable Video 3D (SV3D), a generative model based on Stable Video Diffusion, designed to improve the quality and consistency of novel view synthesis and 3D generation. SV3D takes a single image of an object as input and outputs novel multi-view images of that object, which can then be used to generate 3D meshes.

Key Highlights:

SV3D comes in two variants: SV3D_u generates orbital videos from a single image without camera conditioning, while SV3D_p extends this to accept both single images and orbital views, creating 3D videos along specified camera paths.
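To make the difference between the two variants concrete, here is a sketch of two orbital camera paths expressed as (azimuth, elevation) lists. This is not Stability AI’s API: `CameraPose`, `static_orbit`, and `dynamic_orbit` are hypothetical, and the 21-frame default and elevation values are assumptions chosen for illustration. SV3D_u produces an orbit without being given a path, presumably closest to the fixed-elevation case, while an explicit path like the dynamic one is the kind of input SV3D_p can be conditioned on:

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraPose:
    azimuth_deg: float    # rotation around the object, in degrees
    elevation_deg: float  # camera height above the object's horizon, in degrees


def static_orbit(num_frames: int = 21, elevation_deg: float = 10.0) -> list[CameraPose]:
    """Evenly spaced azimuths at a fixed elevation: one full turn around the object."""
    step = 360.0 / num_frames
    return [CameraPose(i * step, elevation_deg) for i in range(num_frames)]


def dynamic_orbit(num_frames: int = 21, base_elevation_deg: float = 10.0,
                  amplitude_deg: float = 20.0) -> list[CameraPose]:
    """Same azimuth sweep, but the elevation rises and falls once along a sine wave."""
    step = 360.0 / num_frames
    return [
        CameraPose(
            azimuth_deg=i * step,
            elevation_deg=base_elevation_deg
            + amplitude_deg * math.sin(2 * math.pi * i / num_frames),
        )
        for i in range(num_frames)
    ]


if __name__ == "__main__":
    for pose in dynamic_orbit()[:3]:
        print(pose)
```

Both paths share the same azimuth sweep; only the elevation schedule differs, which is what makes a camera-conditioned variant more flexible than a fixed orbit.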

Building on video diffusion models, SV3D significantly improves multi-view video generation, offering better generalization and view consistency than its predecessors and other models such as Stable Zero123.

SV3D’s 3D optimization uses disentangled illumination optimization and a novel masked score distillation sampling (SDS) loss function to improve the realism and lighting of the generated 3D meshes, producing good results even in regions that were not visible in the input image.

Tools of the Trade ⚒️

Pipio VideoDubbing: AI-powered platform that translates and replaces audio in videos to new languages while automatically syncing the speaker’s lip movements to the new dialogue, maintaining the original voice tone and inflections.

Reprompt AI: Helps get generative AI applications to production quality 10x faster, with features for debugging, tracking hallucinations, and writing custom prompt overrides without needing to code.

Wondera: Create your own AI singing voice to sing like a star in any language or genre, and make cover songs or lip-sync videos in your AI voice. It offers an interactive, fun karaoke experience with features like AI-powered scoring and a built-in music library.

Story.com: Create AI-generated videos and stories. It provides a robust editing toolkit for real-time creativity, letting you produce story-centric mini-stories and videos up to 60 seconds long.

😍 Enjoying it so far? TWEET NOW to share with your friends!

Hot Takes 🔥

I think [GPT-4] kinda sucks... I expect that the delta between 5 and 4 will be the same as between 4 and 3 and I think it is our job to live a few years in the future and remember that the tools we have now are gonna kinda suck looking backwards at them ~ Sam Altman (Source)

Prediction:

OpenAI is not working on building AGI. That job is done

They are working on how to deploy it ~ Sulla

My predictions for compute in the next few years:

> 200 chip companies by the end of the year

> 5 will actually make a chip in 3 years

> GPU prices will drop once AMD becomes usable

> Models will become smaller and more ubiquitous

> As with everything, compute will become a commodity where margins tend to zero

> Nvidia will go up to $4-$5T. They can capitalize on this big risk and become a super big tech

> Google, MSFT, and Amazon will also all have their own chips ~ Bindu Reddy

Meme of the Day 🤡

Oh boy, Meta AI cannot do dice

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE; your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![GIF from a Twitter video](https://substackcdn.com/image/fetch/$s_!-0kh!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Feab0afa1-ec24-4353-ad0b-c0484e5f8aff_600x338.gif)