First ever trillion parameter LLM 🤯

PLUS: Elon wants to rename OpenAI as CloseAI, Finer control over Gemini's output

Today’s top AI Highlights:

OpenAI takes a dig at Elon Musk

1.3 trillion parameters, one model, one platform

Optimizing the Optimizers: new fine-tuning method by Abacus AI

Tune a specific part of Gemini’s output

Teach AI bots once and automate the task forever

& so much more!

Read time: 3 mins

Latest Developments 🌍

What OpenAI Has to Say 👊

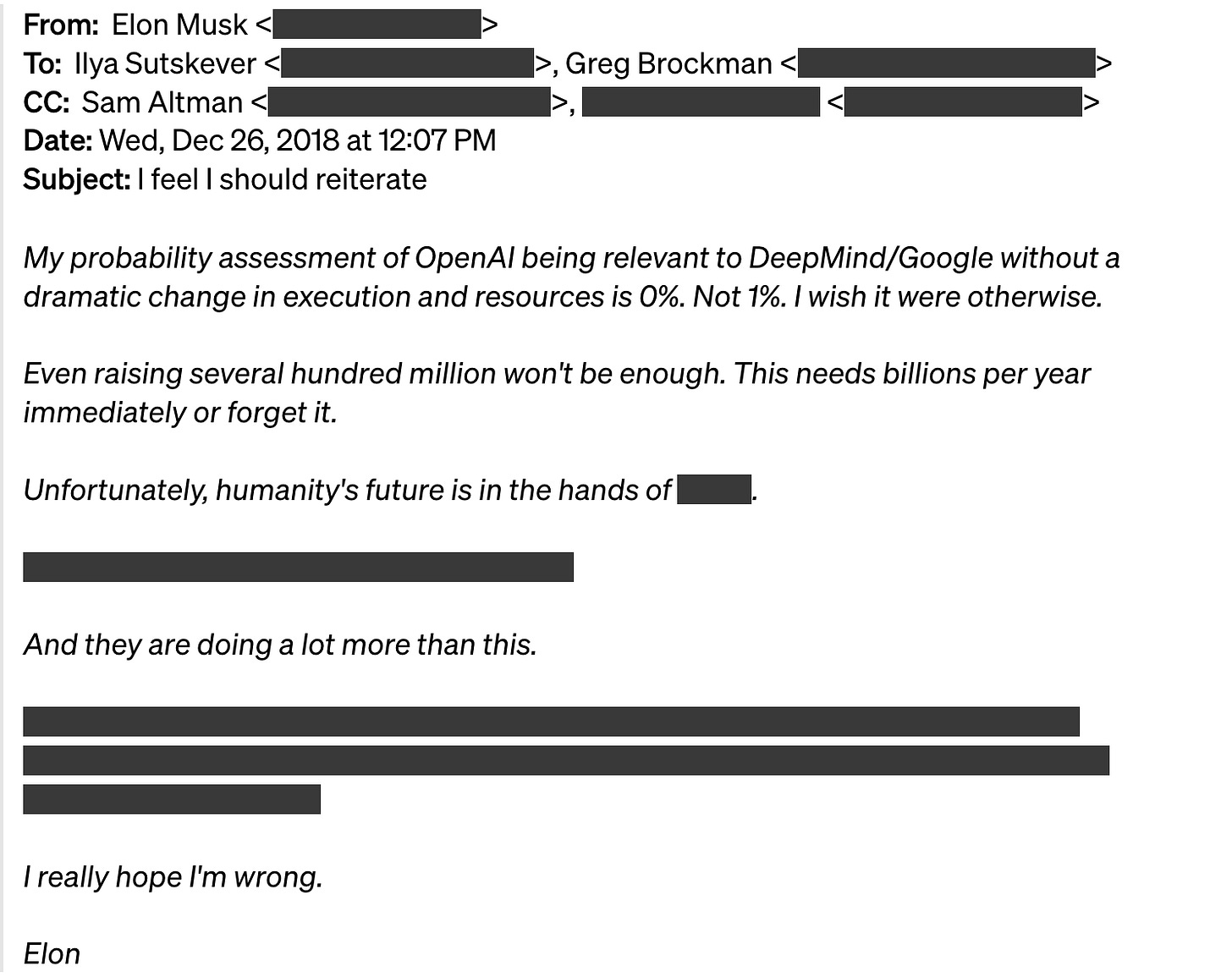

As you all know, Elon Musk has sued OpenAI, an organization he once supported both ideologically and financially, accusing it and its co-founders, Sam Altman and Greg Brockman, of deviating from their original nonprofit mission. OpenAI has now responded publicly, defending its direction and accomplishments. Here’s a timeline of what went down, straight from the horse’s mouth: OpenAI itself.

Late 2015: The seeds of discord were sown early when, despite Elon Musk’s ambitious suggestion of "$1B funding commitment", where Musk would fill any gap, OpenAI managed to raise less than $45M from Musk himself, alongside more than $90M from other donors. During this time, the consensus was to ship products to benefit from the fruits of AI, “but it's totally OK to not share the science...”

Early 2017 to Late 2017: The realization that building AGI would require "billions of dollars per year" set the stage for a significant drift. Elon and OpenAI founders agreed to create a for-profit entity to further the mission. But Musk wanted majority equity, initial board control, and to be CEO, and the OpenAI team couldn’t agree to give him absolute control over OpenAI.

During these discussions, Musk withheld funding, and Reid Hoffman had to bridge the gap to cover operational expenses.

Early 2018: Musk suggested that OpenAI should “attach to Tesla as its cash cow”, commenting that it was “exactly right… Tesla is the only path that could even hope to hold a candle to Google.” The fracture got deeper and Musk left OpenAI to build an AGI competitor within Tesla, saying that OpenAI’s probability of success was 0.

As OpenAI started releasing its products, GPT-2 in 2019, GPT-3 in 2020, and the most widely used ChatGPT in 2022, along with other models like Whisper and DALL·E with multiple iterations, we have all benefitted from the automation and the sheer ease of working. Beyond the individual and corporate level, many countries have partnered with OpenAI to leverage its tech for optimizing fields like farming and healthcare.

Elon Musk officially formed xAI and released its AI chatbot Grok in 2023. The witty, humorous and fast chatbot might be competing with Google’s chatbot, but the growth that ChatGPT witnessed after its release was unprecedented.

50+ Experts Combined to Make a 1 Trillion Parameter Model 👯👬

SambaNova has open-sourced Samba-1, a model leveraging over 1 trillion parameters to deliver a new level of performance. Samba-1 integrates 54 expert models, merging the collective power of LLMs with the nuanced expertise and smaller runtime footprint of specialized models. Each expert supports role-based access control that mirrors organizational design. Queries are routed to the expert best suited to them: for instance, a query asking for an SQL conversion would be directed to a dedicated text-to-SQL expert model. Should there be a subsequent request to turn that SQL into code, Samba-1 would route this to a specialized text-to-code model, all through a single chat interface.

Key Highlights:

Samba-1's architecture combines many smaller expert models into a single large model, achieving the performance expected from multi-trillion parameter models behind a single API endpoint.

The model prioritizes secure training on private data. Enterprises can now train models on their private data, behind their firewall, and without the risk of their data being exposed externally or within the organization.

Samba-1 increases performance by up to 10x while simultaneously reducing inference costs, complexity, and management overhead.

Samba-1 has demonstrated superior performance in benchmarking tests, outperforming GPT-3.5 across all enterprise tasks and matching or exceeding GPT-4 on a significant subset of these tasks, but with a fraction of the computational footprint. The inclusion of SOTA expert models in its Composition of Experts model enhances accuracy and functionality for enterprise applications.
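To make the routing idea above more concrete, here is a minimal Python sketch of a "composition of experts" front end: one entry point that picks an expert per prompt and checks the caller's role before dispatching. This is only an illustration. SambaNova has not been quoted here on how Samba-1's router works, so the keyword scoring, the expert names, the roles, and the `route` function are all hypothetical stand-ins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Expert:
    """Hypothetical description of one expert behind the shared endpoint."""
    name: str
    keywords: tuple[str, ...]       # toy routing signal (assumption)
    allowed_roles: frozenset[str]   # toy role-based access control (assumption)


# Illustrative experts only; not Samba-1's real expert list.
EXPERTS = [
    Expert("text-to-sql", ("sql",), frozenset({"analyst", "engineer"})),
    Expert("text-to-code", ("python", "function", "code"), frozenset({"engineer"})),
]
FALLBACK = Expert("general-chat", (), frozenset({"analyst", "engineer", "guest"}))


def route(prompt: str, role: str) -> str:
    """Return the name of the expert that should handle this prompt."""
    text = prompt.lower()

    def score(expert: Expert) -> int:
        # Count how many of the expert's keywords appear in the prompt.
        return sum(keyword in text for keyword in expert.keywords)

    best = max(EXPERTS, key=score)
    if score(best) == 0:
        best = FALLBACK  # nothing matched, use the generalist
    if role not in best.allowed_roles:
        raise PermissionError(f"role {role!r} may not use expert {best.name!r}")
    return best.name


if __name__ == "__main__":
    print(route("Convert this question to SQL: total sales by region", "analyst"))
    print(route("Now wrap that SQL in a Python function", "engineer"))
```

A production router would more likely use a learned classifier or embeddings rather than keywords, but the shape is the same: many small models, one interface, and a per-expert access check.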

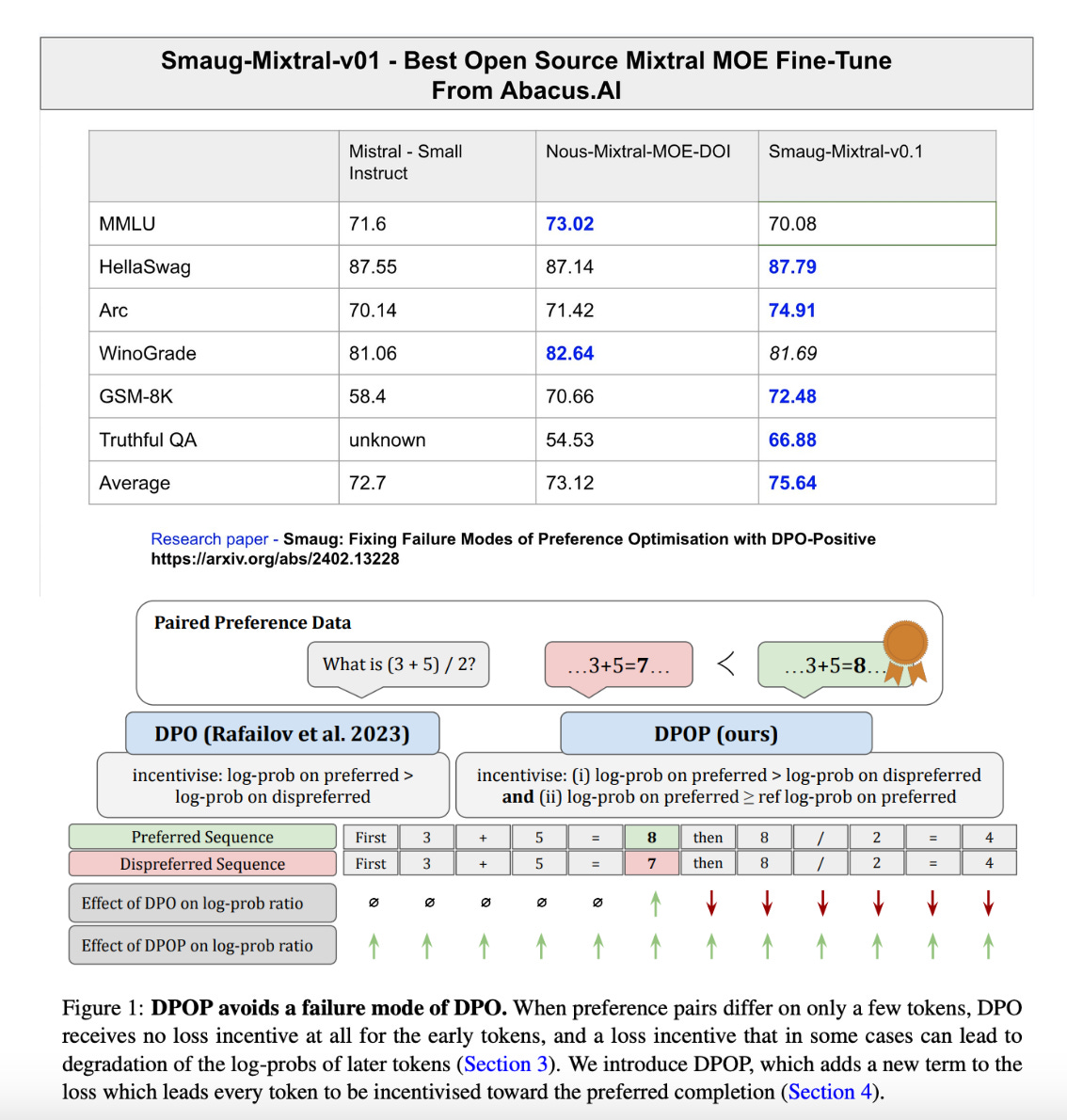

New fine-tuning method for enhanced accuracy in LLMs

Direct Preference Optimization (DPO) has been a staple in fine-tuning LLMs for better reasoning and summarization abilities, but it has a known failure mode: training can reduce the likelihood the model assigns to the preferred responses. DPO-Positive (DPOP) by Abacus AI is a refined strategy to fix this pitfall while enhancing overall model effectiveness. Abacus AI has also open-sourced Smaug-Mixtral models, fine-tuned with DPOP, achieving state-of-the-art performance, 2% better than any other open-source model on the HuggingFace Open LLM Leaderboard.

Key Highlights:

DPOP introduces a novel loss function that penalizes decreases in the likelihood of the preferred responses, enhancing the model's alignment with human preferences. This process involves utilizing preference data to iteratively adjust the model's parameters, ensuring improved performance across various tasks without compromising the accuracy of preferred outcomes.

DPOP significantly outperforms the DPO method across a wide variety of datasets and downstream tasks, including those with high edit distances between completions.

The Smaug-Mixtral suite of models (Smaug-34B and Smaug-72B) is fine-tuned using DPOP and tailored for Mixtral MoE architectures. Smaug-72B is the first open-source LLM to achieve an average accuracy of over 80% on the HuggingFace Open LLM Leaderboard, marking a notable improvement in model performance. Smaug-Mixtral also outperforms other models on several other benchmarks, including HellaSwag and GSM8K.
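For readers who want to see what "penalizing decreases in the likelihood of preferred responses" can look like, here is a rough per-example sketch in plain Python. It takes the standard DPO loss and subtracts a penalty whenever the fine-tuned model's log-probability for the preferred response falls below the reference model's. Treat it as an illustration of the idea, not Abacus AI's exact formulation: the function names, the argument names, and the default values of `beta` and `lam` are assumptions.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair of sequence log-probs."""
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    return -log_sigmoid(beta * margin)


def dpop_style_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected,
                    beta=0.1, lam=5.0):
    """DPO loss plus a penalty when the chosen response becomes less likely.

    lam (assumed hyperparameter) scales the penalty; the penalty is zero as
    long as the policy keeps the chosen response at least as likely as the
    reference model does.
    """
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    penalty = max(0.0, ref_chosen - policy_chosen)
    return -log_sigmoid(beta * (margin - lam * penalty))


if __name__ == "__main__":
    # Both responses got less likely, but the rejected one dropped more.
    # Plain DPO is fairly happy with this; the penalized loss is not.
    args = dict(policy_chosen=-12.0, policy_rejected=-20.0,
                ref_chosen=-10.0, ref_rejected=-15.0)
    print("DPO loss:       ", dpo_loss(**args))
    print("DPOP-style loss:", dpop_style_loss(**args))
```

The example values show the failure mode in miniature: the margin between chosen and rejected grows even though the chosen response itself became less likely, and only the penalized loss pushes back on that.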

Tune Gemini’s Output More Precisely 🎯

Google has rolled out a new feature in the Gemini web app for tuning responses more precisely. Just select the part of Gemini's response you want to change and click on the edit icon to modify the text. You can regenerate, shorten, elaborate, remove, or give Gemini some instructions to get the output you want.

Gemini may not accommodate changes with prompts that lack clear modification instructions, request unsupported text formatting, violate the Prohibited Use Policy, or are unclear or unachievable. Also, modifications cannot be applied to text from extensions, responses involving code (including code description and code blocks), or selections containing images. (Source)

Tools of the Trade ⚒️

Zapier Central: AI workspace where you can effortlessly automate tasks across over 6,000 apps by simply chatting with bots, eliminating the need for coding. It connects to live data sources and lets you teach the AI bots through natural language chat. The bots will continue to carry out these tasks autonomously including querying data, automating customer follow-ups, enriching leads, and more.

Appointment Scheduling through Synthflow: Synthflow’s AI voice agents can now autonomously handle appointment scheduling - check availability, find slots, and send booking details - all in real time during calls.

AgentHub: Automate a wide range of business workflows without the need for AI expertise, through a simple drag-and-drop interface and custom tools tailored to your business needs. It offers a rich library of pre-built automation templates for various domains such as sales, HR, software development, and more.

Winglang: Combines infrastructure and runtime code in one language for cloud development. It helps developers create, debug, and deploy applications more rapidly and securely, with less context switching.

😍 Enjoying so far, TWEET NOW to share with your friends!

Hot Takes 🔥

What makes the whole LLM/self-awareness debate so tricky is that neurons are not self-aware either. Instead, our neurons *simulate* a person, which experiences itself as self-aware. Can LLMs simulate a self-aware person too, or are they just simulating the simulation of a person? ~ Joscha Bach

If we are actually close to AGI, why do companies building AGI need to keep hiring more people? Wasn't the promise of AGI the median digital remote knowledge worker? ~ Aravind Srinivas

Meme of the Day 🤡

Elon Musk is on fire today!

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![central-demo-natural-language-4k.mp4 [video-to-gif output image]](https://substackcdn.com/image/fetch/$s_!weYq!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fced5c9bb-2a02-4607-bc88-389c2caf9495_800x551.gif)