Codestral 22B outperforms Llama-3 70B

PLUS: Why Sam Altman was fired, New winner in LLM Speed Test

Today’s top AI Highlights:

First-ever code model by Mistral AI that supports 80+ programming languages

SambaNova Sets Record in AI Speed Test on Llama 3 8B

“Toxic atmosphere and manipulation” - Ex-Board member of OpenAI gives shocking revelations on why Sam Altman was fired

& so much more!

Read time: 3 mins

Latest Developments 🌍

Developers Gain New AI Assistant with Codestral 22B 👩‍💻

Mistral AI has released Codestral, its first-ever code model, explicitly designed for code generation. Codestral supports over 80 programming languages, including popular ones like Python and Java and more specialized ones like Swift and Fortran. With 22 billion parameters and a fill-in-the-middle mechanism, Codestral can save developers time and reduce coding mistakes. The model is available on Hugging Face.

Key Highlights:

Architecture: Codestral has 22 billion parameters and a context window of 32k tokens, larger than other models fine-tuned for coding, which have a maximum context size of 16k tokens.

Capabilities: Codestral supports over 80 programming languages, making it versatile for various coding projects. It features a fill-in-the-middle mechanism to complete partial code and write tests.

Performance: According to Mistral AI, the model outperforms other models used for coding, including Llama 3 70B and DeepSeek Coder 33B, across coding benchmarks.

Availability: Codestral is integrated into popular tools like LlamaIndex, LangChain, Continue.dev, and Tabnine, allowing seamless use within VSCode and JetBrains environments. It is available on Le Chat and via API for free for an 8-week beta period.
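
For readers curious about what "fill-in-the-middle" means in practice, here is a minimal sketch of the general idea: the code before and after a gap is packed into one prompt, and the model's completion is spliced back into the gap. The sentinel strings and function names below are hypothetical placeholders for illustration, not Codestral's actual special tokens or API, and the completion is a hard-coded stand-in for a real model call.

```python
# Hypothetical sentinel strings; real models define their own special tokens.
PREFIX_TAG = "<PRE>"
SUFFIX_TAG = "<SUF>"
MIDDLE_TAG = "<MID>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Pack the code before and after the gap into a single prompt."""
    return f"{PREFIX_TAG}{prefix}{SUFFIX_TAG}{suffix}{MIDDLE_TAG}"


def splice_completion(prefix: str, completion: str, suffix: str) -> str:
    """Insert the model's completion back into the gap."""
    return prefix + completion + suffix


# Code on either side of the cursor position.
prefix = "def add(a, b):\n    "
suffix = "\n\nprint(add(2, 3))\n"
prompt = build_fim_prompt(prefix, suffix)

# Stand-in for a model call; a real completion would come from the model.
completion = "return a + b"
full_source = splice_completion(prefix, completion, suffix)
```

Because the model sees the suffix as well as the prefix, it can produce a middle that fits what comes next, which is what makes this useful for editor-style completions.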

Samba-1 Turbo Tops AI Speed Test Leaderboard 🔥

SambaNova Systems has set a new benchmark for LLM inference speed. Their Samba-1 Turbo system, using 16 chips, achieved a blazing 1,084 tokens per second (t/s) on Meta’s Llama 3 Instruct 8B, surpassing previous records. This performance, verified by Artificial Analysis, is over 8x faster than the median output speed across other providers. This significant leap in speed is useful for real-time applications and high-volume document interpretation.

Key Highlights:

Samba-1 Turbo’s performance outpaces that of other providers, including Groq, which achieved 877 t/s on Llama 3 8B. This speed is achieved using a proprietary Dataflow technology that accelerates data movement on the SN40L chips, minimizing latency and maximizing processing throughput.

The Samba-1 Turbo platform can concurrently host up to 1,000 Llama 3 checkpoints on a single 16-socket SN40L node. This means developers can run multiple models simultaneously, significantly increasing the efficiency and scalability of their AI workloads.

Their approach offers a significantly lower total cost of ownership compared to competitors. This is achieved by utilizing fewer chips to achieve higher performance.
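
To put the reported numbers in perspective, here is a small back-of-the-envelope sketch using only the figures quoted above (1,084 t/s, 877 t/s, and "over 8x" the median). The 500-token response length is a hypothetical value chosen purely for illustration.

```python
SAMBANOVA_TPS = 1084  # tokens per second, as reported for Samba-1 Turbo
GROQ_TPS = 877        # tokens per second, as reported for Groq


def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream a response at a steady output speed."""
    return num_tokens / tokens_per_second


# Relative speed of the two reported results.
speedup_vs_groq = SAMBANOVA_TPS / GROQ_TPS

# "Over 8x the median" implies the median provider is below this speed.
median_upper_bound = SAMBANOVA_TPS / 8

# Hypothetical response length, chosen only for illustration.
RESPONSE_TOKENS = 500
sambanova_seconds = seconds_to_generate(RESPONSE_TOKENS, SAMBANOVA_TPS)
groq_seconds = seconds_to_generate(RESPONSE_TOKENS, GROQ_TPS)
```

Under these figures, the lead over Groq works out to roughly 1.24x, and the median provider would be running below about 135.5 t/s.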

The Dark Secrets Behind Sam Altman’s Ouster 😵‍💫

Since Sam Altman was ousted from OpenAI in November 2023, the company’s credibility has taken a hit. Employees are resigning, Elon Musk has sued OpenAI for being “closed AI”, restrictive NDAs for ex-employees have come to light, and a lot more! But no one really knows how it all started and what went on inside OpenAI when the Board decided to fire Altman.

In a podcast with Bilawal Sidhu at The TED AI Show, Helen Toner spoke at length about what happened then, why the Board had to decide to fire Altman, and more on why self-regulation is not enough for AI companies. Here’s what happened, straight from the horse’s mouth:

For years, Sam had made it difficult for the Board to do its job by withholding information, misrepresenting things that were happening in the company, and “outright lying to the Board.”

When ChatGPT came out in November 2022, the Board was not informed in advance; its members learned about ChatGPT on Twitter.

Sam did not inform the Board that he owned the OpenAI Startup Fund even though he claimed to be an independent Board member with no financial interest in the company.

On multiple occasions, he gave the Board inaccurate information about the number of safety processes the company had in place. It was impossible for the Board to know how well those processes were working.

Helen had co-written a research paper for policymakers last year in which she criticized the release of ChatGPT for prompting companies like Google to circumvent safety checks and hurry the release of their chatbot Bard. The paper also praised OpenAI’s competitor Anthropic for deciding “not to productize its technology in order to avoid stoking the flames of AI hype.”

After the paper came out, Sam started lying to other Board members to push Helen off the Board.

There were more individual issues where Sam would come up with “innocuous-sounding explanations” of why it wasn’t a big deal or was misinterpreted. After years of this, all four Board members who fired him concluded that they couldn’t believe the things he was telling them.

That is a completely unworkable position, especially for a Board that is supposed to provide independent oversight over the company and not help the CEO raise more money.

In October last year, two of the executives told the Board about their own experiences with Sam, which they hadn’t been comfortable sharing before. They described how they couldn’t trust him and “the toxic atmosphere he was creating.” They used the phrase “psychological abuse,” and said that he wasn’t the right person to lead the company to AGI and that they had no belief that he would change.

They even sent the Board screenshots and documentation of instances where he was lying and being manipulative.

As soon as Sam had an inkling that the Board might do something that went against him, he would pull out all the stops to undermine the Board, to the point that it wouldn’t even be able to fire him.

When Sam was fired, the employees were given two options: either Sam comes back immediately with no accountability and a new Board of his choice or “the company will be destroyed.” That’s why people got in line and supported Sam.

Sam was fired from his previous job at Y Combinator, which was hushed up. At his job before that, his start-up Loopt, the management team apparently went to the board twice and asked it to fire him for “deceptive and chaotic behaviour”.

✍️ Build Personalized Marketing Chatbots with Google Gemini

Learn how to build personalized marketing chatbots with Google Gemini and LoRA in just 60 minutes. Join this FREE webinar for live demos and hands-on code sharing. Register now before the spots get filled!

😍 Enjoying it so far? Share it with your friends!

Tools of the Trade ⚒️

Apten: An AI SMS assistant that automates sales, marketing, and customer service for B2C businesses via SMS. It customizes communication flows, follows up with leads, and responds 24/7, ensuring no lead is forgotten.

micro1: An AI-powered recruitment engine that interviews over 20,000 engineers monthly using AI and gpt-vetting, ensuring only the top 1% is available for hire. This platform handles global compliance, payroll, and benefits, so you hire deeply vetted engineers quickly.

Kerlig: An AI writing assistant for macOS that integrates with any app using your own API key from OpenAI, Claude, Gemini Pro, or Groq. It helps you fix spelling and grammar, change tone, write replies, and answer questions directly within any selected text.

Awesome LLM Apps: Build awesome LLM apps using RAG for interacting with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple text prompts. These apps let you retrieve information, engage in chat, and extract insights directly from content on these platforms.

Hot Takes 🔥

Prediction: AI will displace social drinking within 5 years

Just as alcohol is a social disinhibitor, like the Steve Martin movie Roxanne, people will use AI powered earbuds to help them socialize. At first we'll view it as creepy, but it will quickly become superior to alcohol ~

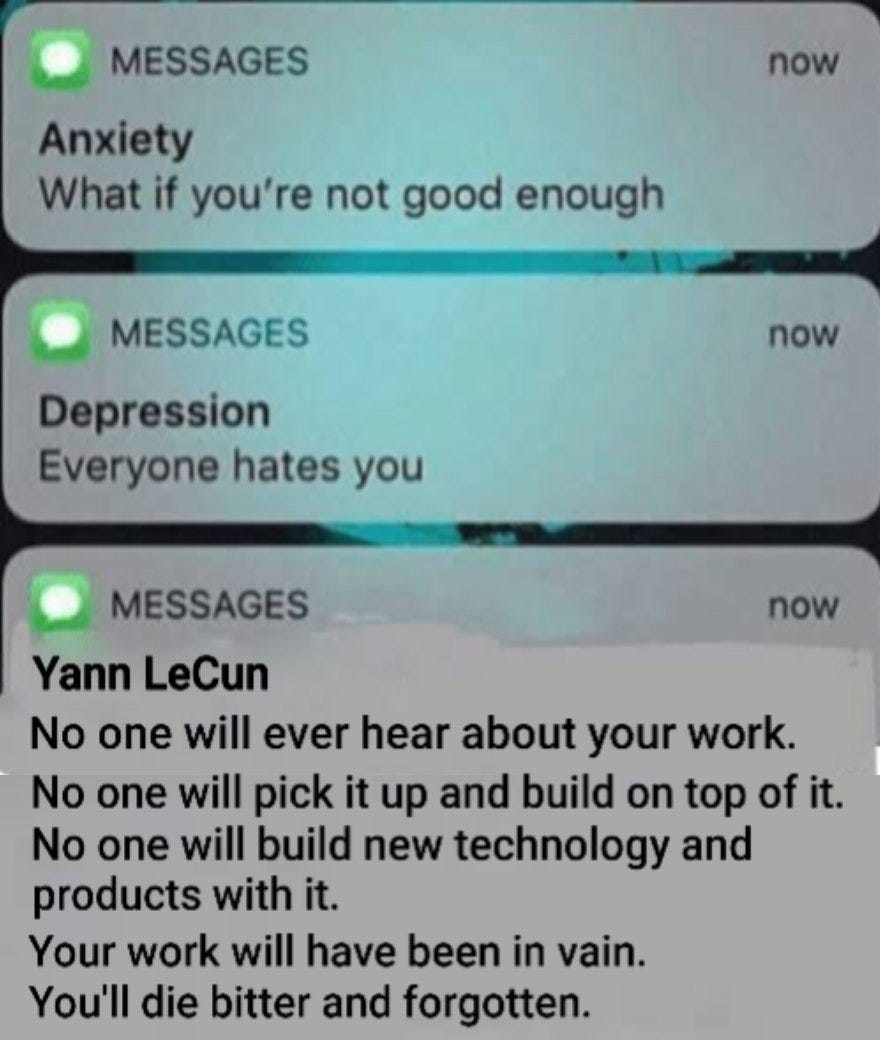

Jonathan Ross

To qualify as Science a piece of research must be correct and reproducible.

To be correct and reproducible, it must be described in sufficient details in a publication.

To be 'published' (to receive a seal of approval) the publication must be checked for correctness by reviewers.

To be reproduced, the publication must be widely available to the community and sufficiently interesting.

If you do research and don't publish, it's not Science.

Without peer review and reproducibility, chances are your methodology was flawed and you fooled yourself into thinking you did something great.

No one will ever hear about your work.

No one will pick it up and build on top of it.

No one will build new technology and products with it.

Your work will have been in vain.

You'll die bitter and forgotten.

If you never published your research but somehow developed it into a product, you might die rich. But you'll still be a bit bitter and largely forgotten. ~

Yann LeCun

Meme of the Day 🤡

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!
