AI Voice Cloning with < 3 Seconds of Audio 🤯

PLUS: Language to Quadrupedal Motion, How Knowledgeable are LLMs?

Hey there 👋

There’s never a dull moment in AI, and today is no exception. Ever imagined cloning a voice with only 3 seconds of reference audio? It is possible!

Shifting gears, a Google project is bridging the gap between human communication and quadrupedal robots by turning natural language into fluid movements. Meanwhile, Meta’s CoTracker arrives with cutting-edge video motion prediction capabilities. Lastly, as we increasingly rely on AI chatbots, we’ll explore how accurate LLMs are on lesser-known facts.

Let’s dive right in!

This issue covers:

Latest Developments 🌍

Tools of the Trade ⚒️

Hot Takes 🔥

AI Meme of the Day 🤡

Read time: 3 mins

Latest Developments 🌍

SayTap: Making Robots Dance to Your Words 🦿

Meet SayTap, a project from Google that helps four-legged robots understand human language. Imagine telling a robot to "walk slowly" or "jump high" and watching it follow your commands like a real pet! The system uses foot contact patterns and language models to translate your words into the robot's movements.
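Curious what a "foot contact pattern" might look like? Here is a minimal sketch, assuming a pattern is just a grid of 0s and 1s with one row per leg, where 1 means the foot is on the ground and 0 means it is in the air. The leg names, the number of time steps, and the `trot_pattern` helper are illustrative assumptions, not code from the SayTap project.

```python
# Hypothetical sketch: a foot contact pattern as a 4 x T grid of 0s and 1s.
# 1 = foot on the ground, 0 = foot in the air. Leg names and sizes are assumptions.
LEGS = ["FL", "FR", "RL", "RR"]  # front-left, front-right, rear-left, rear-right


def trot_pattern(steps=8, half_cycle=2):
    """Build a trot-like pattern: diagonal leg pairs alternate ground contact."""
    pattern = {leg: [] for leg in LEGS}
    for t in range(steps):
        # The FL/RR pair is down during even half-cycles, the FR/RL pair otherwise.
        first_pair_down = (t // half_cycle) % 2 == 0
        for leg in LEGS:
            in_first_pair = leg in ("FL", "RR")
            pattern[leg].append(1 if in_first_pair == first_pair_down else 0)
    return pattern


def render(pattern):
    """Format the pattern as one line of 0s and 1s per leg."""
    return "\n".join(
        f"{leg}: {''.join(str(v) for v in row)}" for leg, row in pattern.items()
    )


if __name__ == "__main__":
    print(render(trot_pattern()))
```

In a setup like this, a language model's job would be to turn a request such as "walk slowly" into a grid of this kind, and a separate controller would then move the legs to match it.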

CoTracker: Track Videos Smarter, Not Harder 🎥

Meet CoTracker, a new approach to video motion prediction. CoTracker keeps tabs on multiple points in a video at once and accounts for how they relate to each other. Built on a transformer network with specialized attention layers, the system delivers efficiency and accuracy that outpace current methods.

Clone Any Voice Using AI with < 3 Secs of Audio 🤯

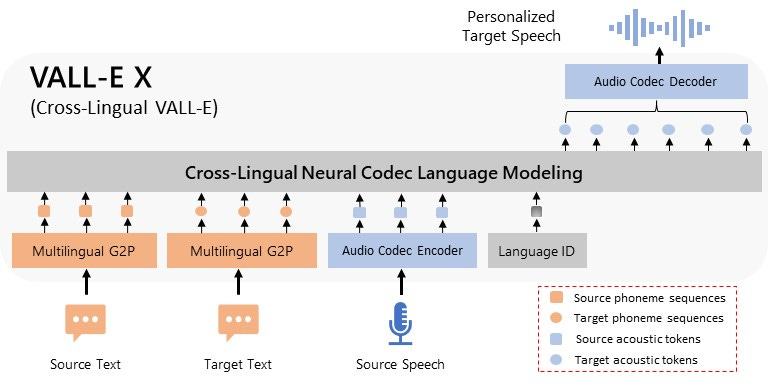

VALL-E X is an amazing multilingual text-to-speech (TTS) model proposed by Microsoft. Microsoft published a research paper but did not release any code or pre-trained models. Now there is an open-source implementation of VALL-E X that you can use to clone any voice on your own computer.

Can LLMs Replace Knowledge Graphs? 🤔

In a new study, researchers ask: how knowledgeable are LLMs? Using a clever benchmark called Head-to-Tail, they evaluate the factual knowledge of 14 public LLMs. While these chatbots may seem like know-it-alls, they still have a long way to go, particularly with less popular facts.
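To make the idea concrete, here is a minimal sketch of scoring a model separately on popular and less popular facts. The bucket names ("head", "torso", "tail"), the `accuracy_by_bucket` helper, and the toy records are assumptions for illustration only. They are placeholder values, not results from the study.

```python
from collections import defaultdict

# Hypothetical toy records: (popularity bucket, whether the model answered correctly).
# These are placeholder values for illustration, not results from the study.
RECORDS = [
    ("head", True), ("head", True), ("head", False), ("head", True),
    ("torso", True), ("torso", False), ("torso", False), ("torso", True),
    ("tail", False), ("tail", False), ("tail", True), ("tail", False),
]


def accuracy_by_bucket(records):
    """Return the fraction of correct answers for each popularity bucket."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for bucket, is_correct in records:
        totals[bucket] += 1
        correct[bucket] += int(is_correct)
    return {bucket: correct[bucket] / totals[bucket] for bucket in totals}


if __name__ == "__main__":
    for bucket, acc in accuracy_by_bucket(RECORDS).items():
        print(f"{bucket}: {acc:.0%}")
```

Reporting one score per bucket, rather than a single overall number, is what makes a gap between popular and obscure facts visible.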

Tools of the Trade ⚒️

Voxel: Uses AI and security cameras for site safety, risk management, ergonomic assessment, vehicle safety, PPE tracking, and efficiency gains.

VemoAI: Transform messy spoken words into clear, formatted content for brainstorming, content creation, journaling, interviews, and more.

Motion Slider in Gen-2: Control the amount of movement in your video output by selecting a value from 1 to 10.

VirtualBrain: AI assistant that helps organizations streamline knowledge management, share content, turn their knowledge into a chatbot, and create multiple VirtualBrains.

RestoGPT: Free AI-driven restaurant online ordering storefront with integrated POS and delivery for effortless autopilot operations.

😍 Enjoying it so far? TWEET NOW to share with your friends!

Hot Takes 🔥

The best benchmark I can think of is people recommending a model. This is what happens with GPT-4, you'll see plenty of folks saying use GPT-4 because it works well. Benchmarks don't capture that essence, people do ~ anton

It's actually possible to achieve SOTA on all NLP tasks using RegEx, but no one knows how to actually use it. ~ Bojan Tunguz

Momentum is going to start accelerating in XR/Spatial this Fall. Anecdotal and subjective, but I can sense it. ~ Jonathan Chavez

Meme of the Day 🤡

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!!

PS: I curate this AI newsletter every day for FREE, and your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![[optimize output image] [optimize output image]](https://substackcdn.com/image/fetch/$s_!h_gD!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fc88b3b45-820c-4b11-b169-43caf1b6c924_800x450.gif)

![[optimize output image] [optimize output image]](https://substackcdn.com/image/fetch/$s_!3kgY!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F0be73547-5a29-459d-aa85-0137e78245b3_800x450.gif)

![[optimize output image] [optimize output image]](https://substackcdn.com/image/fetch/$s_!aBE6!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F0e7909d4-2591-4ec3-ada0-9e7cc46ad42f_600x339.gif)