AI Pin Startup Humane up for Sale 🏷️

PLUS: PyTorch library for robotic training, Anthropic peeks inside the mind of an LLM

Today’s top AI Highlights:

Anthropic researchers peek inside black box LLMs to learn how AI ‘thinks’

Hugging Face releases a new PyTorch library for real-world robotic training

Humane is Seeking a Buyer After AI Pin’s Disappointing Launch

AI notepad for meetings that turns patchy, unstructured notes into clean, readable ones

& so much more!

Read time: 3 mins

Latest Developments 🌍

Mapping the Mind of a Large Language Model 🧠

It’s a major challenge to understand how LLMs work internally. We treat them like black boxes: input goes in, output comes out, and we often don’t know how and why. This lack of transparency raises concerns about their safety and reliability. But a team of researchers at Anthropic has made significant progress in peering inside these black boxes. They’ve developed a method to map the concepts that LLMs use to “think” and generate text. This discovery could have huge implications for making AI safer and more reliable.

Key Highlights:

Millions of Concepts Mapped: The team mapped millions of concepts represented inside Claude 3 Sonnet using a technique called ‘dictionary learning.’ This technique finds patterns of neuron activations that correspond to specific concepts.

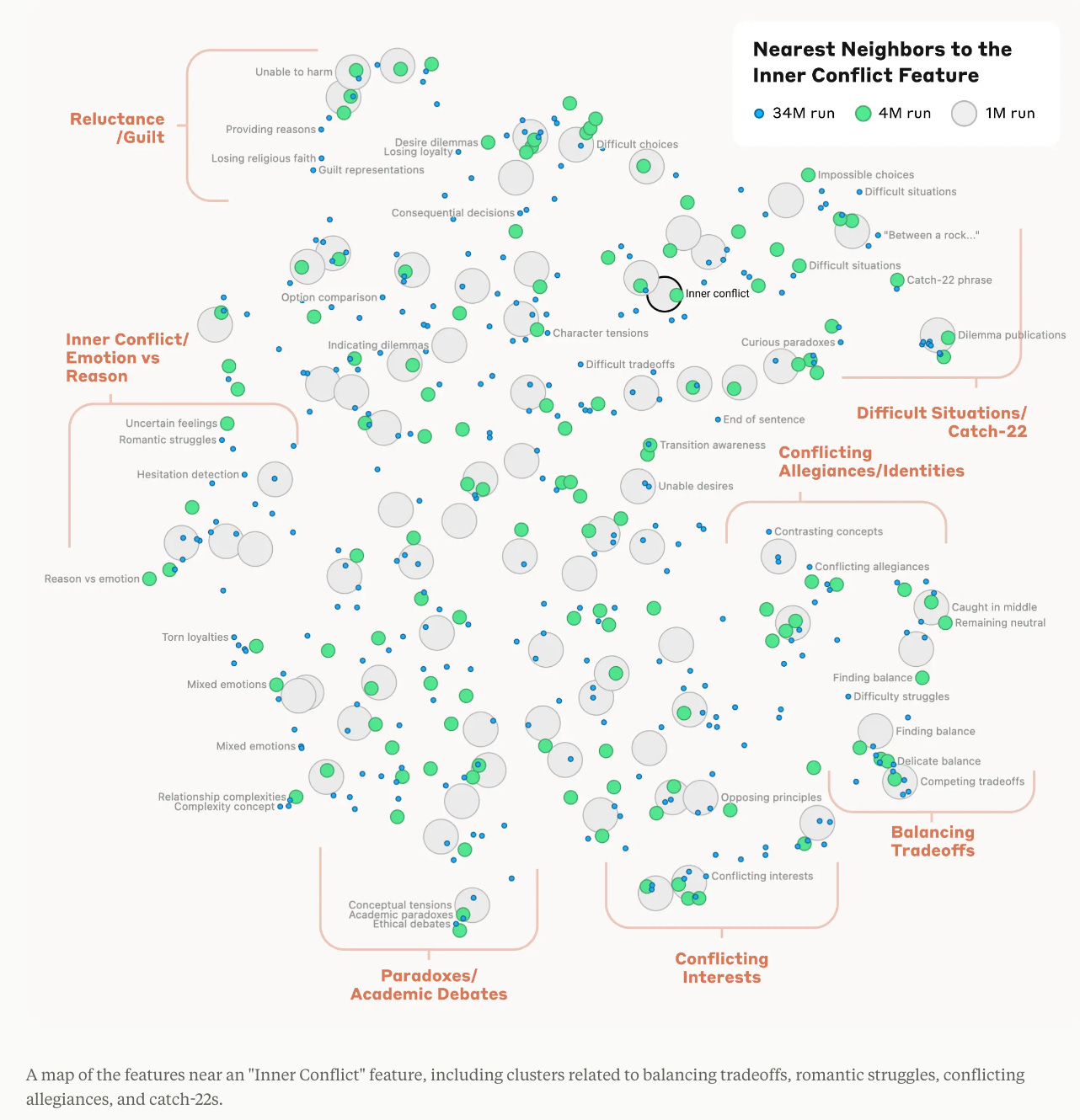

Features Go Beyond the Surface: The mapped concepts are more complex than simple words. They represent things like cities, people, scientific fields, and even abstract concepts like gender bias or keeping secrets. The researchers were even able to identify a feature related to ‘inner conflict.’

Features Can Be Manipulated: These features could also be manipulated, causing changes in the model’s output. For example, artificially amplifying the ‘Golden Gate Bridge’ feature made the LLM obsessed with the bridge, mentioning it in response to almost any question.

Features Linked to Potential Misuse: The research identified features connected to potentially harmful capabilities like creating code backdoors or developing biological weapons. This is a critical finding as it helps pinpoint areas where LLMs could be misused.

Potential for Safer AI: This research opens the door to new ways to improve AI safety. The findings could be used to identify and mitigate dangerous behaviors, steer LLMs towards desirable outcomes, and even remove harmful content from their output.
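
To make the ‘dictionary learning’ and feature manipulation ideas above a little more concrete, here is a minimal toy sketch in Python with NumPy. It is not Anthropic’s code: the weights are random rather than trained, the sizes are tiny, and the names (`encode`, `decode`, `steer`, the `bridge` index) are hypothetical and chosen only for illustration. It assumes the common setup in which an activation is encoded into non-negative feature strengths, decoded back as a weighted sum of feature directions, and steered by clamping one feature to a high value.

```python
import numpy as np


def encode(activation, w_enc, b_enc):
    """Map a model activation to non-negative feature strengths (ReLU)."""
    return np.maximum(0.0, w_enc @ activation + b_enc)


def decode(features, w_dec):
    """Rebuild an activation as a weighted sum of feature directions."""
    return w_dec @ features


def steer(activation, w_enc, b_enc, w_dec, feature_idx, value):
    """Clamp one feature to `value`, then decode the edited features."""
    features = encode(activation, w_enc, b_enc)
    features[feature_idx] = value
    return decode(features, w_dec)


# Toy sizes and random weights: assumptions for illustration only.
rng = np.random.default_rng(0)
d_model, n_features = 4, 8  # more features than dimensions (overcomplete)
w_dec = rng.normal(size=(d_model, n_features))
w_dec /= np.linalg.norm(w_dec, axis=0)  # unit-length feature directions
w_enc = w_dec.T.copy()
b_enc = np.zeros(n_features)

activation = rng.normal(size=d_model)
features = encode(activation, w_enc, b_enc)
baseline = decode(features, w_dec)

bridge = 3  # hypothetical index standing in for a "Golden Gate Bridge" feature
steered = steer(activation, w_enc, b_enc, w_dec, bridge, value=10.0)
shift = steered - baseline  # change caused by clamping that one feature
```

In this sketch, `shift` is just a multiple of the clamped feature’s decoder direction, which is the toy analogue of pushing the model toward one concept regardless of the input.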

Hugging Face’s Library to Kick-Start Robotics Training 🦾

Hugging Face has released LeRobot, a new PyTorch-based library that aims to make real-world robotics more accessible to researchers and developers. LeRobot provides a suite of pre-trained models, datasets with human demonstrations, and simulation environments, all designed to help researchers train robots efficiently. It is designed to be user-friendly, with clear documentation and examples. Remi Cadene, who built the library, says “LeRobot is to robotics what the Transformers library is to NLP.”

Key Highlights:

LeRobot offers a variety of pre-trained models and datasets, including human demonstrations, which can be used to train models for various robotics tasks. Users can start building their own robotics applications without the need to collect data.

LeRobot includes support for Weights & Biases for experiment tracking, so users can monitor model training progress and compare different hyperparameters.

LeRobot’s codebase has been validated by replicating state-of-the-art results in simulations, ensuring that the library is robust and reliable.

AI Wearable Startup Humane Faces Uncertain Future 🌥️

Humane, the company behind the AI Pin that was supposed to be the next big thing, is reportedly looking for a buyer. The company, founded by ex-Apple employees Imran Chaudhri and Bethany Bongiorno, has drawn criticism from left, right and centre after the underwhelming release of the AI Pin.

Last year it was valued by investors at $850 million. The company raised $230 million from a roster of high-profile investors including OpenAI CEO Sam Altman, even before it sold its first product. Companies like Meta and Rabbit have been trying to establish a space for AI wearables but it doesn’t seem to be happening anytime soon.

This is a classic case of a startup raising millions by riding the “AI” wave and becoming overvalued, only for the bubble to burst later. However, the founders may still be able to turn it around by steadily improving the technology and making it a viable product.

😍 Enjoying it so far? Share it with your friends!

Tools of the Trade ⚒️

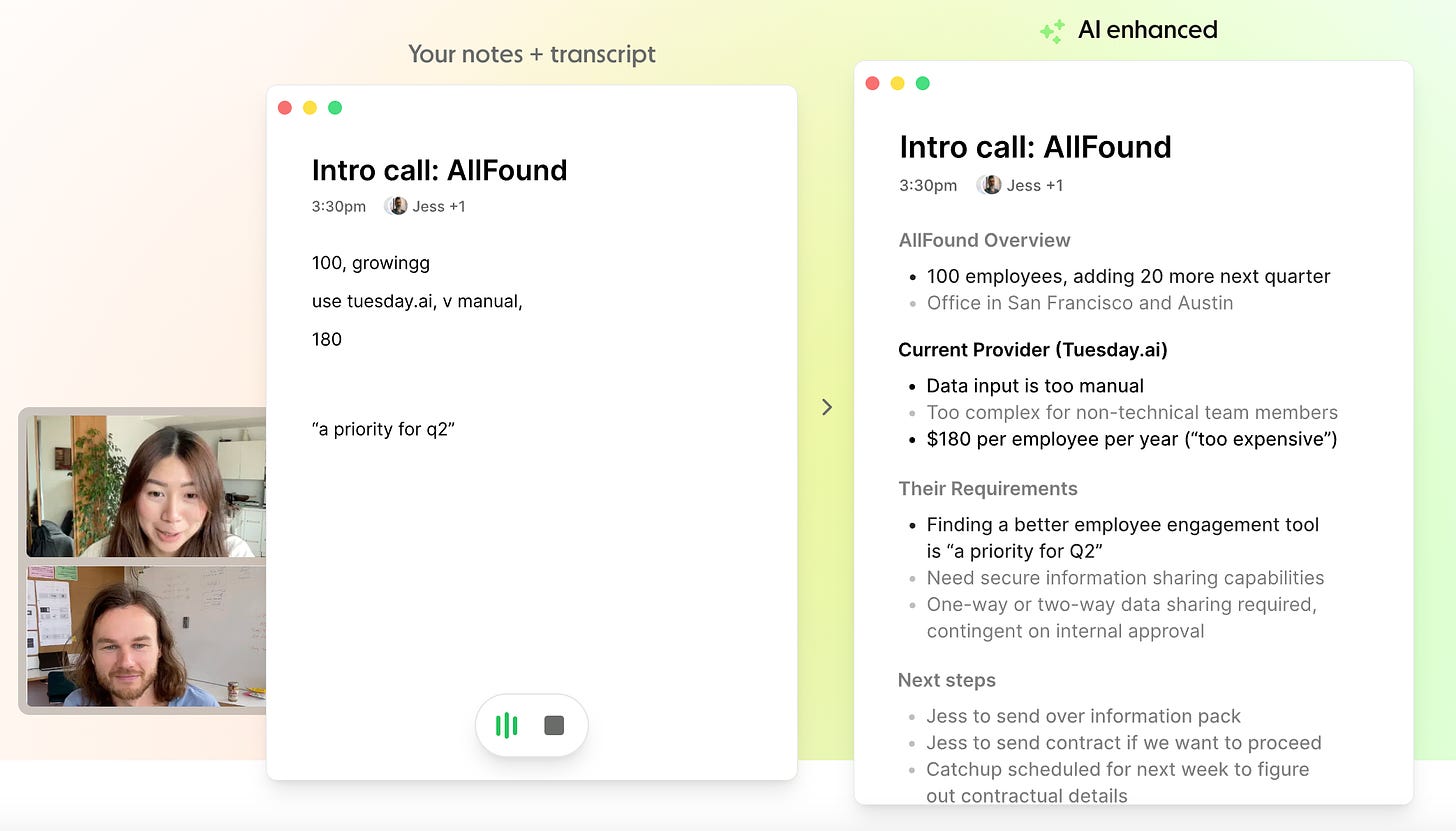

Granola: An AI notepad for people who attend back-to-back meetings. It transcribes your meeting audio and uses it to turn raw, unstructured meeting notes into readable notes that can be shared with everyone. With GPT-4 built in, it can also help you with your post-meeting action items.

Multi Agent Flow in Flowise AI: Have multiple AI agents work together to complete complex tasks more efficiently by delegating specific roles and responsibilities. Powered by LangGraph, each AI agent has dedicated prompts, tools, and models. Reflective loops for auto-correction and specialized functions improve performance on long-running tasks.

Codium Cover Agent: Automates the generation of unit tests and improves code coverage for software projects. It uses generative AI to create and validate tests and to streamline development workflows. It can run via a terminal, and integration with popular CI platforms is planned.

FlyCI: Automatically fixes your failing CI builds, saving you time by reducing debugging effort. You can start using it quickly by changing just one line in your GitHub workflow, and focus on building instead of troubleshooting.

Awesome LLM Apps: Build awesome LLM apps using RAG to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple text prompts. These apps let you retrieve information, chat, and extract insights directly from content on these platforms.

Hot Takes 🔥

If you are a student interested in building the next generation of AI systems, don't work on LLMs ~

Yann LeCun

Hypothetically if I was the CEO of a major AI company (OpenAI) and I wanted the industry to be regulated to ensure there is no competition, what would I do?

Maybe clone the voice of a mainstream actor and then release it causing an uproar and demands for regulation from Hollywood and the general public.

The company is not public so there are no repercussions in the equity markets + I can just do a monetary settlement with the actor (Scarlett).

Some 4-D chess here? ~

Karma

Meme of the Day 🤡

Has Sky been cheating on me?

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, and your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!