Top AI Breakthroughs from NVIDIA GTC 2024

20 major AI launches from the biggest AI conference of the year.

Nvidia GTC 2024 was one of the most pivotal events for the future of AI and Robotics. The four-day event witnessed a number of releases and announcements from major tech companies, with Jensen Huang’s, CEO of Nvidia, stealing the show. They even introduced what they're calling the "Most Powerful AI Supercomputer," and let me tell you, and the AI community is still talking about it!

To make sure you don't miss out, I've put together a list of the top 20 announcements from the event that you've just got to see.

Processors for Trillion parameter scale GenAI

1. Blackwell B200 GPU:

Combines two of the largest dies possible to build a single GPU, housing 208 billion transistors. Compared to its predecessor Hopper, it has an additional 128 billion transistors, delivering 5x the AI performance and 4x the on-die memory.

Blackwell-architecture GPUs are manufactured using a custom-built 4NP TSMC process with two-reticle limit GPU dies connected by 10 TB/second chip-to-chip link into a single, unified GPU.

Blackwell’s B200 GPU offers up to 20 petaflops of FP4 computing power, whereas Hopper delivers 4 petaflops of FP8 computing.

Second-generation Transformer Engine introduces new precision levels and micro-tensor scaling, enabling more efficient and accurate AI operations with FP4 support, thereby doubling the performance and capacity for future models.

Training a 1.8 trillion parameter GPT-4 model with Blackwell GPUs requires only 2,000 blackwell GPUs and consumes 4 megawatts of power, as opposed to the 8,000 Hopper GPUs and 15 megawatts needed previously. On a GPT-3 benchmark, the GB200 delivers 7x the performance and 4x the training speed improvement compared to the H100.

In a CNBC interview, Nvidia CEO Jensen Huang said a Blackwell GPU will cost between $30,000 to $40,000. He also said Nvidia spent $10 billion to develop Blackwell.

2. GB200 Grace Blackwell Superchip:

A cutting-edge processor designed for trillion-parameter-scale generative AI, combining two Nvidia B200 GPUs and a Grace CPU with 72 Arm Neoverse cores over a high-speed 900GB/s NVLink chip-to-chip interconnect

This powerful configuration provides 40 petaFLOPS of AI performance, supported by 864GB memory and an incredible 16TB/sec memory bandwidth, enhancing its capacity to handle complex AI workloads efficiently.

It’s memory coherent, so it’s like one big AI computer working on a single application together with no memory localization issues

The Superchip’s architecture includes several transformative technologies such as a fifth-generation NVLink for high-speed GPU communication, a dedicated RAS (reliability, availability, and serviceability) engine for system resilience, secure AI features for data protection, and a decompression engine to accelerate database queries.

GB200 Superchips delivers up to a 30x performance increase compared to the Nvidia H100 Tensor Core GPU for LLM inference workloads and reduce cost and energy consumption by up to 25x.

3. Nvidia DGX B200: High-Performance AI Training and Inference

Nvidia DGX B200 marks the sixth generation in Nvidia’s lineup of traditional, air-cooled rack-mounted designs tailored for AI model training, fine-tuning, and inference tasks.

It houses 8 Nvidia B200 GPUs and is powered by two 5th Gen Intel Xeon processors. It's engineered to provide up to 144 petaflops of AI performance, equipped with 1.4TB of GPU memory and 64TB/s of memory bandwidth.

The DGX B200 is specifically designed to be 15x faster at real-time inference for trillion-parameter models than its predecessors, showcasing its capability to efficiently manage extensive AI tasks without the need for a data-center-scale infrastructure.

4. Nvidia DGX SuperPOD: Data-Center-Scale AI Supercomputing

The next-generation data-center-scale AI supercomputer- the Nvidia DGX SuperPOD powered by Nvidia GB200 Superchips - is designed for processing trillion-parameter models with constant uptime for superscale generative AI training and inference workloads.

DGX SuperPOD features 8 or more DGX GB200 systems and can scale to tens of thousands of GB200 Superchips connected via Nvidia Quantum InfiniBand. For a massive memory to power next-generation AI models, customers can deploy a configuration that connects the 576 Blackwell GPUs in eight DGX GB200 systems connected via NVLink.

5. AWS + Nvidia to Power Multi-trillion-parameter LLMs Projects

AWS and Nvidia have collaborated enabling customers to dramatically scale their computational capabilities. AWS will offer Nvidia Grace Blackwell GPU-based Amazon EC2 instances and Nvidia DGX Cloud to boost performance for generative AI training and inference.

Customers can tackle even the most compute-intensive projects, from training multi-trillion parameter LLMs to conducting sophisticated data analyses.

To protect customers’ sensitive data, Nvidia’s Blackwell encryption will be integrated into AWS services to ensure end-to-end security control. Customers can confidently secure their training data and model weights against unauthorized access.

The Future of Chip Fabrication

6. Computation Lithography Platform for Accelerating Chip Production

Nvidia has teamed up with TSMC, the leading semiconductor foundry, and Synopsys, a frontrunner in silicon-to-systems design solutions, to roll out Nvidia's computational lithography platform, cuLitho, into production. This aims to speed up the making of the next-gen semiconductor chips.

Computational lithography is about creating the blueprints for a chip’s design on a silicon wafer. It is the most compute-intensive workload in semiconductor manufacturing, requiring over 30 million hours of CPU compute time annually.

Nvidia has introduced cuLitho, a platform that leverages GPU acceleration to dramatically enhance the process. By bringing in 350 of its H100 systems, Nvidia claims it can do the work of 40,000 CPU systems. Nvidia has also introduced new generative AI algorithms that can predict and generate the optimal patterns for chip designs, speeding up the lithography process further.

The integration of cuLitho has led to dramatic performance improvements, speeding up the workflows by 40-60x.

Omniverse and AI-powered Digital Twins

7. API for Creating and Testing Digital Twins 👯

Nvidia has launched Omniverse Cloud APIs for developing digital twins, which are virtual replicas of physical systems that allow companies to simulate, test, and optimize products and processes before they are implemented in the real world. Omniverse is a platform for designing and deploying 3D applications, powered by the OpenUSD framework.

Digital twins are virtual copies of real-world physical objects like cars or buildings. Before making changes in the real world—like adjusting how a machine works or trying out a new layout for a building—you can test it on the digital twin to see if it's a good idea.

These APIs are already accelearating the development of autonomous machines by enabling developers to efficiently train, test, and validate autonomous systems, such as self-driving vehicles and robots. Through high-fidelity, physically based sensor simulation, companies can simulate real-world conditions more accurately, speeding up the development process while reducing the costs and risks associated with physical prototyping.

Nvidia has also demonstrated how digital twins with real-time AI can enable developers to create, test, and refine large-scale AI solutions within simulated environments before deployment in industrial settings.

8. Omniverse Expands Worlds Using Apple Vision Pro

Blending digital twins, spatial computing and ease for developers, Nvidia has expanded Omniverse Cloud API to support the Apple Vision Pro by enabling developers to stream interactive, industrial digital twins into the device. This cloud-based approach allows real-time physically based renderings to be streamed seamlessly to Apple Vision Pro, delivering high-fidelity spatial computing experiences.

9. Digital Twin of Earth for Weather Modelling

Earth-2 is a digital twin cloud platform for simulating and visualizing weather and climate on an unprecedented scale. The Earth-2 platform offers new cloud APIs on the Nvidia DGX Cloud for users to create AI-powered emulations for interactive, high-resolution simulations. These simulations can cover various aspects, from global atmospheric conditions to specific weather events like typhoons and turbulence.

Earth-2 employs a SOTA diffusion-based generative AI model, CorrDiff. It can produce images that are 12.5x more detailed than those generated by current numerical models, and it does so 1,000x faster and 3,000x more energy-efficiently. It generates critical new metrics for decision-making, effectively learning the physics of local weather patterns from high-resolution data sets.

Enterprise AI and Analytics

10. Generative AI Copilots Throughout the Enterprise

Nvidia has launched a suite of generative AI microservices for enterprises to develop and deploy custom applications directly on its platform while maintaining complete ownership of their intellectual property.

The services, built on the Nvidia CUDA platform, include NIMs microservices for optimized inference and CUDA-X microservices for applications such as data processing, LLM customization, RAG, and more. It offers popular AI models like SDXL, Llama 2, and Gemma, allowing you to use NIMs from your existing SDKs with as little as three lines of code.

This offering is aimed at leveraging Nvidia’s extensive CUDA installed base, spanning across millions of GPUs in various environments including clouds, datacenters, and personal computing devices.

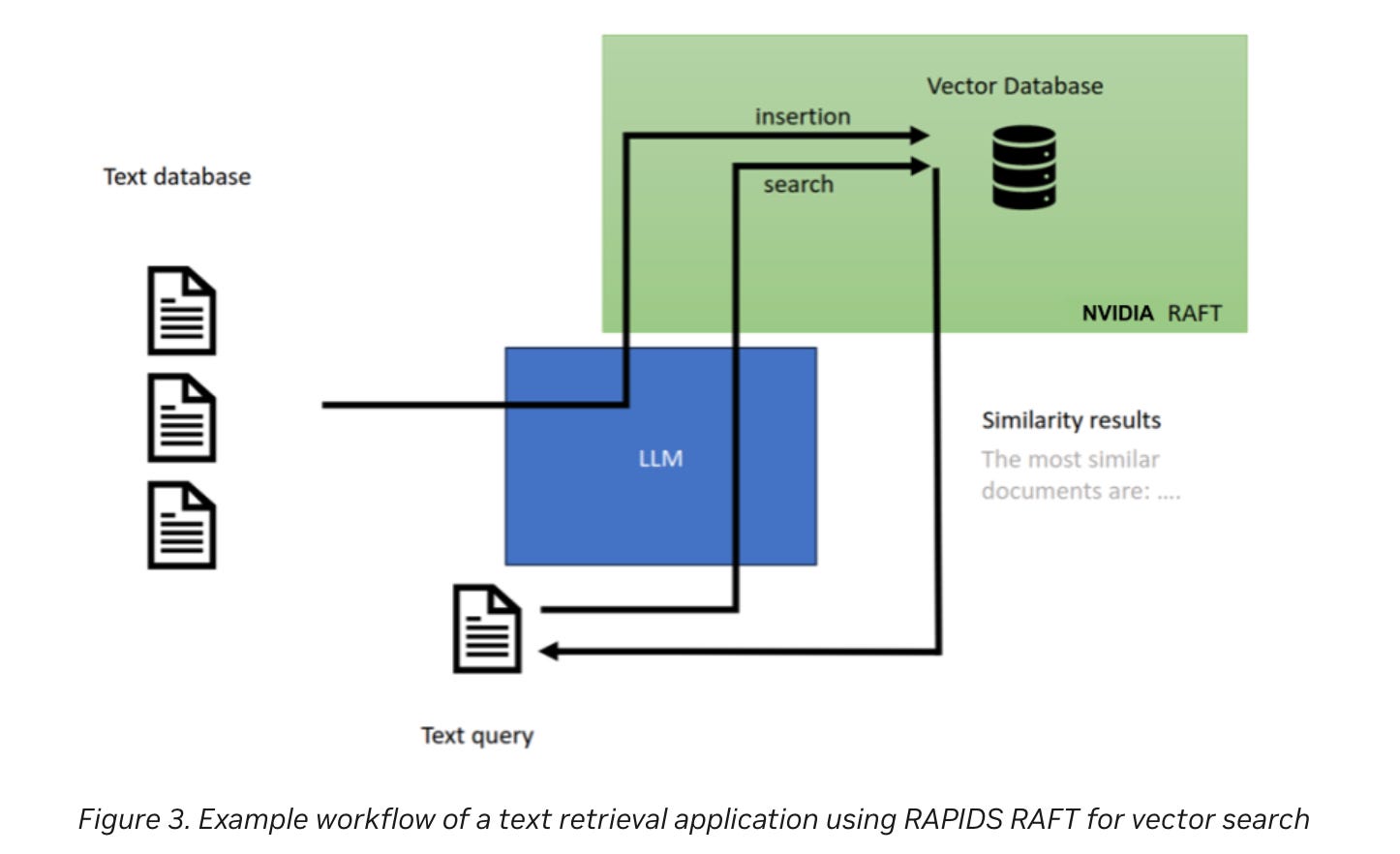

11. Real-Time Vector Similarity Search powered by Nvidia Tech

Kinetica has introduced a vector similarity search engine at the GTC. This engine is unique for its ability to ingest vector embeddings 5x faster than the prior market leader. By leveraging GPU-accelerated analytics, this solution enhances the way enterprises can interact with and glean insights from their operational data, including both textual and quantitative data. It utilizes RAG to integrate up-to-the-minute information from external databases, ensuring that the AI’s responses are as informed and relevant as possible.

The solution is powered by Nvidia NeMo and its computing infrastructure, focusing on two key components: low-latency vector search using Nvidia Rapids Raft technology and the capability for real-time, complex data queries.

Kinetica’s real-time generative AI solution removes the requirement for reindexing vectors before they are available for query. Developers can now enrich their AI applications with detailed, context-aware information without the lag time.

12. Conversational Analytics for Location and Time Data

Navigating through massive datasets, especially those rich in location and time data, has often been a cumbersome task for business users and data scientists alike. They face challenges with complex data exploration tools or mastering SQL queries, slowing down the discovery of insights from large data sets.

HEAVY.AI has introduced HeavyIQ, a GPU-powered tool to simplify data analysis by allowing users to interact with their data through conversational analytics. This enables users to ask natural language questions and quickly generate advanced visualizations.

HeavyIQ stands out for its accuracy, speed, and ease of use. It’s trained on over 60,000 custom training pairs, delivering an accuracy that surpasses GPT-4, with more than 90% accuracy on common text-to-SQL benchmarks.

13. Pura Storage + Nvidia for Optimizing GenAI Apps with RAG

Pure Storage and Nvidia are collaborating to enhance generative AI applications for enterprise use through RAG. By integrating external, specific, and proprietary data sources into LLMs, RAG addresses key challenges such as hallucination and the need for constant retraining, making LLMs more accurate, timely, and relevant.

This collaboration leverages Nvidia’s computing power and Pure Storage’s FlashBlade enterprise storage, supporting a wide array of data-intensive operations from analytics to AI model training.

The partnership also introduces Nvidia NeMo Retriever microservices, aimed at facilitating production-grade AI deployments within the Nvidia AI Enterprise software suite. By providing an efficient and scalable RAG pipeline, enterprises can now process and query vast document repositories in seconds, enabling quicker, more accurate decision-making and insights.

Humanoid Robots and AI in Healthcare

14. Project GR00T for Developing General-Purpose Robots

Nvidia has introduced Project GR00T, a new initiative aimed at developing general-purpose foundation models specifically for humanoid robots. This technology is designed to give robots the ability to understand natural language and mimic human actions, enabling them to learn skills such as coordination and dexterity. This means that robots could soon be better equipped to navigate, adapt, and interact with the real world around them.

Alongside Project GR00T, Nvidia has also announced Jetson Thor, a computer tailored for these humanoid robots. Jetson Thor is built on the Nvidia Thor system-on-a-chip (SoC), featuring Blackwell GPUs and a transformer engine that supports complex AI models like GR00T, optimized for performance, power efficiency, and compactness.

15. AI Foundation Model for Drug Discovery

Nvidia’s BioNemo, a platform for training and deploying AI models for drug discovery applications, now includes new foundation models that can analyze DNA sequences, predict changes in proteins due to drug molecules, and determine cell functions from RNA. These models are accessible as microservices through Nvidia NIMs and will be available on AWS soon.

AI-powered 3D Design and Visualization

16. 3D Generative AI on Shutterstock and Getty

Nvidia Edify, a multimodal architecture for visual generative AI, is venturing into 3D asset generation. It offers developers and visual content providers enhanced creative control over AI-generated images, including 3D objects.

Edify can be tapped into through APIs provided by Shutterstock and Getty Images, now available for early access. API on Shutterstock will let you quickly generate 3D objects for virtual scenes using simple text prompts or images.

Getty Images is enhancing its service with custom fine-tuning capabilities, making it easier for enterprise customers to produce visuals that closely match their brand’s guidelines and style.

17. Text to 3D in under a second with Nvidia’s AI model

Nvidia has just rolled out a new AI model named LATTE3D to whip up 3D shapes from text prompts in < 1 second. These shapes are not only high quality but also formatted for easy integration into various graphics softwares or platforms like Nvidia Omniverse, making them a handy tool for developers, designers, and creatives across the board.

A year prior, it took about an hour to generate 3D visuals of similar quality. The current state of the art before LATTE3D could do it in 10 to 12 seconds. LATTE3D greatly accelerates this process, producing results an order of magnitude faster and making near-real-time text-to-3D generation. The model was initially trained on two datasets: animals and everyday objects. However, its architecture allows for training on other types of data.

AI Brings Virtual Worlds to Life

18. Nvidia brings AI game characters to life

Nvidia launched its digital human technology to create lifelike avatars for games. This toolkit is breathing life into video game characters, giving them voices, animations, and the ability to whip up dialogue on the fly.

The suite of tools includes Nvidia ACE for animating speech, Nvidia NeMo for processing and understanding language, and Nvidia RTX for rendering graphics with realistic lighting effects. Together, these tools create digital characters that can interact with users through AI-powered natural language that mimics real human behavior. Every playthrough brings a unique twist due to the real-time interactions that influence the unfolding narrative.

Nvidia’s technology is also being used for multi-language games. Games like World of Jade Dynasty and Unawake are incorporating Nvidia’s Audio2Face technology to generate realistic facial animations and lip-syncing in both Chinese and English.

Optimizing Resources for AI and Beyond

19. NVIDIA Is Being Pushed to the Edge

Nvidia is teaming up with Edge Impulse to enhance the precision and efficiency of edge ML models, especially for devices with limited resources. Edge ML is a crucial technology that allows running models on devices located at the network’s edge, such as smartphones, sensors, IoT devices, and embedded systems.

This collaboration leverages the strengths of Nvidia’s TAO Toolkit, a platform to create highly accurate, customized, and enterprise-ready vision AI models, and Omniverse alongside Edge Impulse’s platform, including the Blackwell architecture known for its capability to manage massive trillion-parameter ML models.

Customers will be able to develop and deploy powerful ML models more quickly and accurately on a wide range of devices, from IoT sensors to embedded systems. The integration allows for the creation of customized, production-ready applications that are both efficient and privacy-centric, even on the smallest platforms powered by Arm Cortex-based microcontrollers.

20. ClearML Open Source Fractional GPUs for Everyone

ClearML has announced a significant update for the opensource community, unveiling its new free fractional GPU capabilities. This enables the partitioning of Nvidia GPUs for multi-tenancy, allowing for more efficient use of GPU resources across multiple AI workloads.

This release aims to enhance compute utilization for organizations, making it possible to run high-performance computing (HPC) and AI workloads more efficiently and to achieve better return on investment from existing AI infrastructure and GPU investments.

Fractional GPUs represent a breakthrough in computing resource management, enabling the division of a single GPU into multiple smaller, independent units. This technology leverages Nvidia’s time-slicing technology to distribute GPU resources effectively, ensuring that multiple applications can run concurrently on the same physical GPU without interference.

That’s all for today 👋

See you next week with another interesting AI deep dive, where we'll explore fascinating aspects of the latest AI breakthroughs in depth. Can’t wait to share more insightful discussions and discoveries in the world of AI with you.

Click on the subscribe button and be part of the future, today!

📣 Spread the Word: Think your friends and colleagues should be in the know? Click the ‘Share’ button and let them join this exciting adventure into the world of AI. Sharing knowledge is the first step towards innovation!

🔗 Stay Connected: Follow us for updates, sneak peeks, and more. Your journey into the future of AI starts here!

Shubham Saboo - Twitter | LinkedIn ⎸ Unwind AI - Twitter | LinkedIn