Today’s edition is sponsored by Traycer: Grammarly for Code

Get instant feedback on your code as you write. A Visual Studio extension, Traycer identifies potential issues and offers actionable suggestions to improve your code, keeping you productive & confident. It doesn’t interrupt your code flow and pops up only when you need it.

Today’s top AI Highlights:

OpenAI research offers a glimpse inside GPT-4’s thinking

AI-powered bionic arm gives amputees a new level of freedom

Qwen2 is here: Speaks more languages and solves Maths better

HP in talks to acquire the struggling AI Pin maker Humane

& so much more!

Read time: 3 mins

Latest Developments 🌍

16 Million Features Reveal How GPT-4 Thinks 🧠

It’s difficult to understand how LLMs work. We can’t easily peek inside these “black boxes” to see how they arrive at their outputs. This makes it challenging to ensure their safety and reliability, especially as they become more powerful. Now, OpenAI researchers have developed a method to break down the inner workings of GPT-4, offering a glimpse into its internal representations. They’ve extracted 16 million interpretable patterns from GPT-4, giving us a better understanding of how this sophisticated AI model thinks.

Key Highlights:

Breaking Down GPT-4: The researchers used a technique called sparse autoencoders to decompose GPT-4’s internal representations. These autoencoders identified a massive number of “features” – patterns of activity that represent concepts.

Scaling to Millions of Features: The new methods allowed the researchers to scale their sparse autoencoders to 16 million features on GPT-4, a major step up from previous efforts. This enabled them to capture a wider range of concepts within the model.

Understanding the Inner Workings: The researchers identified many interpretable features, including human imperfection, price increases, training logs, rhetorical questions, and algebraic rings. These features offer insights into the way GPT-4 processes language and information.

Sharing Knowledge: The research team has made their code, autoencoders, and a feature visualizer available to the public. This allows other researchers to explore and build upon their findings, furthering our understanding of LLMs and their capabilities.
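To make the idea of a sparse autoencoder more concrete, here is a minimal sketch of the forward pass only. It assumes a top-k sparsity rule (one common choice; the summary above does not say which mechanism the researchers used), random untrained weights, and toy dimensions. The class and function names are hypothetical and are not taken from OpenAI's released code.

```python
import random


def top_k_sparse(values, k):
    """Keep the k largest entries (clipped at zero) and zero out the rest."""
    keep = sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]
    out = [0.0] * len(values)
    for i in keep:
        out[i] = max(values[i], 0.0)
    return out


def matvec(matrix, vec):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


class TinySparseAutoencoder:
    """Toy autoencoder: a wide, mostly-zero feature layer between encode and decode."""

    def __init__(self, d_model, n_features, k, seed=0):
        rng = random.Random(seed)
        self.k = k
        # Encoder: one row of weights per feature (n_features x d_model).
        self.w_enc = [[rng.gauss(0, 0.1) for _ in range(d_model)]
                      for _ in range(n_features)]
        # Decoder: initialised as the transpose of the encoder (an assumption).
        self.w_dec = [[self.w_enc[f][d] for f in range(n_features)]
                      for d in range(d_model)]

    def encode(self, activation):
        # Each output entry is one "feature"; at most k of them are non-zero.
        return top_k_sparse(matvec(self.w_enc, activation), self.k)

    def decode(self, features):
        # Rebuild an approximation of the original activation vector.
        return matvec(self.w_dec, features)


sae = TinySparseAutoencoder(d_model=8, n_features=32, k=4)
activation = [0.3, -1.2, 0.7, 0.0, 0.5, -0.4, 1.1, 0.2]  # stand-in for a model activation
features = sae.encode(activation)
reconstruction = sae.decode(features)
```

In a real setup the weights would be trained so that `reconstruction` stays close to `activation`, and the few features that fire on a given input are the ones researchers then try to interpret.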

Future of Prosthetics is Here, It’s Mind-Controlled 🦾

A California-based company, Atom Limbs, is developing a new bionic arm powered by AI that would give amputees a level of functionality previously unimaginable. This non-invasive arm uses advanced sensors and machine learning to interpret brain signals and control movement, providing users with near-human dexterity, individual finger control, and even a basic sense of touch. The arm attaches securely via a strengthened sportswear-style vest that evenly distributes weight, making it more comfortable and less cumbersome than other bionic options.

Key Highlights:

Adaptive Technology: The arm’s advanced AI technology allows it to learn and adapt to the user’s movements. Users can “assign” their residual muscles to specific movements, allowing them to control the arm’s hand, wrist, and elbow with precision. This intuitive interface reduces the learning curve and enhances the overall usability of the device.

No Surgery Required: It connects to the wearer’s residual limb through a non-invasive system, eliminating the need for surgery or implants. This non-invasive design also makes it easier for users to adjust the arm and adapt it to their changing needs.

Lower Cost: The estimated price tag of $20,000 (£15,000) is still significant, but it is lower than that of many other bionic products on the market, which could make the technology accessible to a wider range of amputees.

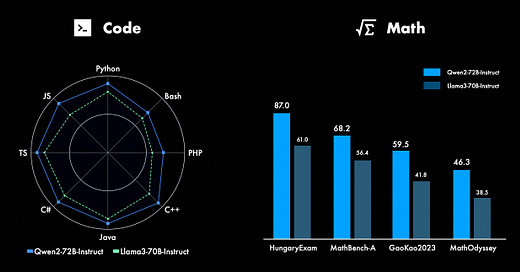

Qwen2 Outperforms Llama 3 Across All Benchmarks 🥇

The Qwen team has released the latest version of their LLM suite, Qwen2, featuring several key improvements. Qwen2 offers a range of models, from the compact 0.5B parameter version to the powerful 72B parameter model. This release expands on Qwen’s multilingual capabilities, now supporting 27 additional languages beyond English and Chinese. Qwen2 also demonstrates significantly enhanced performance in coding and mathematical tasks, a key focus area for the team.

Key Highlights:

Multilingual Capabilities: Qwen2 incorporates training data from 27 additional languages, including popular languages from Europe, Asia, and the Middle East.

Strong Performance: The Qwen2 72B model outperforms Llama 3 70B and Mixtral 8x22B across all standard benchmarks including MMLU, GSM8K, and HumanEval. The models perform especially well on math and coding tasks.

Longer Context: Qwen2-7B-Instruct and Qwen2-72B-Instruct support longer context lengths, up to 128K tokens. These models are particularly effective in handling long document tasks, such as information extraction, as demonstrated in the “Needle in a Haystack” (NIAH) benchmark.

Safety Focus: Qwen2 models, specifically the instruction-tuned versions, have been designed with safety in mind. They demonstrate competitive performance against other models in handling multilingual prompts and minimizing harmful outputs.
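For readers unfamiliar with the “Needle in a Haystack” test mentioned under Longer Context, the sketch below shows the general idea: hide one fact at a chosen depth in a long filler document, then check whether the model's answer contains it. The helper names, the filler text, and the simple substring check are all illustrative assumptions, not the Qwen team's actual evaluation code.

```python
def build_niah_prompt(filler_sentences, needle, depth, question):
    """Insert `needle` at a relative depth (0.0 = start, 1.0 = end) of the filler text."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    position = round(depth * len(filler_sentences))
    document = filler_sentences[:position] + [needle] + filler_sentences[position:]
    return " ".join(document) + "\n\nQuestion: " + question


def is_retrieved(model_answer, expected):
    """Crude scoring: did the answer mention the hidden fact at all?"""
    return expected.lower() in model_answer.lower()


filler = ["Filler sentence %d." % i for i in range(10)]
prompt = build_niah_prompt(
    filler,
    needle="The secret code is 7421.",
    depth=0.5,
    question="What is the secret code?",
)
```

A full run would repeat this across many document lengths and depths, send each prompt to the model, and score the answers with something like `is_retrieved`.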

HP in the Running to Acquire Humane for $1 Billion 💸

Humane, the company behind the ambitious AI Pin, is reportedly looking for a buyer and is in talks with HP about a potential sale, in a deal that could be worth over $1 billion.

The AI Pin’s journey has been fraught with challenges. Reviews criticized even the Pin’s basic functionality. Internal company emails revealed a culture of silencing dissent, with a senior software engineer reportedly fired for raising concerns that the device was being rushed to launch. The company recently informed its customers that the AI Pin’s charging case poses a fire safety risk. Executives reportedly even had to chill the AI Pin with ice packs.

It seems Humane’s ambition to revolutionize wearable computing may have outpaced its ability to deliver a genuinely useful and polished product.

😍 Enjoying it so far? Share it with your friends!

Tools of the Trade ⚒️

Inspectus: A versatile visualization tool for LLMs. It runs smoothly in Jupyter notebooks via an easy-to-use Python API. Inspectus provides multiple views, offering diverse insights into language model behaviors.

Archie AI: Transform boring written content into interactive conversations for increasing user engagement and revenue. It boosts ad impressions, subscriber numbers, and return visitors by making content more engaging and personalized.

AirNotes: AI that helps you manage your notes neatly by understanding text, audio, and picture inputs. You can use it on Telegram (@airnotesbot) to categorize information like contact details, reading lists, language studies, money tracking, and calorie records.

Awesome LLM Apps: Build awesome LLM apps using RAG for interacting with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple texts. These apps will let you retrieve information, engage in chat, and extract insights directly from content on these platforms.

Hot Takes 🔥

All computer science departments are becoming machine learning departments. (Naveen Rao) ~ Pedro Domingos

The current upper class will fall. Connections. Degrees. Prestige. Perception. Narrative control. The news. Their systems are failing.

A new upper class will rise. Rationality. Engineering mindset. Information filtering. Scientific truth. The Internet. Our systems are working. ~ George Hotz

Meme of the Day 🤡

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, and your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!