It was yet another thrilling week in AI, with advancements that push the limits of what the technology can achieve.

Here are 10 AI breakthroughs that you can’t afford to miss 🧵👇

Siri + ChatGPT will be the Ultimate AI Assistant 📱

Apple is reportedly planning a significant upgrade that would let Siri control individual app functions with voice commands. Powered by OpenAI’s GPT models or Google’s Gemini, Siri would be able to analyze user activity and suggest voice-controllable features, initially focusing on Apple’s own apps before expanding to others. Users would also be able to chain multiple commands in a single request, such as summarizing a meeting and sending the summary to a colleague.

Nvidia Reveals Blackwell’s Successor: A New GPU Platform 🌟

At Computex 2024 in Taipei, Nvidia CEO Jensen Huang revealed the company’s GPU architecture roadmap through 2027. Noting that Nvidia is now on a one-year release rhythm, he shared only a few details about the platforms coming over the next few years: Blackwell Ultra in 2025, the all-new Rubin platform in 2026, and Rubin Ultra in 2027. These platforms are still under development and will be backward compatible with existing software.

The Netflix of AI - Watch and Create TV episodes 🍿

The Simulation, the company behind last year’s viral South Park AI episode generator, has launched Showrunner, a platform that lets you create your own TV shows with AI. Showrunner is designed for anyone, even people with no technical or professional experience, and supports styles such as anime, cutout animation, and 3D animation. It’s like the “Netflix of AI,” but it’s not just about watching: it’s about making.

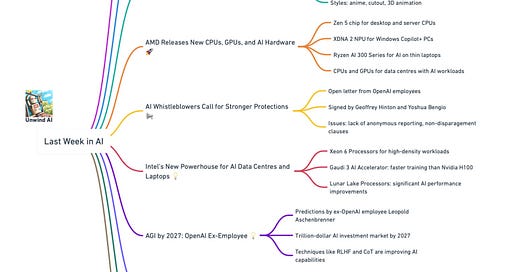

AMD Releases New CPUs, GPUs, and AI Hardware 🚀

AMD, known for its high-performance computing products, unveiled a slew of major updates at Computex 2024 as it works to close the gap with Nvidia in the rapidly evolving AI and computing landscape. Here are the key announcements:

Zen 5 architecture: Powers both desktop and server CPUs and delivers a significant performance leap.

Ryzen 9 9950X CPU: AMD claims this flagship of the Ryzen 9000 Series is the world’s fastest consumer CPU, designed for demanding tasks.

XDNA 2 NPU: Designed to power Windows Copilot+ PCs, delivering 50 TOPS of AI compute for advanced AI features.

Ryzen AI 300 Series (3rd Gen Ryzen AI): Specifically designed to deliver powerful AI performance in thin laptops.

5th Gen EPYC CPU “Turin”: Server-grade CPUs targeting the high-performance computing market.

MI325X and MI350X Next-Gen GPUs: Designed for demanding AI workloads in data centers.

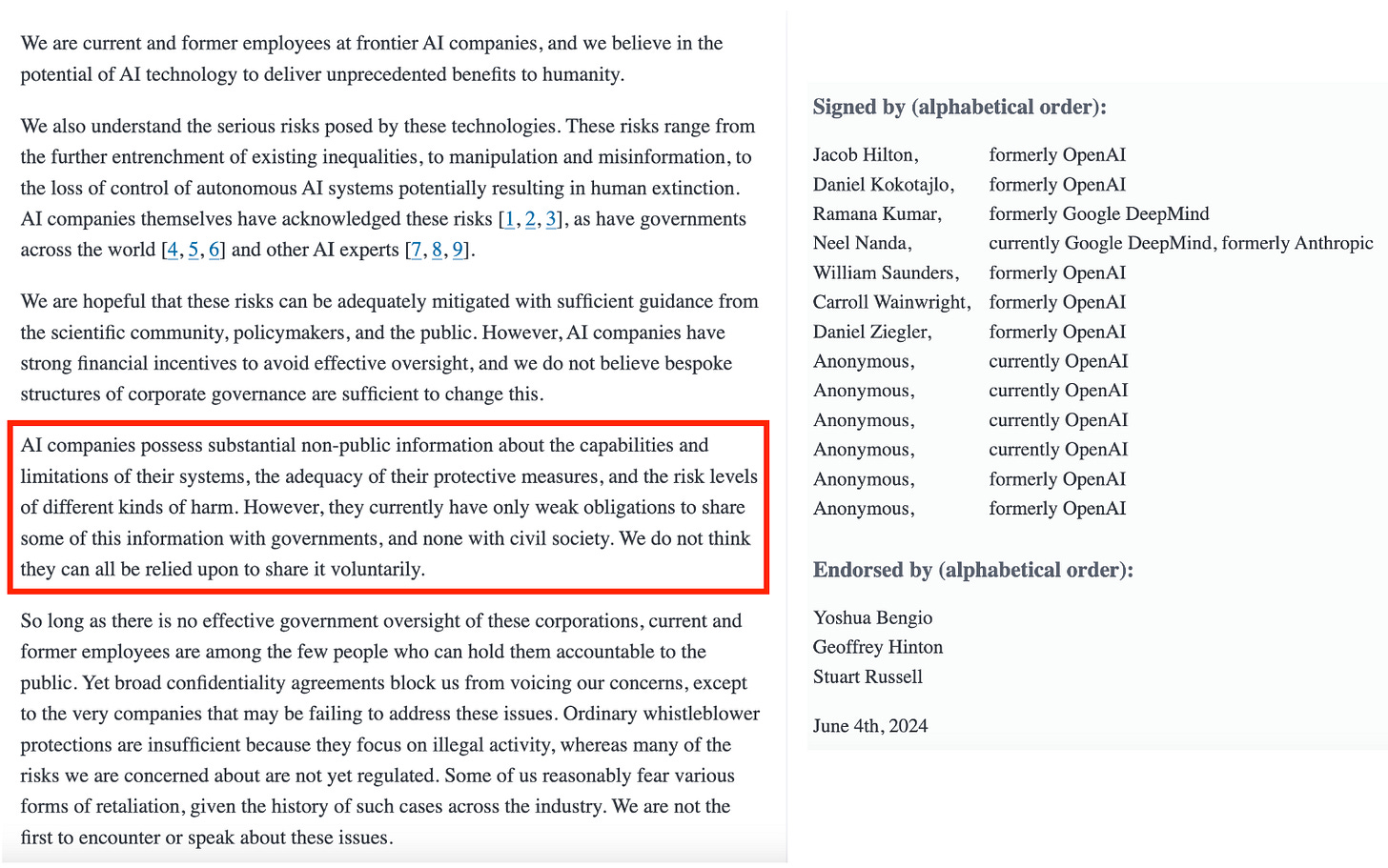

AI Whistleblowers Call for Stronger Protections 📢

As OpenAI faces criticism over inadequate AI safety measures, several current and former OpenAI employees have published an open letter calling for stronger whistleblower protections. Prominent AI researchers Geoffrey Hinton and Yoshua Bengio endorsed the letter, which argues that current protections only cover illegal activities and not broader AI safety concerns. The letter highlights issues such as companies discouraging criticism, the lack of anonymous reporting channels, and non-disparagement clauses that silence employees.

Intel’s New Powerhouse for AI Data Centres and Laptops 💡

Intel made a splash at Computex 2024, announcing a slate of new AI technologies aimed at boosting performance and lowering costs across the entire AI ecosystem, from the data center to the edge and the PC. The releases give developers and businesses more tools to build and deploy powerful AI solutions and position Intel to compete strongly with AMD. Here are the major releases:

Xeon 6 Processors: Featuring the E-core Sierra Forest chips for high-density workloads, with up to 3:1 rack consolidation, up to 4.2x rack-level performance, and up to 2.6x performance per watt compared to 2nd Gen Xeon processors.

Gaudi 3 AI Accelerator: Intel claims up to 40% faster time-to-train for GenAI models than equivalent Nvidia H100 GPU clusters, and up to 15% faster training throughput for the Llama 2 70B model.

Lunar Lake Processors: Equipped with a 4th-generation Intel NPU and an Xe2-powered GPU for significant AI performance gains; expected to ship in Q3 2024.

AGI Will be Achieved by 2027: OpenAI Ex-Employee 💡

In a podcast with Dwarkesh Patel, former OpenAI employee turned investor Leopold Aschenbrenner spoke at length about the burgeoning AI landscape. They discussed the rapid scaling of AI, the “trillion-dollar cluster” for the development of superintelligence, and the dangers if AGI is not controlled. Here are some key takeaways:

AI is evolving into an “industrial process,” needing significant infrastructure investments.

By 2026, training clusters will need a gigawatt of power; by 2030, they will need 100 gigawatts, costing trillions of dollars and consuming 20% of US electricity.

This expansion will drive a $1 trillion AI investment market by 2027, necessitating new power plants and fabrication facilities.

Aschenbrenner’s blog series “Situational Awareness” argues that AI is advancing rapidly toward AGI by 2027 and that AI’s impact is widely underestimated.

Techniques like RLHF and chain-of-thought prompting are significantly improving AI capabilities and could make AI systems practical and useful enough to work as coworkers by 2027.
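The 2030 power figure can be sanity-checked with simple arithmetic. Below is a minimal Python sketch, assuming the cluster runs continuously at full load and that US annual electricity generation is roughly 4,200 TWh (an approximate figure we supply, not one from the podcast).

```python
# Rough check of the "100 GW is about 20% of US electricity" claim.
# ASSUMPTION: US annual electricity generation of roughly 4,200 TWh
# (approximate; not a number from the podcast).
HOURS_PER_YEAR = 24 * 365  # 8,760 hours
US_ANNUAL_TWH = 4_200      # assumed, approximate

def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Energy used in one year by a load of power_gw at the given utilization."""
    # GW * hours = GWh; divide by 1,000 to convert GWh to TWh
    return power_gw * HOURS_PER_YEAR * utilization / 1_000

for year, gw in [(2026, 1), (2030, 100)]:
    twh = annual_energy_twh(gw)
    share = twh / US_ANNUAL_TWH
    print(f"{year}: {gw:>3} GW -> {twh:,.1f} TWh/yr, about {share:.1%} of US generation")
```

Under these assumptions, a 100 GW cluster running all year draws about 876 TWh, roughly a fifth of US generation, which is in line with the 20% figure. Lower utilization would shrink the share proportionally.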

First Large-scale Foundation Model of the Atmosphere 🌪️

Microsoft has released “Aurora,” the world’s first large-scale foundation model for atmospheric forecasting, with 1.3 billion parameters. Trained on over a million hours of diverse weather and climate data, Aurora addresses limitations in current weather prediction models and improves the accuracy of forecasts for extreme events. The model offers a computational speed-up of approximately 5,000 times over ECMWF’s Integrated Forecasting System (IFS) and outperforms leading models such as GraphCast and IFS HRES across various tasks and resolutions.

Mistral AI Launches Fine-tuning API and SDK 👩‍🔧

Mistral AI has launched a fine-tuning API and SDK for customizing AI models on its platform, La Plateforme. The open-source SDK, based on the LoRA (Low-Rank Adaptation) training paradigm, lets developers fine-tune models on their own infrastructure, while La Plateforme also offers serverless fine-tuning for those who don’t want to manage infrastructure. The services currently support Mistral 7B and Mistral Small, promise cost-effective model customization, and are planned to support more models in the future.
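Why LoRA makes fine-tuning cheap is easy to see in code. The pretrained weight W stays frozen, and only two small matrices A and B are trained, with the effective weight being W + (alpha / r) · B·A. The following is a minimal pure-Python sketch of that idea, not Mistral’s SDK; the class name `LoRALinear`, the helper functions, and the example sizes are hypothetical choices for illustration.

```python
# Minimal LoRA sketch in pure Python (illustrative; not Mistral's SDK).
# Frozen weight W (d_out x d_in) is adapted as W + (alpha / r) * B @ A,
# where only A (r x d_in) and B (d_out x r) would be trained.
import random

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

class LoRALinear:  # hypothetical name
    def __init__(self, W, r=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        self.W = W  # frozen pretrained weight, never updated
        d_out, d_in = len(W), len(W[0])
        self.scale = alpha / r
        # A starts with small random values; B starts at zero,
        # so the adapter has no effect until training updates it.
        self.A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))  # low-rank path: x -> r -> d_out
        return [b + self.scale * d for b, d in zip(base, delta)]

    def merged_weight(self):
        """Fold the adapter into one matrix so inference costs nothing extra."""
        BA = matmul(self.B, self.A)
        return [[w + self.scale * ba for w, ba in zip(wr, bar)]
                for wr, bar in zip(self.W, BA)]

def trainable_params(d_out, d_in, r):
    """Return (full fine-tuning params, LoRA params) for one weight matrix."""
    return d_out * d_in, r * (d_in + d_out)
```

For a hypothetical 4096 × 4096 projection with rank r = 8, `trainable_params(4096, 4096, 8)` returns `(16777216, 65536)`: the adapter trains about 0.4% of the parameters that full fine-tuning of that matrix would touch.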

Meta’s New AI Model to Translate 200 Languages 📚

Meta researchers have developed an AI model, “No Language Left Behind” (NLLB-200), which translates between 200 languages, including many low-resource ones. The model uses a sparsely gated Mixture of Experts (MoE) architecture and achieves a 44% improvement in translation quality over previous systems. NLLB-200 supports three times as many low-resource languages as high-resource ones and uses new data mining techniques to enhance its training datasets. The team also created the FLORES-200 benchmark for evaluating translation quality, and all resources are available for non-commercial use.
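The core idea of a sparse Mixture of Experts fits in a few lines. A gate scores every expert, only the top-k experts actually run, and their outputs are mixed using gate probabilities renormalized over the chosen experts. This is a minimal pure-Python illustration, not NLLB-200’s implementation; the linear gate, k = 2, and the toy experts are assumptions chosen for clarity.

```python
# Minimal sketch of sparse Mixture-of-Experts routing with top-k gating.
# Illustrative only: NLLB-200's real gate, expert count, and k are not shown here.
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, k=2):
    """Route input x to the top-k experts and mix their outputs.

    x: input vector (list of floats)
    gate_weights: one weight vector per expert; gate score = dot(w, x)
    experts: callables mapping a vector to a vector of the same length
    Returns (mixed output, indices of the experts that ran).
    """
    scores = [sum(w * xi for w, xi in zip(wv, x)) for wv in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    probs = softmax([scores[i] for i in top])  # renormalize over chosen experts
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)  # only k experts execute: this is the "sparse" part
        out = [o + p * yi for o, yi in zip(out, y)]
    return out, top

# Toy usage: four experts that each scale the input by a different factor.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, -1.0]]
out, chosen = moe_forward([2.0, 1.0], gate_weights, experts, k=2)
```

In the toy run, the gate scores are 2.0, 1.0, 1.5, and -3.0, so only experts 0 and 2 execute. This is why MoE models can grow their total parameter count without a matching increase in per-token compute.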

Which of these AI developments are you most excited about, and why?

Tell us in the comments below ⬇️

That’s all for today 👋

Stay tuned for another week of innovation and discovery as AI continues to evolve at a staggering pace. Don’t miss out on the developments – join us next week for more insights into the AI revolution!

Click on the subscribe button and be part of the future, today!

📣 Spread the Word: Think your friends and colleagues should be in the know? Click the ‘Share’ button and let them join this exciting adventure into the world of AI. Sharing knowledge is the first step towards innovation!

🔗 Stay Connected: Follow us for AI updates, sneak peeks, and more. Your journey into the future of AI starts here!

Shubham Saboo - Twitter | LinkedIn | Unwind AI - Twitter | LinkedIn