It was another thrilling week in AI, with advancements that push the limits of what the technology can achieve.

Here are 10 AI breakthroughs that you can’t afford to miss 🧵👇

Evaluate RAG Apps with Personalized Evaluation Models 📋

LastMile AI has released RAG Workbench in beta to help developers evaluate, debug, and optimize their Retrieval-Augmented Generation (RAG) applications. The tool provides deeper insight into a RAG app’s performance and offers a rich debugging experience.

The best part is it allows you to tailor your evaluations to your specific needs by using your own data to fine-tune evaluators. This ensures you’re measuring what really matters, not just generic metrics.

Microsoft Releases New Vision Language Models 👁️

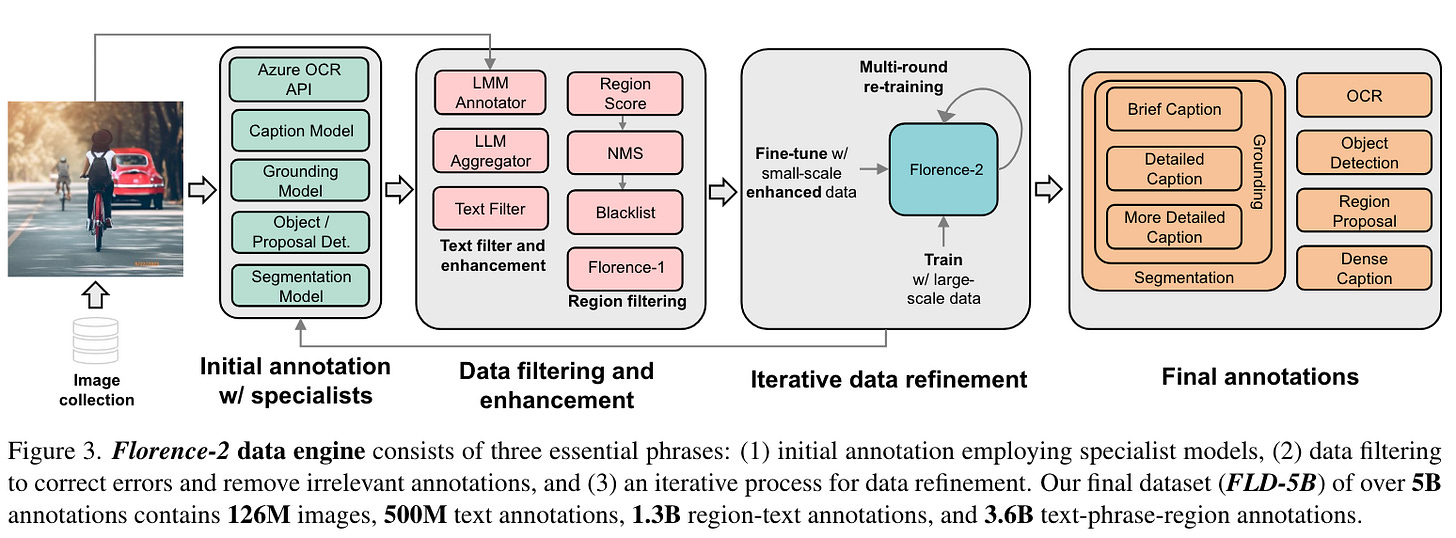

Microsoft has released Florence-2, a new vision foundation model that can handle a wide range of computer vision and vision-language tasks. Unlike many existing models, Florence-2 can tackle these tasks with simple instructions and understand complex visual information.

This was made possible by FLD-5B, a massive dataset containing 5.4 billion visual annotations on 126 million images. Microsoft has made the Florence-2 models publicly available, so researchers and developers can explore their capabilities.
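
To put those dataset figures in perspective, here is a quick back-of-the-envelope calculation of the average annotation density. It is only arithmetic on the two numbers quoted above; the variable names are illustrative.

```python
# Figures quoted above for the FLD-5B dataset.
total_annotations = 5.4e9   # 5.4 billion visual annotations
total_images = 126e6        # 126 million images

# Average number of annotations attached to each image.
annotations_per_image = total_annotations / total_images
print(f"~{annotations_per_image:.1f} annotations per image")  # ~42.9 annotations per image
```

In other words, each image carries roughly 43 annotations on average.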

Open-source LLM + RAG Fine-tuning for the Best AI Code Assistant 🧠

The Together AI team has built a new personalized code assistant by combining Mistral 7B Instruct v0.2, an open-source LLM, with Retrieval-Augmented Generation (RAG) fine-tuning. This approach tackles common shortcomings of LLMs in code generation, such as producing inaccurate or outdated code. The RAG fine-tuned models demonstrated significant performance improvements over existing models like Claude 3 Opus and GPT-4o, at a much lower cost.

LLMs Discover Better Ways to Train Themselves 🔨

Researchers at Sakana AI have used LLMs to invent better ways to train LLMs, a step toward automating AI research. This approach, dubbed ‘LLM²,’ uses LLMs to generate hypotheses and write code, effectively turning them into AI researchers.

In a recent open-source project, the researchers prompted LLMs to propose and refine new preference optimization algorithms. The result was DiscoPOP, a new state-of-the-art algorithm that significantly outperforms existing methods at aligning LLMs with human preferences.
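
For readers unfamiliar with preference optimization, here is a minimal sketch of the kind of loss function such algorithms define. It shows the standard Direct Preference Optimization (DPO) loss, a widely used baseline, and not DiscoPOP itself. The function names and the `beta=0.1` default are illustrative assumptions.

```python
import math

def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))

def dpo_loss(policy_chosen_logp: float,
             policy_rejected_logp: float,
             ref_chosen_logp: float,
             ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one (chosen, rejected) response pair.

    Inputs are total log-probabilities of each response under the
    policy being trained and under a frozen reference model.
    beta (assumed default) controls how strongly the policy is
    pushed away from the reference.
    """
    policy_logratio = policy_chosen_logp - policy_rejected_logp
    ref_logratio = ref_chosen_logp - ref_rejected_logp
    # Positive when the policy prefers the chosen response more
    # strongly than the reference model does.
    logits = policy_logratio - ref_logratio
    return -log_sigmoid(beta * logits)

# The loss shrinks as the policy favors the human-preferred response.
print(dpo_loss(-1.0, -3.0, -2.0, -2.0) < dpo_loss(-3.0, -1.0, -2.0, -2.0))
```

A new preference optimization algorithm typically replaces the final line of `dpo_loss` with a different function of `logits`, which is the sort of change an LLM can propose as code.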

Anthropic Introduces Projects in Claude.ai 📚

Anthropic has introduced Projects, a new Claude.ai feature that lets users organize their chats. It enables teams to collaborate more effectively with Claude by bringing together relevant information, chat activity, and insights in one centralized location.

Projects offer a shared workspace for teams to work on specific tasks or projects. Each Project has a context window of 200k tokens so users can add all of the relevant documents, code, and insights to enhance Claude’s effectiveness.

The Next-gen of Training Sets for Language Models ⚙️

A team of research institutions has made DCLM-POOL, a massive pool of 240 trillion tokens extracted from Common Crawl, freely available. It is part of DataComp for Language Models (DCLM), a standardized platform for dataset experiments that lets researchers compare data curation techniques and their impact on model performance.

Alongside the corpus, DCLM offers open-source software for processing large datasets and a multi-scale experimental design that accommodates researchers with varying compute budgets.

AI Chips Startup Etched Bets Big on Transformers 🔥

AI chip startup Etched has introduced Sohu, which it bills as the world’s first transformer ASIC, a chip designed specifically for transformer models. Etched claims a throughput of 500,000 tokens per second on Llama 70B, which would make Sohu significantly faster than the latest GPUs. According to the company, a single 8x Sohu server can replace 160 H100 GPUs, which would make it far more cost-effective. Etched supports an open software ecosystem, allowing developers to customize and optimize transformer models for Sohu.
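
The claimed figures imply a simple ratio, sketched below. This is arithmetic on Etched’s own numbers and not an independent benchmark. One assumption is labeled in the code: the 500,000 tokens-per-second figure is treated as the throughput of the full 8x Sohu server.

```python
# Figures as claimed by Etched and quoted above.
sohu_chips_per_server = 8
h100s_replaced = 160
# ASSUMPTION: the throughput figure refers to the whole 8x Sohu server.
server_tokens_per_second = 500_000

# How many H100s each Sohu chip is claimed to stand in for.
h100s_per_sohu = h100s_replaced / sohu_chips_per_server

# Implied per-GPU throughput if 160 H100s are needed to match one server.
implied_h100_tokens_per_second = server_tokens_per_second / h100s_replaced

print(h100s_per_sohu)                  # 20.0
print(implied_h100_tokens_per_second)  # 3125.0
```

So the claim amounts to one Sohu chip doing the work of 20 H100s on this workload.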

Gemini With 2 Million Token Context Window Now Available 🌟

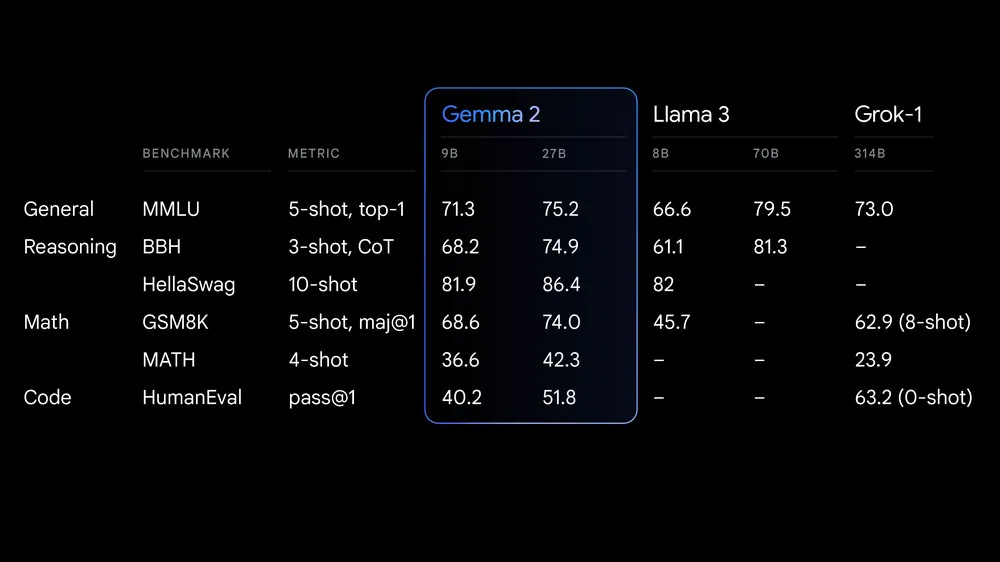

At last month’s I/O event, Google announced new additions to its lightweight open Gemma models, a 2-million-token context window for Gemini 1.5 Pro, and context caching in the Gemini API. All three are now available.

Gemma 2 has been released in two sizes, 9B and 27B parameters, with a 2B-parameter model to follow soon. These models outperform the first generation and other open models in their size category, and compete with models twice their size. Gemma 2 is now available in Google AI Studio, and its weights can be downloaded from Kaggle and Hugging Face.

OpenAI Uses GPT-4 to Identify Coding Errors of GPT-4 🙅‍♀️

OpenAI has developed a “critic” model to help humans evaluate AI-generated code more effectively. The model, called CriticGPT, is based on GPT-4 and has been trained to catch errors in ChatGPT’s code output. This approach to scalable oversight could help ensure the reliability of AI systems.

CriticGPT reviews AI-generated code and provides natural language critiques that highlight errors. On code containing naturally occurring LLM errors, its critiques were preferred over human critiques in 63% of cases, and it detected more bugs than human contractors.

Meta’s LLM Compiler Models for Code Optimization 🧑‍💻

Meta has released LLM Compiler, a family of models built on Code Llama with additional code optimization and compiler capabilities. The models are available in two sizes, 7B and 13B.

LLM Compiler models can emulate compiler functions, predict optimal passes for code size, and disassemble code into its intermediate representation, achieving state-of-the-art results. Meta also offers fine-tuned versions of these models.

Which of the above AI developments are you most excited about, and why?

Tell us in the comments below ⬇️

That’s all for today 👋

Stay tuned for another week of innovation and discovery as AI continues to evolve at a staggering pace. Don’t miss out on the developments – join us next week for more insights into the AI revolution!

🔗 Stay Connected: Follow us for AI updates, sneak peeks, and more. Your journey into the future of AI starts here!

Shubham Saboo - Twitter | LinkedIn ⎸ Unwind AI - Twitter | LinkedIn
