Intel, Google & Arm unite to challenge Nvidia

PLUS: OpenAI released Sora-generated videos by artists, AI journalist agent

Today’s top AI Highlights:

Big tech companies Intel, Google, and Arm form an alliance to challenge NVIDIA

First impressions of Sora - What users have to say

Stealing information from black-box transformer models

Consequences of using generative AI by students

Open-source AI journalist agent that writes with citations

& so much more!

Read time: 3 mins

Latest Developments 🌍

Big Techs Form an Alliance to Challenge NVIDIA 🤝

The $2 trillion AI company Nvidia is going to face a challenge from a coalition of major tech companies including Intel, Google, Arm, Qualcomm, and Samsung. These companies, under the name The Unified Acceleration Foundation (UXL), are collaborating on an open-source software suite to dismantle the proprietary software barriers that currently bind AI developers to Nvidia’s technology.

The initiative focuses on enabling AI code to run universally, freeing developers from the constraints of using specific coding languages, code bases, and other tools tied to using a specific architecture, such as Nvidia’s CUDA platform.

Nvidia’s Hopper GPUs, which require Nvidia’s CUDA, have become the benchmark for computing power, far superior to anything produced by other chipmakers. This has driven huge demand for the hardware and a consequent scarcity, which other companies have been trying to address by developing alternatives. While UXL says the project will initially aim to open up options for AI apps and high-performance computing across different hardware platforms, the group plans to eventually support Nvidia’s hardware and code too. (Source)

How Good is Sora - Directly from the Users 💫

OpenAI has been testing its text-to-video AI model, Sora, by giving access to some visual artists, designers, creative directors, and filmmakers to understand how Sora can aid their creative work. While some in the community strongly resist the use of AI because they fear losing jobs and having their art plagiarized, others have warmly received Sora for its ability to foster new levels of creativity and idea realization.

In a blog post, the company has shared some of the feedback given by Sora users. They appreciate how it allows for exploring beyond the boundaries of the real into the surreal and impossible. Sora is free from real-world constraints like time, money, and permissions, and it can break traditional physical laws and thought conventions, which has allowed users to finally pursue long-held wild ideas that were previously technically impossible. Do check out the blog and watch the videos the users have created. They are mind-blowing!

“Sora is at its most powerful when you’re not replicating the old but bringing to life new and impossible ideas we would have otherwise never had the opportunity to see.”

- Paul Trillo, Director

Peeking inside the Black-box Transformer Models 👀

While AI models like ChatGPT and Google’s PaLM-2 are powering a wide range of applications, the specifics of their inner workings remain tightly under wraps. Companies keep these details secret to maintain a competitive edge and because of the security concerns that might come with revealing too much about the models. However, researchers using a novel model-stealing attack peeked into these black-box models and extracted details with startling precision, at a cost as low as $20!

Key Highlights:

The study takes a top-down approach, targeting the last layer of the models. It notes that while these models’ weights and internal details are not publicly accessible, the models themselves are exposed via APIs. By smartly querying the API, the team could recover the complete embedding projection layer of a transformer language model.

Stealing this last layer matters because it reveals the width of the model, which is often correlated with its total parameter count. Even stealing a small part of the model makes it less of a “black box.” Lastly, the ability to steal even the last layer shows that production-level language models aren’t fully secure against extraction.

This attack is effective against models whose APIs expose full logprobs, or a “logit bias”, like PaLM-2 and GPT-4.

For a cost of under $20, the team managed to extract the entire projection matrix of OpenAI’s Ada and Babbage models, revealing their hidden dimensions to be 1024 and 2048, respectively. The same technique was used on the GPT-3.5 Turbo model to discover its exact hidden dimension size, and the team estimates that extracting its full projection matrix would cost under $2,000.

Following responsible disclosure of their technique, OpenAI and Google took swift action to implement new defenses, adjusting their APIs to prevent such attacks in the future.
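For the curious, here is a rough sketch of why the last layer leaks the model’s width. It is a toy simulation, not the researchers’ code: there is no real API involved, and the names `estimate_hidden_dim`, `W`, and `H` are made up for illustration. The assumption is that each logit vector equals a projection matrix times a final hidden state, so a stack of logit vectors from many queries can never have a rank higher than the hidden dimension. Counting the non-negligible singular values of that stack then gives the width. Real APIs expose only partial logprobs or a logit bias, so a practical attack needs extra steps that are not shown here.

```python
import numpy as np


def estimate_hidden_dim(logits: np.ndarray, rel_tol: float = 1e-8) -> int:
    """Estimate the rank of a (n_queries, vocab_size) logit matrix.

    Singular values below rel_tol * (largest singular value) are treated
    as numerical noise. The threshold is an assumption for this toy setup.
    """
    singular_values = np.linalg.svd(logits, compute_uv=False)
    return int(np.sum(singular_values > rel_tol * singular_values[0]))


# Toy stand-in for a language model's last layer (all values are synthetic).
rng = np.random.default_rng(0)
hidden_dim, vocab_size, n_queries = 64, 1000, 200

W = rng.standard_normal((vocab_size, hidden_dim))  # hypothetical projection matrix
H = rng.standard_normal((hidden_dim, n_queries))   # hypothetical final hidden states

# One row of logits per "query"; more queries than the hidden dimension.
logits = (W @ H).T

print(estimate_hidden_dim(logits))  # recovers the toy model's width
```

The key design point is to collect more logit vectors than the suspected hidden dimension; with fewer queries, the rank is capped by the number of queries instead of by the model’s width.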

Consequences of generative AI use among students 🙇

Imagine it’s already night and you need an essay written by tomorrow morning. What do you do? In a scramble for help, you turn to ChatGPT, hoping for a shortcut through your academic workload. It’s a scenario many students find themselves in, either occasionally or daily! This prompted researchers to take a closer look at how and why students use generative AI tools like ChatGPT. Based on a sample of around 500 university students, their findings reveal the factors driving students towards these technologies and the impact on learning outcomes.

Key Highlights:

Researchers have crafted a new scale specifically designed to quantify ChatGPT usage among university students to understand how deeply integrated this AI technology is in their academic lives.

The findings reveal that students overwhelmed by heavy academic workloads and tight deadlines are more inclined to use ChatGPT.

Interestingly, students who are highly motivated by rewards and the prospect of good grades exhibit less interest in using ChatGPT. The potential risks associated with being caught or receiving lower marks for AI-generated work seem to outweigh the benefits for this group.

Students who frequently use ChatGPT tend to procrastinate more, experience memory loss, and see a decline in their academic performance. Those who are sensitive to rewards and avoid ChatGPT show lower levels of procrastination and memory loss.

Tools of the Trade ⚒️

Claude-Journalist: Use Claude 3 Haiku to research, write, and edit high-quality articles on any given topic. It utilizes web search APIs to gather relevant information, analyzes the content, and generates well-structured, informative, and engaging articles that read as if they could be published in major media publications.

Induced AI: A browser-based automation platform that leverages AI to streamline repetitive tasks. Users can teach the AI agent a workflow once, and the system will subsequently handle those tasks autonomously. Tasks can range from monotonous chores to structuring content in ways that require human-like reasoning. AI agents can also perform the same task with different inputs, making them dynamic.

Maestro: A Python-based framework that leverages Anthropic’s Claude 3 models (Opus and Haiku) to orchestrate complex tasks. It intelligently breaks down goals into smaller subtasks, executes those subtasks, and then combines the results into a refined final product.

Eggnog: AI-powered video creation platform that solves the problem of inconsistent characters in AI-generated content. It allows creators to design custom characters, reuse/remix characters from others, and produce cinematic scenes in a collaborative environment without the need for the extensive resources traditionally required.

😍 Enjoying so far, TWEET NOW to share with your friends!

Hot Takes 🔥

X is nothing without its spambots. ~

One certainty about the next AI wave is that the word “generative” won’t be involved. ~

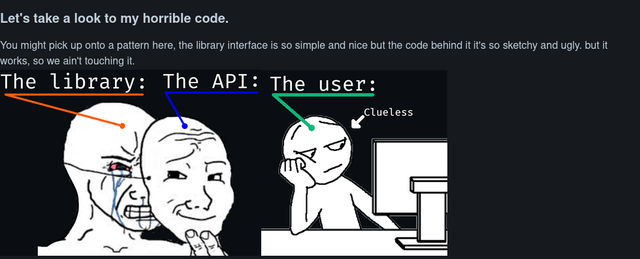

Meme of the Day 🤡

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!
