Google's Gemma with 10 million context

PLUS: Multi-token prediction in LLMs, Create Videos from Image and Text

Today’s top AI Highlights:

Multi-token prediction in LLMs for faster and better LLMs

TikTok to start labeling third-party AI-generated content

OpenAI’s model spec doc to guide AI behavior for models like GPT-5

Context window of Google’s LLM Gemma scaled from 8K to 10 million tokens

& so much more!

Read time: 3 mins

Latest Developments 🌍

Multi-token Prediction in LLMs for More Efficiency 🔡

LLMs like GPT and Llama models rely on next-token prediction, where the model predicts only the next token in a sequence. This approach is inefficient because it focuses on local patterns and neglects the broader context, leading to slower learning and higher data consumption. Meta has released a new multi-token prediction method in which the model predicts several future tokens simultaneously, without extra training time or memory overhead. This improves both the efficiency and the performance of LLMs.
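To make the difference concrete, here is a minimal sketch of how the training targets change. It only builds the targets and does not train a model; the function name `multi_token_targets` and the toy token list are assumptions for illustration, not code from Meta's release.

```python
def multi_token_targets(tokens, n_future):
    """For each position, return the next n_future tokens as training targets.

    Positions too close to the end to have n_future successors are dropped.
    """
    if n_future < 1:
        raise ValueError("n_future must be at least 1")
    examples = []
    for i in range(len(tokens) - n_future):
        last_seen = tokens[i]  # last token of the context at this position
        targets = tokens[i + 1 : i + 1 + n_future]
        examples.append((last_seen, targets))
    return examples


# Toy tokenised snippet (hypothetical, for illustration only).
tokens = ["def", "add", "(", "a", ",", "b", ")"]

# Classic next-token prediction: one target per position.
next_token = multi_token_targets(tokens, n_future=1)

# Multi-token prediction: four targets per position.
four_token = multi_token_targets(tokens, n_future=4)
```

With `n_future=1` every position is asked for a single token, while with `n_future=4` the same position has to account for the next four tokens at once, which is the "broader context" pressure described above.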

Key Highlights:

Better Efficiency: Multi-token prediction reduces the reliance on local patterns so the models can learn from a broader context and reduce the data needed to reach similar levels of fluency.

Better Performance: Models trained with this method solved 12% more problems on HumanEval and 17% more on MBPP compared to traditional next-token models.

Faster Inference: This architecture enables self-speculative decoding, speeding up inference by up to 3x, making it ideal for real-world applications.

Stronger Reasoning Skills: Multi-token prediction also promotes the development of induction heads and algorithmic reasoning capabilities in LLMs.
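The faster-inference point can be pictured with a toy version of self-speculative decoding: the extra heads draft a few tokens ahead, and the regular next-token head checks them. This is a sketch under stated assumptions, not Meta's implementation; `next_token` and `draft_tokens` are hypothetical stand-ins for a real model's heads, and each loop round stands for roughly one forward pass because a real model verifies the drafted tokens in a single batch.

```python
def next_token(seq):
    """Stand-in for the trusted next-token head: counts upward modulo 10."""
    return (seq[-1] + 1) % 10


def draft_tokens(seq, k):
    """Stand-in for the extra heads: guess k tokens ahead, sometimes wrongly."""
    guesses = []
    last = seq[-1]
    for _ in range(k):
        last = (last + 1) % 10
        guesses.append(0 if last == 7 else last)  # deliberate mistake at 7
    return guesses


def greedy_decode(prompt, max_new_tokens):
    """Baseline: one verified token per pass."""
    seq = list(prompt)
    for _ in range(max_new_tokens):
        seq.append(next_token(seq))
    return seq


def speculative_decode(prompt, max_new_tokens, k=3):
    """Draft k tokens per round and keep them only while they match."""
    seq = list(prompt)
    rounds = 0
    target_len = len(prompt) + max_new_tokens
    while len(seq) < target_len:
        rounds += 1  # one draft-and-verify round
        for guess in draft_tokens(seq, k):
            verified = next_token(seq)
            seq.append(verified)  # the verified token is always the one kept
            if guess != verified or len(seq) == target_len:
                break  # stop at the first wrong draft or when done
    return seq, rounds


spec_seq, spec_rounds = speculative_decode([0], max_new_tokens=9, k=3)
baseline_seq = greedy_decode([0], max_new_tokens=9)
```

In this toy run the speculative loop produces exactly the same sequence as greedy decoding but needs fewer rounds, because every correctly drafted token is accepted without a separate pass.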

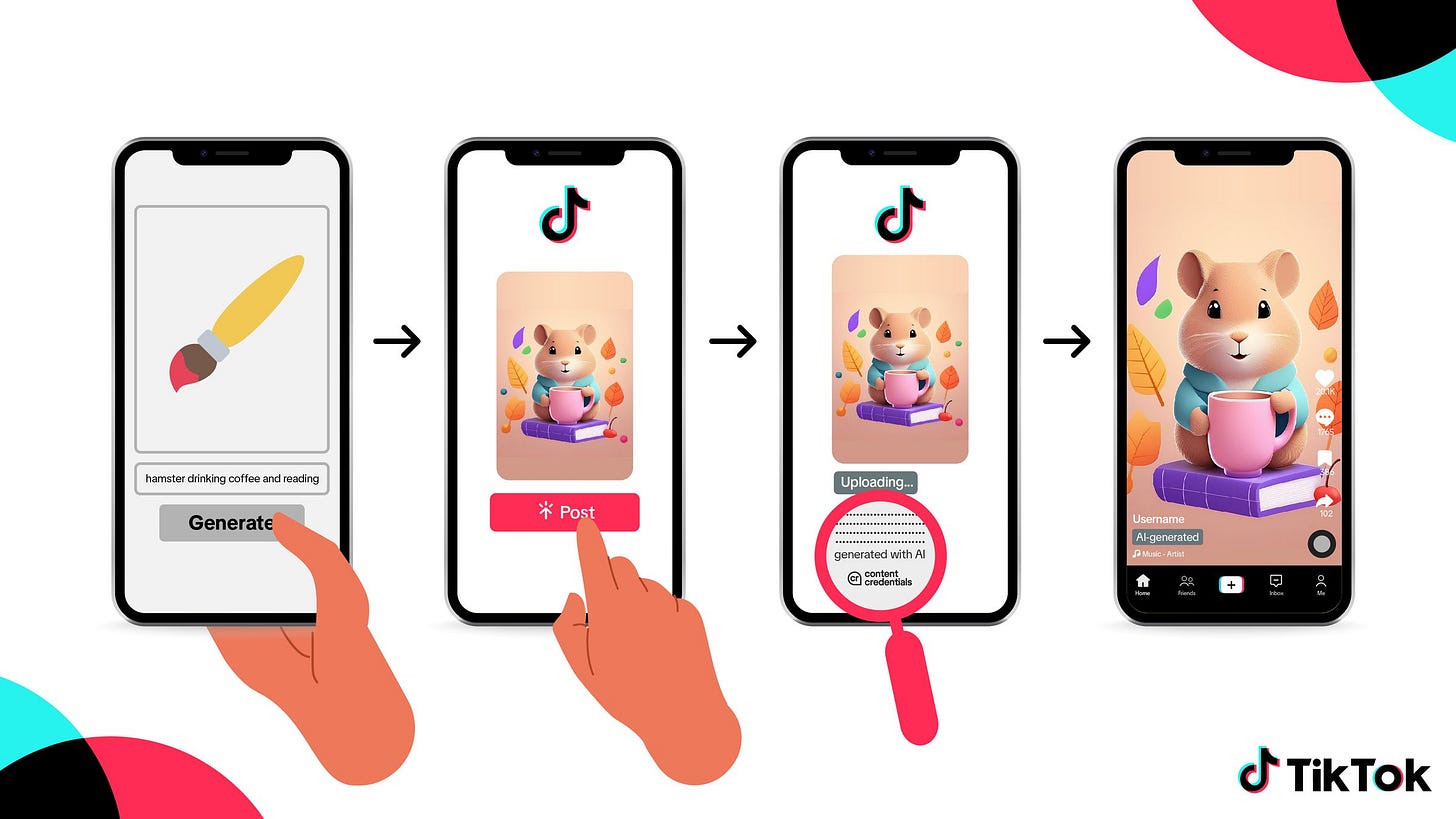

TikTok to Label Third-Party AI-generated content 🪄

TikTok is stepping up its efforts to promote transparency by clearly labeling AI-generated content. The platform will label content generated by TikTok’s own AI as well as content generated by third-party AI tools. It will do this using metadata from Adobe’s Content Authenticity Initiative (CAI) and the Coalition for Content Provenance and Authenticity (C2PA), both of which are metadata tagging systems.

The platform already labels content produced using its own AI, and Meta does the same. However, TikTok will be the first social media platform to label AI-generated content even when other AI tools generated it.
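As a rough illustration of metadata-based labeling, the sketch below checks a provenance manifest for a declared AI source. The `manifest` dictionary is a hypothetical, already-parsed and heavily simplified stand-in (real C2PA manifests are signed structures read with a dedicated library), and the decision rule is an assumption, not TikTok's actual logic.

```python
# IPTC digital source type used to mark media created by a trained AI model.
AI_SOURCE_TYPE = (
    "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)


def should_label_as_ai(manifest):
    """Return True if any recorded action declares an AI-generated source.

    `manifest` is a hypothetical simplified dict, not a real signed manifest.
    """
    if not manifest:
        return False  # no provenance metadata, so nothing to go on
    for assertion in manifest.get("assertions", []):
        if assertion.get("label") != "c2pa.actions":
            continue  # only action records are inspected in this sketch
        for action in assertion.get("data", {}).get("actions", []):
            if action.get("digitalSourceType") == AI_SOURCE_TYPE:
                return True
    return False


# Hypothetical manifest for an image made with a third-party AI tool.
ai_manifest = {
    "assertions": [
        {
            "label": "c2pa.actions",
            "data": {
                "actions": [
                    {
                        "action": "c2pa.created",
                        "digitalSourceType": AI_SOURCE_TYPE,
                    }
                ]
            },
        }
    ]
}

# Hypothetical manifest for an ordinary camera photo.
camera_manifest = {
    "assertions": [
        {"label": "c2pa.actions", "data": {"actions": [{"action": "c2pa.created"}]}}
    ]
}
```

Note the limitation this makes visible: content whose metadata was stripped, or never attached, cannot be caught by this kind of check.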

OpenAI’s Model Spec to Guide AI Behavior 🧑‍🏫

OpenAI has introduced a “Model Spec” document, outlining its approach to shaping AI behavior in its API and ChatGPT. This document serves as a guideline for researchers and data labelers working with reinforcement learning from human feedback (RLHF). It should help more people understand why the models respond the way they do, and it gives us a peek into the otherwise opaque reasoning of these models.

Key Highlights:

What is included: The document establishes a framework with objectives (e.g., assist users, benefit humanity), rules (e.g., comply with laws), and defaults (e.g., assume best intentions) to guide AI behavior and resolve potential conflicts.

Chain of Command: A “chain of command” system prioritizes instructions based on the roles of platform, developer, user, and tools, ensuring clarity in conflict resolution.

Safety and Ethics: It also outlines rules against harmful activities like illegal actions, information hazards, privacy violations, NSFW content, and self-harm promotion, emphasizing safety and ethical considerations.

What can be inferred: GPT-5 and other future AI models are expected to excel at reasoning and should respond more safely. The number of rules and defaults should help GPT-5 give more contextual responses.

Doc’s weaknesses: A major part of the document is about aligning the model with human values. Notions like safety, respect, kindness, and hatred can be very subjective. Also, the document doesn’t show specific examples of RAG and tool use, which have become essential.
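The “chain of command” idea can be pictured as a simple priority lookup. This is a toy sketch only: the role order follows the list above, but the `(role, setting, value)` tuple format and the `resolve` function are assumptions for illustration, and the actual Model Spec describes more nuance than a strict ranking.

```python
# Lower number means higher priority, following platform > developer > user > tool.
ROLE_PRIORITY = {"platform": 0, "developer": 1, "user": 2, "tool": 3}


def resolve(instructions):
    """For each setting, keep the value given by the highest-priority role.

    `instructions` is a list of (role, setting, value) tuples.
    """
    chosen = {}
    for role, setting, value in instructions:
        rank = ROLE_PRIORITY[role]
        # Replace the stored value only if this role outranks the current one.
        if setting not in chosen or rank < chosen[setting][0]:
            chosen[setting] = (rank, value)
    return {setting: value for setting, (_, value) in chosen.items()}


# Hypothetical conflicting instructions.
conflicting = [
    ("user", "language", "French"),
    ("developer", "language", "English"),
    ("tool", "tone", "casual"),
    ("user", "tone", "formal"),
]

resolved = resolve(conflicting)
```

Here the developer’s language choice wins over the user’s, and the user’s tone wins over the tool’s, which is the conflict-resolution behavior the highlight describes.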

Context Window Scaled from 8K to 10 Million Tokens 🧠

Google released Gemma, its series of small open-source models, with a context window of 8K tokens. A team has now scaled that context window from 8K tokens to a staggering 10 million tokens. This window can hold thousands of documents or research papers, or even 30-50 novels! Despite its large capacity, Gemma 2B-10M is designed to run efficiently, requiring less than 32GB of memory.

Key Highlights:

Technique: It uses local attention blocks (as outlined in the Infini-attention paper) and applies recurrence across these blocks, resulting in global attention over a 10M-token context.

Memory Efficient: Gemma 2B-10M requires less than 32GB of memory, making it accessible on a wider range of hardware compared to other large context models.

Progressive Learning Approach: The model starts with shorter sequences and gradually increases its capacity to understand longer contexts. This step-by-step approach helps the AI learn more effectively, much like building knowledge from the ground up, making it more powerful as it progresses.
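A quick back-of-the-envelope calculation shows why local blocks matter at this scale. It compares the size of the attention score matrix for full attention over 10M tokens with that of a single local block. The block size of 2048 and the 16-bit scores are assumptions chosen for illustration, not figures from the Gemma 2B-10M project.

```python
BYTES_PER_SCORE = 2      # assumes 16-bit attention scores
SEQ_LEN = 10_000_000     # the 10M-token context from the text
BLOCK_SIZE = 2048        # hypothetical local block size


def score_matrix_bytes(num_queries, num_keys, bytes_per_score=BYTES_PER_SCORE):
    """Memory needed to hold one query-by-key attention score matrix."""
    return num_queries * num_keys * bytes_per_score


# Full global attention: every token attends to every token.
full_bytes = score_matrix_bytes(SEQ_LEN, SEQ_LEN)

# Local attention: only one block's scores exist at a time.
block_bytes = score_matrix_bytes(BLOCK_SIZE, BLOCK_SIZE)

# Number of blocks visited in sequence (ceiling division).
num_blocks = -(-SEQ_LEN // BLOCK_SIZE)

print(f"full attention:  {full_bytes / 1e12:.0f} TB per head")
print(f"one local block: {block_bytes / 2**20:.0f} MiB per head")
print(f"blocks processed in sequence: {num_blocks}")
```

Under these assumptions the full score matrix would need about 200 TB per attention head, while a single block needs about 8 MiB, so the cost shifts from memory to a sequential pass over a few thousand blocks, with recurrence carrying information from one block to the next.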

😍 Enjoying so far, share it with your friends!

Tools of the Trade ⚒️

Conversational AI Teams by Synthflow: Create entire virtual teams or businesses of customer representatives with AI agents that sound just like humans. These AI agents work together with different tools to automate the workflow. While one AI agent talks to the customer, another schedules the appointment, another updates the CRM, and so on.

Markdowner: An open-source tool that quickly converts any website into markdown format, optimized for LLMs. By entering a URL and configuring optional parameters, you can get the markdown data including content from subpages.

ScrapeGraphAI: A Python web scraping library that uses LLMs and direct graph logic to create scraping pipelines for websites, documents, and XML files. Just say what information you want to extract and the library will do it for you.

Krea Video: Create videos using images and text descriptions. You can define specific moments with keyframe images and guide the overall look with text prompts, all within a customizable timeline. Generate videos with different styles, aspect ratios, and levels of motion, with granular control.

Hot Takes 🔥

Until now, humans learned the language of machines - code

Going forward, machines will learn the language of humans - English ~ Bindu Reddy

I learn how to speak English to the AI.

Prompt engineering is a thing. ~ Robert Scoble

One thing that I’ve discovered about tech is that no matter how “crazy” or “wild” or “original” your idea is, some startup has been already been working on it for the past decade. ~ Bojan Tunguz

Meme of the Day 🤡

Product development meeting for Rabbit R1

But people still cracked Rabbit R1 and found out it’s Android 🤷‍♀️

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!