Meta releases Llama 3.1 405B with 128k context

PLUS: Tesla to sell Optimus Robot by 2026, Nvidia's AI foundry to build and deploy GenAI apps

Today’s top AI Highlights:

Meta releases Llama 3.1 405B model and upgraded 8B and 70B models

NVIDIA’s new AI Foundry to customize Llama 3.1 models with 2.5x throughput

Tesla will begin selling Optimus robot by 2026

Recreate your portraits with Meta AI’s “Imagine Me”

Create OpenAI-like API for Llama 3.1 and deploy it locally on your computer (100% free and without internet)

& so much more!

Read time: 3 mins

Latest Developments 🌍

Llama 3.1 405B Leads the Open-Source AI Charge 🏆

Meta has released its new Llama 3.1 series, including the much-anticipated Llama 3.1 405B. Meta describes it as the first frontier-level open-source AI model, with state-of-the-art capabilities that compete with the best closed-source models. The family also includes upgraded versions of the 8B and 70B models. All Llama 3.1 models are multilingual and offer a longer context window of 128K tokens, state-of-the-art tool use, and stronger overall reasoning.

Key Highlights:

Architecture - Llama 3.1 405B uses a standard decoder-only transformer architecture with minor adaptations for training stability. Post-training relied on supervised fine-tuning and direct preference optimization, with high-quality synthetic data generated along the way to improve performance.

Training - Llama 3.1 405B is trained on over 15 trillion tokens, utilizing over 16,000 H100 GPUs.

Inference Optimization - The model was quantized from 16-bit (BF16) to 8-bit (FP8) numerics, which lowers compute requirements and lets it run within a single server node.

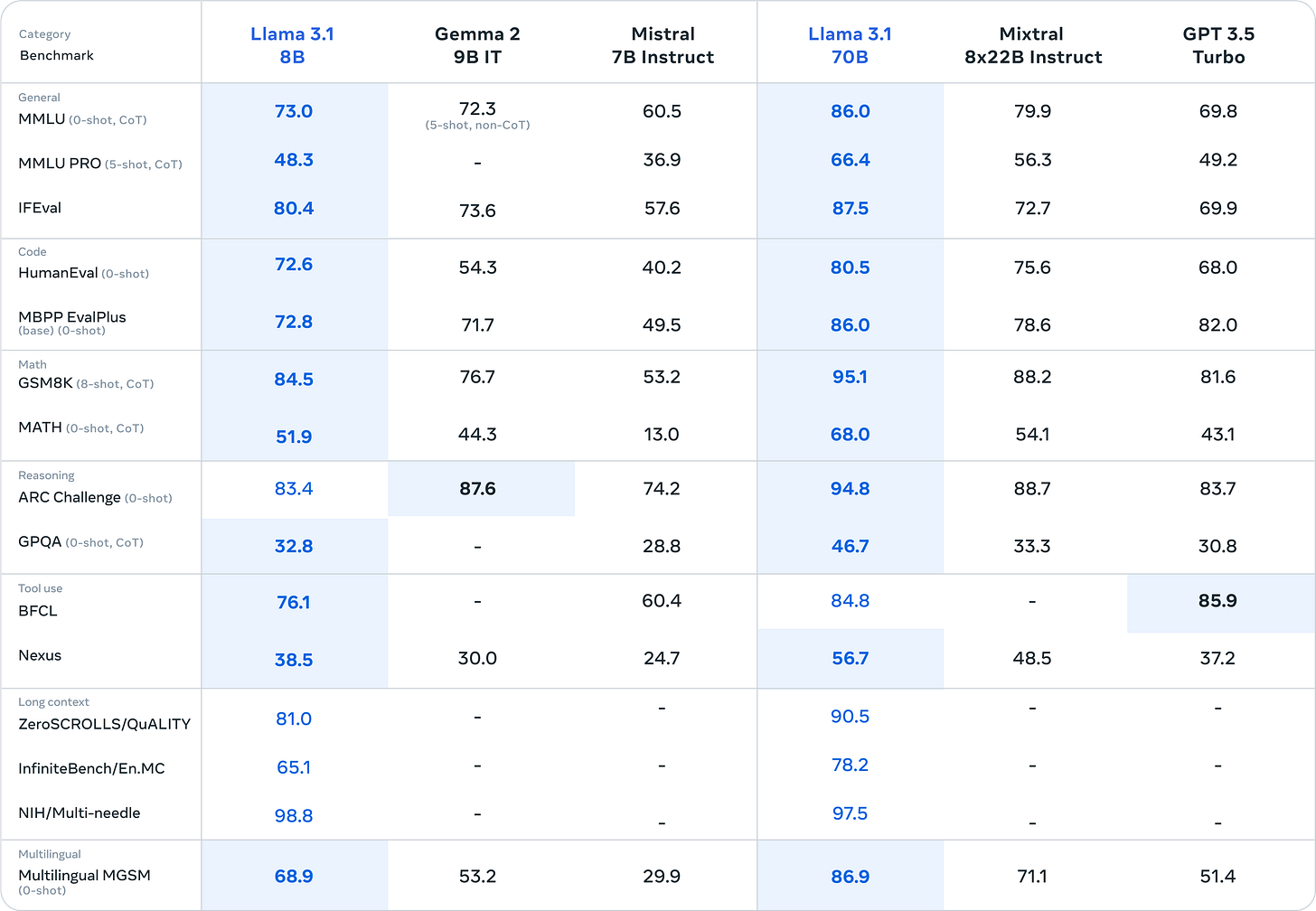

Performance - Llama 3.1 405B competes strongly with the leading closed models GPT-4o and Claude 3.5 Sonnet, even outperforming them on a few tasks such as math and tool use. Llama 3.1 8B and 70B are the best in their class, outperforming Gemma 2, the Mixtral models, and even GPT-3.5 Turbo.

Building with Llama 3.1 - Llama 3.1 models are available to download on Meta’s website and Hugging Face. Meta has partnered with AWS, Nvidia, Databricks, Groq, Dell, and others to give you the tools for real-time and batch inference, fine-tuning with your own data, evaluating their performance for your specific needs, generating synthetic data, and more.

Chat on Meta AI - Llama 3.1 models are also available to chat with on Meta AI. By default, the chatbot uses Llama 3.1 70B. You can manually switch to the 405B model for complex questions, but it is limited to a few responses.
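To see why the Inference Optimization point above matters, here is a back-of-the-envelope sketch of the memory needed just to hold the weights. It assumes 2 bytes per parameter for BF16 and 1 byte for FP8, and a hypothetical server with 8 GPUs of 80 GB each (the node size is an assumption, not something stated above). It ignores the KV cache and activations, so real requirements are higher.

```python
PARAMS = 405e9  # parameter count implied by the model name

BYTES_PER_PARAM = {"BF16": 2, "FP8": 1}

# Assumption for illustration: one server node with 8 GPUs x 80 GB each.
NODE_MEMORY_GB = 8 * 80


def weight_memory_gb(params, fmt):
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[fmt] / 1e9


for fmt in BYTES_PER_PARAM:
    gb = weight_memory_gb(PARAMS, fmt)
    fits = gb <= NODE_MEMORY_GB
    print(f"{fmt}: {gb:.0f} GB of weights, fits in one {NODE_MEMORY_GB} GB node: {fits}")
```

Under these assumptions the BF16 weights come to 810 GB, more than the 640 GB node, while the FP8 weights come to 405 GB and leave headroom for everything else.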

Fine-tune Llama 3.1 with NVIDIA’s All-in-one AI Foundry 🧰

NVIDIA has introduced “AI Foundry,” a new platform for enterprises to build and deploy custom generative AI models using the new Meta Llama 3.1 models. This platform offers comprehensive tools for creating domain-specific models, fine-tuning with proprietary or synthetic data, and deploying them using NVIDIA’s optimized infrastructure. Along with this, NVIDIA has also released NeMo Retriever microservices to enhance RAG pipelines by improving response accuracy.

Key Highlights:

Custom Model Creation - AI Foundry lets businesses create custom “supermodels” based on the new Llama 3.1 models using proprietary or synthetic data. The platform includes tools for synthetic data generation, fine-tuning, evaluation, and deploying guardrails.

Data for fine-tuning - Enterprises that need additional training data for creating a domain-specific model can use Llama 3.1-405B and Nemotron-4 340B together to generate synthetic data.

Performance Enhancement - AI Foundry integrates NVIDIA NIMs optimized to deliver up to 2.5x higher throughput for Llama 3.1 models compared to standard deployments. This ensures that AI applications can run with lower latency and higher efficiency.

Optimized Infrastructure - Powered by the NVIDIA DGX Cloud AI platform, AI Foundry leverages significant compute resources that can scale with changing AI demands. The platform is co-engineered with leading public clouds, providing flexibility and scalability for enterprises to deploy AI models.

NeMo Retriever Microservices - NVIDIA has also released NeMo Retriever microservices to enhance response accuracy in RAG. These microservices include models optimized for embedding, QA, and data retrieval for more precise and context-aware outputs.
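For readers new to RAG, the retrieval step mentioned above can be sketched in a few lines. This is a toy illustration only: it swaps in bag-of-words counts and cosine similarity for a real embedding model, and it does not use NeMo Retriever or any NVIDIA API. The function names and sample documents are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Put the retrieved context in front of the question for the LLM."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical sample documents for illustration.
DOCS = [
    "Llama 3.1 models support a context window of 128K tokens.",
    "Tesla plans to sell the Optimus robot to other companies by 2026.",
    "NeMo Retriever microservices target retrieval-augmented generation.",
]

print(build_prompt("What is the context window of Llama 3.1?", DOCS))
```

The better the retriever ranks the right passage first, the more accurate the final answer tends to be, which is the part dedicated embedding and retrieval models aim to improve.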

Quick Bites 🤌

Elon Musk, just like always, announced via a comment on X that Tesla plans to start selling its humanoid robot Optimus to other companies by 2026. Next year, Optimus is expected to be in low production for Tesla’s internal use.

Five U.S. senators have demanded that OpenAI provide data on the safety and security measures for its AI systems. Following concerns raised by former OpenAI employees like Jan Leike and Leopold Aschenbrenner, they asked CEO Sam Altman how the company prevents misuse of AI, ensures employee safety, and addresses cybersecurity threats.

Meta has expanded access to Meta AI to more countries and more languages, including French, German, and Hindi, across all its platforms, including WhatsApp, Facebook, and Instagram. New announcements regarding the Meta AI chatbot:

“Imagine me” prompts in Meta AI will let you recreate your image based on a photo of you and a text prompt.

You can add, remove, change, and edit objects in images with simple prompts.

Meta AI will be rolled out next month on Meta Quest in the US and Canada in experimental mode, replacing the current Voice Commands.


Tools of the Trade ⚒️

Llama 3.1 on LM Studio: Create an OpenAI-like API for the newly launched Llama 3.1 and deploy it locally on your computer in just 2 minutes using LM Studio.

Glaive: Build custom models using synthetic data. You can train models for any use case by creating a dataset for it, measure performance, and improve the model by updating or transforming the dataset based on feedback.

Robbie: AI agent that navigates GUIs to solve tasks for you. It uses OCR, Canny Composite, and Grid to navigate both web and desktop GUIs, making it versatile for various tasks like sending emails and searching for flights.

Awesome LLM Apps: Build awesome LLM apps using RAG to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple text. These apps will let you retrieve information, engage in chat, and extract insights directly from content on these platforms.
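If you try the LM Studio route above, talking to the local server could look roughly like this. It is a minimal sketch using only the Python standard library, assuming the server is running at LM Studio's default address of http://localhost:1234/v1 and exposes an OpenAI-style chat completions endpoint. The model identifier is a placeholder, so replace it with the name LM Studio shows for your download.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port.
BASE_URL = "http://localhost:1234/v1"
# Placeholder identifier; use the model name shown in LM Studio.
MODEL = "llama-3.1-8b-instruct"


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL):
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server started and a model loaded, calling ask("Summarize Llama 3.1 in one sentence.") should return the model's reply as a string. Because the request shape follows the OpenAI chat format, existing OpenAI client code can usually be pointed at the local base URL instead.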

Hot Takes 🔥

Is there any clarity on what X’s Grok is for? This isn’t snark: are spending a lot on training & have talent, but unclear what’s next. If B2B, they haven’t built out their API & not built for commercial users. On consumer, the chatbot is lagging others. Just powering Elonverse? ~ Ethan Mollick

Kind of wild to think meta is training and releasing open weights models faster than OpenAI can release closed models. Dude is already cooking llama 4 ~ anton

Meme of the Day 🤡

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!