Llama-3 405B Outperforms GPT-4o (Leak)

PLUS: Open-source hallucination detection model, AI-powered local chatbot assistant

Today’s top AI Highlights:

Meta’s Llama 3 405B could close the gap between open-source and closed AI models

Patronus AI releases open-source AI hallucination detection model

Microsoft’s new study on boosting RAG performance with a unified database

Cohere raises $500 million in Series D funding

Developer platform to ship reliable LLM agents 10x faster

& so much more!

Read time: 3 mins

Latest Developments 🌍

Llama-3 405B Base Model Outperforms GPT-4o 😳

Llama-3 405B is expected to be released today, but the model leaked on Hugging Face yesterday. It was later taken down, but the leak offered a sneak peek at the model’s features and performance. According to the leaked benchmark numbers, the base model alone outperforms the state-of-the-art GPT-4o across multiple benchmarks. Here are the details we could gather from Reddit:

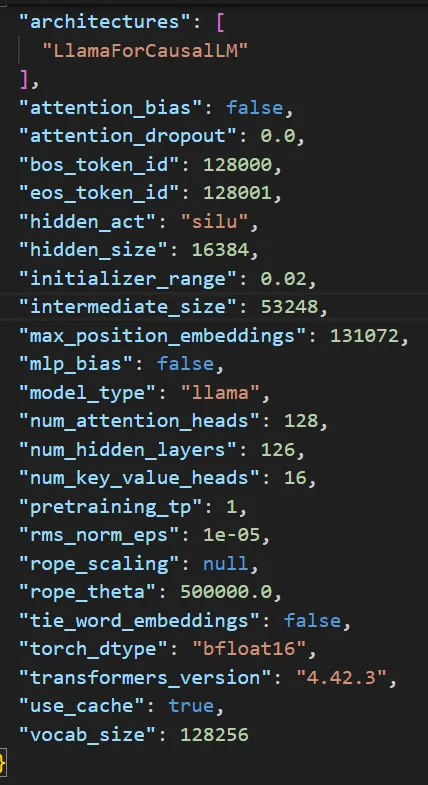

Technical Specifications - The leaked Llama-3 405B configuration lists 126 hidden layers, 128 attention heads, and a hidden size of 16,384.

Performance Metrics - In the leaked benchmark results, the model outperforms GPT-4o on several benchmarks, including MMLU, BoolQ, GSM8K, and HellaSwag.

More Features - The model has a vocabulary of 128,256 tokens and uses the bfloat16 data type. It is also reportedly designed to handle long contexts efficiently.
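The leaked figures can be sanity-checked with a little arithmetic. This minimal sketch derives the per-head dimension and the size of one token-embedding matrix from the numbers above, and estimates the memory needed just to store the weights in bfloat16 (2 bytes per value). The 405B parameter count is an assumption taken from the model name and treated as approximate; the estimate ignores activations, the KV cache, and any optimizer state.

```python
# Back-of-the-envelope arithmetic on the leaked Llama-3 405B specs.
# Assumption: total parameter count is approximated as 405e9 from the model name.

HIDDEN_SIZE = 16_384
NUM_HEADS = 128
VOCAB_SIZE = 128_256
BYTES_PER_BF16 = 2          # bfloat16 stores each value in 16 bits
APPROX_PARAMS = 405e9


def head_dim(hidden_size: int, num_heads: int) -> int:
    """Per-head dimension when the hidden state is split evenly across heads."""
    assert hidden_size % num_heads == 0, "hidden size must divide evenly"
    return hidden_size // num_heads


def embedding_params(vocab_size: int, hidden_size: int) -> int:
    """Parameters in one (vocab x hidden) embedding or output-projection matrix."""
    return vocab_size * hidden_size


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    print(f"head dim: {head_dim(HIDDEN_SIZE, NUM_HEADS)}")                     # 128
    print(f"embedding params: {embedding_params(VOCAB_SIZE, HIDDEN_SIZE):,}")  # 2,101,346,304
    print(f"bf16 weights: ~{weight_memory_gb(APPROX_PARAMS, BYTES_PER_BF16):.0f} GB")  # ~810 GB
```

By this estimate the embedding table is only about 2.1B parameters, roughly half a percent of the total, so nearly all of the weights sit in the 126 transformer layers. Around 810 GB for the weights alone also means the full model will not fit on a single accelerator.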

Leading Hallucination Detection Model Beating GPT-4o

Although RAG systems are designed to reduce LLM hallucinations, they still struggle to consistently produce text grounded in the provided information. This unreliability is particularly noticeable in specialized domains like finance and medicine.

To address this, Patronus AI has released LYNX, a family of open-source hallucination detection models built for strong performance and transparency. In benchmark tests, LYNX outperforms existing models, including GPT-4o, at detecting hallucinations.

Key Highlights:

Performance - LYNX-70B is the largest open-source hallucination detection model to date, and it surpasses even GPT-4o at identifying hallucinations. On the PubMedQA dataset, LYNX-70B was 8.3% more accurate than GPT-4o at detecting inaccurate medical information.

Explainable AI - LYNX doesn’t just provide a score; it also explains the reasoning behind its evaluation, much as a human reviewer would. This makes its results more transparent and easier to understand.

Tackling Subtle Hallucinations - LYNX excels in a particularly challenging area: identifying subtle and ambiguous hallucinations that other models often miss. Patronus AI attributes this to its training approach, which includes semantic perturbations, where slight alterations in meaning are introduced into the training examples.

Introducing HaluBench - To advance research on hallucination detection in real-world scenarios, Patronus AI has also released HaluBench, a new public benchmark of 15,000 samples spanning diverse domains, including finance and medicine.
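To make the idea of a semantic perturbation concrete, here is a toy sketch that turns a grounded answer into a subtly unfaithful one by changing a single number, producing a labeled pair of training examples. This is an illustrative assumption, not Patronus AI’s actual data pipeline: the `Sample` fields and the PASS/FAIL labels are hypothetical, and the one-number rule is deliberately simplistic compared with the perturbations a real pipeline would use.

```python
import re
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Sample:
    """Hypothetical hallucination-detection sample (fields are assumptions)."""
    question: str
    context: str
    answer: str
    label: str  # "PASS" if the answer is grounded in the context, else "FAIL"


def perturb_number(answer: str, delta: int = 1) -> str:
    """Toy semantic perturbation: shift the first integer in the answer by `delta`.

    The sentence stays fluent and plausible, but its meaning no longer matches
    the context, which is what makes this kind of hallucination hard to spot.
    """
    match = re.search(r"\d+", answer)
    if match is None:
        raise ValueError("answer contains no number to perturb")
    new_value = int(match.group()) + delta
    return answer[: match.start()] + str(new_value) + answer[match.end():]


def make_pair(sample: Sample) -> tuple[Sample, Sample]:
    """Return the original grounded sample and a perturbed, hallucinated copy."""
    hallucinated = replace(sample, answer=perturb_number(sample.answer), label="FAIL")
    return sample, hallucinated


if __name__ == "__main__":
    grounded = Sample(
        question="How many patients were enrolled?",
        context="The trial enrolled 120 patients.",
        answer="The trial enrolled 120 patients.",
        label="PASS",
    )
    for s in make_pair(grounded):
        print(s.label, "|", s.answer)
```

A detector trained on pairs like these has to compare the answer against the context detail by detail, since surface fluency gives no hint that the perturbed answer is wrong.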

Unified Database to Boost RAG and LLM Performance 🗃️

LLMs rely on fixed training data, which limits their ability to access real-time information and can lead to inaccurate or outdated responses. RAG addresses this by incorporating up-to-date information from external sources, but it doesn’t fully solve the problem because managing diverse, multimodal knowledge bases remains challenging.

To address this, Microsoft has introduced a unified database system that can manage and query various data types, including text, images, and videos.

Key Highlights:

Unified Database System - The system handles both vector data (embeddings that capture semantic relationships) and scalar data (traditional structured data), so a single complex query can combine both types. The team reports these queries run 10 to 1,000x faster than with traditional methods, improving the speed and accuracy of information retrieval for LLMs.

SPFresh Technology - This vector index uses a lightweight protocol to incorporate updates in real time, eliminating the need for resource-intensive periodic rebuilds and keeping the data LLMs retrieve up to date.

Sparse and Dense Vector Search - This system efficiently handles both sparse and dense vectors, enabling multi-index mixed queries that cater to diverse data types and encoding methods for more comprehensive search and retrieval.

Open-source platform - The team has open-sourced their unified database system, MSVBASE, on GitHub, so you can build and experiment with complex RAG systems.
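What a mixed vector-plus-scalar query computes can be sketched in a few lines. The brute-force Python below filters documents on a scalar attribute, then ranks the survivors by a weighted blend of dense similarity (cosine over embeddings) and sparse similarity (dot product over term weights). It is a toy illustration of the query semantics only: it does not use MSVBASE’s API or SPFresh’s index structures, and the `Doc` fields, the `alpha` weight, and the linear blend are assumptions. A real system would replace the linear scan with vector indexes.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    year: int                  # scalar (structured) attribute
    dense: list[float]         # dense embedding
    sparse: dict[str, float]   # sparse vector: term -> weight


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def sparse_dot(a: dict[str, float], b: dict[str, float]) -> float:
    # Iterate over the smaller vector; only shared terms contribute.
    if len(a) > len(b):
        a, b = b, a
    return sum(w * b.get(term, 0.0) for term, w in a.items())


def hybrid_search(docs: list[Doc], query_dense: list[float],
                  query_sparse: dict[str, float], min_year: int,
                  k: int = 3, alpha: float = 0.7) -> list[str]:
    """Scalar filter first, then rank by alpha*dense + (1 - alpha)*sparse score."""
    candidates = [d for d in docs if d.year >= min_year]
    scored = [
        (alpha * cosine(d.dense, query_dense)
         + (1 - alpha) * sparse_dot(d.sparse, query_sparse), d.doc_id)
        for d in candidates
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable on ties
    return [doc_id for _, doc_id in scored[:k]]


if __name__ == "__main__":
    docs = [
        Doc("a", 2021, [1.0, 0.0], {"rag": 1.0}),
        Doc("b", 2024, [0.9, 0.1], {"rag": 0.5, "video": 1.0}),
        Doc("c", 2024, [0.0, 1.0], {"image": 1.0}),
    ]
    print(hybrid_search(docs, [1.0, 0.0], {"rag": 1.0}, min_year=2023, k=2))
```

Applying the scalar filter before ranking guarantees every result satisfies the predicate. With an approximate vector index, filtering only after retrieval can return fewer than `k` matches, which is one motivation for handling both data types inside a single engine.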

Quick Bites 🤌

Toronto-based AI company Cohere has raised $500 million in Series D funding, valuing it at $5.5 billion post-money. The round was led by PSP Investments, alongside a syndicate of new investors including Cisco Systems, Japan’s Fujitsu, and AMD Ventures, the venture arm of chipmaker Advanced Micro Devices.

Nvidia is reportedly developing a version of its new flagship Blackwell AI chip for the Chinese market that would comply with current U.S. export controls. Nvidia will work with Inspur, one of its major distributor partners in China, to launch and distribute the chip, named the “B20.”

😍 Enjoying it so far? Share it with your friends!

Tools of the Trade ⚒️

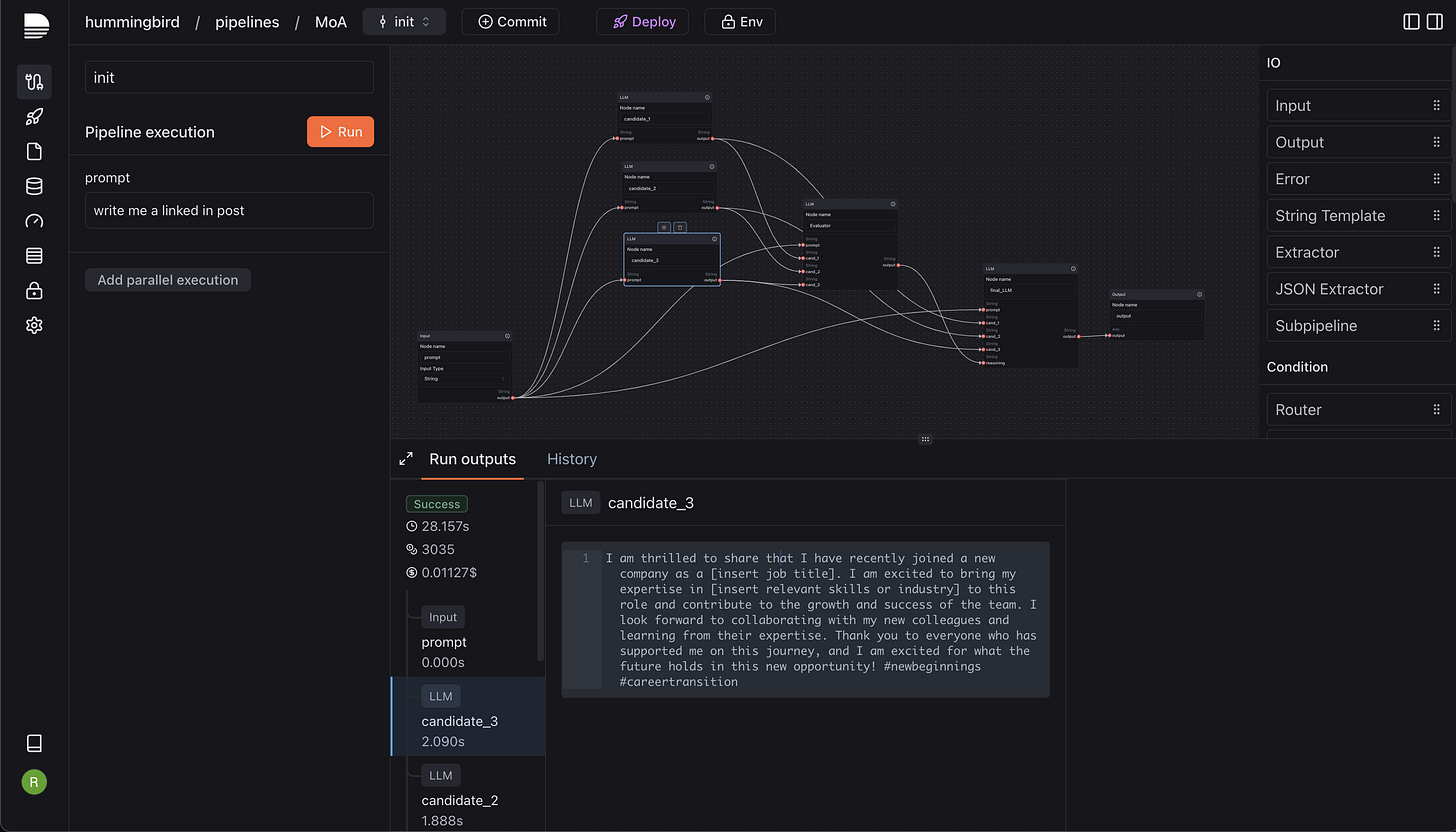

Laminar: Build LLM agents using a visual programming interface and integrate dynamic graphs for rapid experimentation. Utilize RAG for semantic search, switch between various LLM providers, and deploy scalable pipelines with a remote debugger and real-time collaboration.

everything-ai: A local open-source AI-powered chatbot assistant that supports tasks like RAG, summarization, audio translation, and image generation. It leverages various open-source libraries.

Sparrow: Open-source solution for extracting and processing data from documents and images. It supports local LLM data extraction, providing APIs for transforming unstructured data into structured formats for custom workflows.

Awesome LLM Apps: Build awesome LLM apps that use RAG to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple text prompts. These apps let you retrieve information, chat, and extract insights directly from content on these platforms.

Hot Takes 🔥

The risk of application copilots is that they hide many of the really powerful features of AI to make LLMs feel safe.

So it is powerful as an initial model for getting companies to adopt AI systems, but it is hard to get real benefits because all the sharp edges are sanded off. ~

Ethan Mollick

it's just so weird how the people who should have the most credibility--sam, demis, dario, ilya, everyone behind LLMs, scaling laws, RLHF, etc--also have the most extreme views regarding the imminent eschaton, and that if you adopt their views on the imminent eschaton, most people in the field will think *you're* the crazy one ~

James Campbell

Meme of the Day 🤡

Not a meme! But this deserved a space

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE; your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!